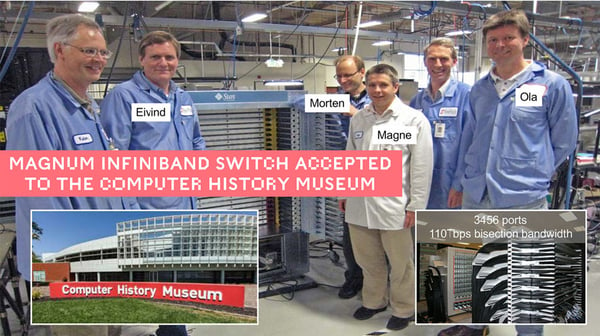

Ten years ago, a small team based in Oslo, Norway, developed the world’s highest-performance networking switch, the Sun Microsystems Magnum. In June this year, the Computer History Museum in Mountain View, California, chose this breakthrough product as an example of a milestone in the development of high-performance computing. Today, the same world-class team is working at Graphcore, where we are developing new switch and system technology that will allow cloud companies to deploy Intelligence Processing Units (IPUs) at rack scale.

The Magnum switch our team developed has a total of 3,456 ports and an unprecedented bisection bandwidth of 110 Tbps, making it the largest and highest-performance networking switch ever built. Built around a novel five-stage Clos fabric, Magnum achieves a port-to-port latency of only 700 ns. This performance relies on an orthogonal midplane with 27,648 high-speed 20 Gbps differential pairs, supporting 24 line cards with a total of 1,152 physical 12-lane connectors, each of which carries three 4-lane ports. Housed in a double-width rack chassis, the Magnum switch delivered the lowest-latency, high-bandwidth InfiniBand switching to the class-leading high-performance computing (HPC) system of its day, and ten years later it is still the world’s most scalable switch.
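The connector, port and midplane figures above are internally consistent, which a quick calculation confirms. The sketch below simply multiplies them out. The assumption that each lane uses one transmit and one receive differential pair is this example's, not something the article states.

```python
# Arithmetic check of the Magnum figures quoted above.
# Assumption (not from the article): each lane of a 4-lane port
# uses one transmit pair and one receive pair.

LINE_CARDS = 24
CONNECTORS = 1152          # 12-lane connectors across all line cards
PORTS_PER_CONNECTOR = 3    # each 12-lane connector carries three 4-lane ports
LANES_PER_PORT = 4
PAIRS_PER_LANE = 2         # assumed: one TX pair + one RX pair

connectors_per_card = CONNECTORS // LINE_CARDS
total_ports = CONNECTORS * PORTS_PER_CONNECTOR
total_lanes = total_ports * LANES_PER_PORT
total_pairs = total_lanes * PAIRS_PER_LANE

print(f"connectors per line card: {connectors_per_card}")
print(f"external ports:           {total_ports}")
print(f"external lanes:           {total_lanes}")
print(f"external diff. pairs:     {total_pairs}")
```

It reports 48 connectors per line card, 3,456 ports, 13,824 lanes and 27,648 pairs. Under the stated assumption, the external pair count equals the midplane's 27,648 pairs, which is what one would expect if the midplane carries as much signalling as all external ports combined, though the article does not say this explicitly.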

Today more than 85% of the TOP500 computer systems are built using the Clos fat-tree network architecture that Magnum used. This topology provides constant bisection bandwidth and equal latency per node. The architecture developed by the Magnum team has become the de facto standard for cloud fabrics used by Amazon AWS, Microsoft Azure, Facebook, Google and others. Magnum was the first major system to deploy reliable Software Defined Networking (SDN) at scale, and the technologies it introduced were standardized through the OpenFabrics Alliance (OFA) and the CXP connector standard. Magnum is a classic example of how disruptive innovation can shape an industry and drive technology in the broader market.
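A fat tree keeps bisection bandwidth constant by having every non-top switch devote half its ports to the tier below and half to the tier above, so no tier is oversubscribed. The sketch below sizes such a folded Clos network from switches with radix `k`; the function name `folded_clos` is hypothetical. If one assumes 24-port switch elements (an assumption here, since the article does not name the silicon), a three-tier tree, which is five stages when unfolded, lands exactly on Magnum's 3,456 ports.

```python
def folded_clos(k: int, tiers: int) -> tuple[int, int, int]:
    """Size a full-bisection folded-Clos (fat-tree) network of k-port switches.

    Returns (hosts, switches, stages). Non-top switches split their ports
    k/2 down and k/2 up; top-tier switches point all k ports down.
    'stages' is the worst-case number of switches a packet traverses.
    """
    if k < 2 or k % 2:
        raise ValueError("switch radix k must be an even integer >= 2")
    if tiers < 1:
        raise ValueError("need at least one tier")
    half = k // 2
    hosts = 2 * half ** tiers                          # k^2/2 for 2 tiers, k^3/4 for 3
    switches = (2 * tiers - 1) * half ** (tiers - 1)   # 3k/2 for 2 tiers, 5k^2/4 for 3
    stages = 2 * tiers - 1                             # up to the top tier and back down
    return hosts, switches, stages


for tiers in (1, 2, 3):
    hosts, switches, stages = folded_clos(24, tiers)
    print(f"{tiers} tier(s): {stages}-stage, {hosts} hosts, {switches} switches")
```

With `k = 24`, two tiers reach only 288 hosts, while three tiers reach 3,456 hosts using 720 switches, with every path crossing at most five switches. Port count grows with the cube of the radix, so adding a tier is the main lever for scale.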

The Sun Magnum switch was installed in 2007 at the Texas Advanced Computing Center (TACC) at the University of Texas at Austin as part of the Ranger supercomputer. Ranger linked tens of thousands of processor cores over a fast, high-bandwidth interconnect: 62,976 cores in 15,744 quad-core processors.

In 2008, Ranger became the first supercomputer available to researchers that could approach the petascale mark, with a peak performance of 579.4 teraflops. Ranger served scientific research until it was retired in 2013. During its years of service it helped improve the accuracy of hurricane prediction and ran more than three million simulation experiments for over 4,000 researchers.

Ten years ago, Magnum and HPC represented the forefront of computing; today the new frontier is Machine Intelligence.

Like HPC, Machine Intelligence poses computational and communication challenges, but the requirements are completely different. Unlike traditional HPC, Machine Intelligence uses low-precision arithmetic, and its knowledge models are naturally graph-structured.

Graphcore's IPU is bringing an unprecedented level of performance to these machine learning workloads and is set to become the de facto standard for Machine Intelligence computing. Machine Intelligence workloads rely on communication patterns such as all-reduce, peer-to-peer transfers, broadcast and low-latency synchronization. Because these workloads expose massive parallelism, low-latency synchronization is a key factor in how well they scale. Scalable I/O is also required to feed training and inference data into the Machine Intelligence compute. In addition, data augmentation for image, video and speech is key to maintaining system performance.
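All-reduce, the first pattern listed, leaves every participant holding the element-wise sum of all participants' buffers. One common bandwidth-efficient way to implement it is the ring algorithm: a reduce-scatter phase followed by an all-gather phase. The sketch below simulates that algorithm in plain Python. It is a generic illustration, not a description of how Graphcore's fabric performs all-reduce, and the name `ring_allreduce` is hypothetical.

```python
def ring_allreduce(buffers: list[list[float]]) -> list[list[float]]:
    """Simulate a ring all-reduce (sum) over n workers arranged in a ring.

    Each buffer is split into n chunks. Reduce-scatter: n-1 steps pass partial
    sums to the right until worker r owns the fully reduced chunk (r+1) % n.
    All-gather: n-1 more steps circulate those reduced chunks to everyone.
    """
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all buffers must have the same length")
    data = [list(b) for b in buffers]                  # work on copies
    bounds = [c * length // n for c in range(n + 1)]   # chunk c = bounds[c]:bounds[c+1]

    def chunk(c: int) -> range:
        return range(bounds[c], bounds[c + 1])

    # Reduce-scatter: at step s, worker r sends chunk (r - s) % n to its right
    # neighbour, which adds it into its own copy. Snapshot sends first so that
    # all transfers within a step behave as if simultaneous.
    for s in range(n - 1):
        sends = [(r, (r - s) % n) for r in range(n)]
        payloads = [[data[r][i] for i in chunk(c)] for r, c in sends]
        for (r, c), values in zip(sends, payloads):
            for i, v in zip(chunk(c), values):
                data[(r + 1) % n][i] += v

    # All-gather: at step s, worker r forwards chunk (r + 1 - s) % n, which is
    # already fully reduced, and the receiver overwrites its stale copy.
    for s in range(n - 1):
        sends = [(r, (r + 1 - s) % n) for r in range(n)]
        payloads = [[data[r][i] for i in chunk(c)] for r, c in sends]
        for (r, c), values in zip(sends, payloads):
            for i, v in zip(chunk(c), values):
                data[(r + 1) % n][i] = v
    return data
```

Each worker sends roughly 2(n-1)/n of its buffer regardless of n, which makes the ring bandwidth-efficient. However, it needs 2(n-1) sequential steps, so per-step latency and synchronization cost start to dominate as n grows. This is one reason the paragraph above treats low-latency synchronization as a scaling metric.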

Machine Intelligence requires a completely new approach to scale-out fabrics. The IPU’s innovative use of the Bulk Synchronous Parallel (BSP) computation model, well known in the HPC and parallel computing worlds, provides a key foundation for building Machine Intelligence Rackscale systems with thousands of IPUs. Other key elements are fabric-based acceleration of Machine Intelligence workloads and the ability to pool IPUs elastically, on demand, into secure domains.
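BSP divides a program into supersteps. In each superstep, every worker computes on local data, all workers meet at a global barrier, and then they exchange data before the next superstep. The toy sketch below mimics that structure with Python's `threading.Barrier`. The worker count, the doubling "compute" step, the ring-neighbour exchange and the function name `bsp_run` are all hypothetical placeholders. The code illustrates the model only and says nothing about how the IPU implements BSP in hardware.

```python
import threading


def bsp_run(num_workers: int, supersteps: int) -> list[int]:
    """Run a toy BSP program and return each worker's final value.

    Per superstep: local compute -> barrier -> exchange -> barrier -> merge.
    """
    values = list(range(num_workers))   # each worker's local state
    inbox = [0] * num_workers           # one message slot per worker
    barrier = threading.Barrier(num_workers)

    def worker(rank: int) -> None:
        for _ in range(supersteps):
            # Compute phase: touch only this worker's own state.
            values[rank] *= 2
            # Sync: nobody exchanges until every worker has finished computing.
            barrier.wait()
            # Exchange phase: send the local value to the right-hand neighbour.
            inbox[(rank + 1) % num_workers] = values[rank]
            # Sync: all messages are delivered before anyone reads its inbox.
            barrier.wait()
            values[rank] += inbox[rank]

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return values


print(bsp_run(4, 2))
```

Because the barriers separate the compute and exchange phases, the result is deterministic regardless of thread scheduling. With four workers and two supersteps, the call prints `[32, 16, 16, 32]`. This determinism is the property that makes BSP attractive when scaling to many processors.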

The Magnum switch was an engineering tour de force that drove technology innovation for over a decade. We took Magnum from architecture to operation in less than two years. Earlier this year the team behind Magnum joined Graphcore, and together we have set out to create the world’s most scalable and highest-performance Machine Intelligence solution.

We are building out our team at our new development center in Oslo, Norway, where we are developing the future of Machine Intelligence networking and Rackscale systems. If you are interested in joining us, please check out our job openings in Oslo at graphcore.ai/careers.