POPLAR GRAPH FRAMEWORK SOFTWARE

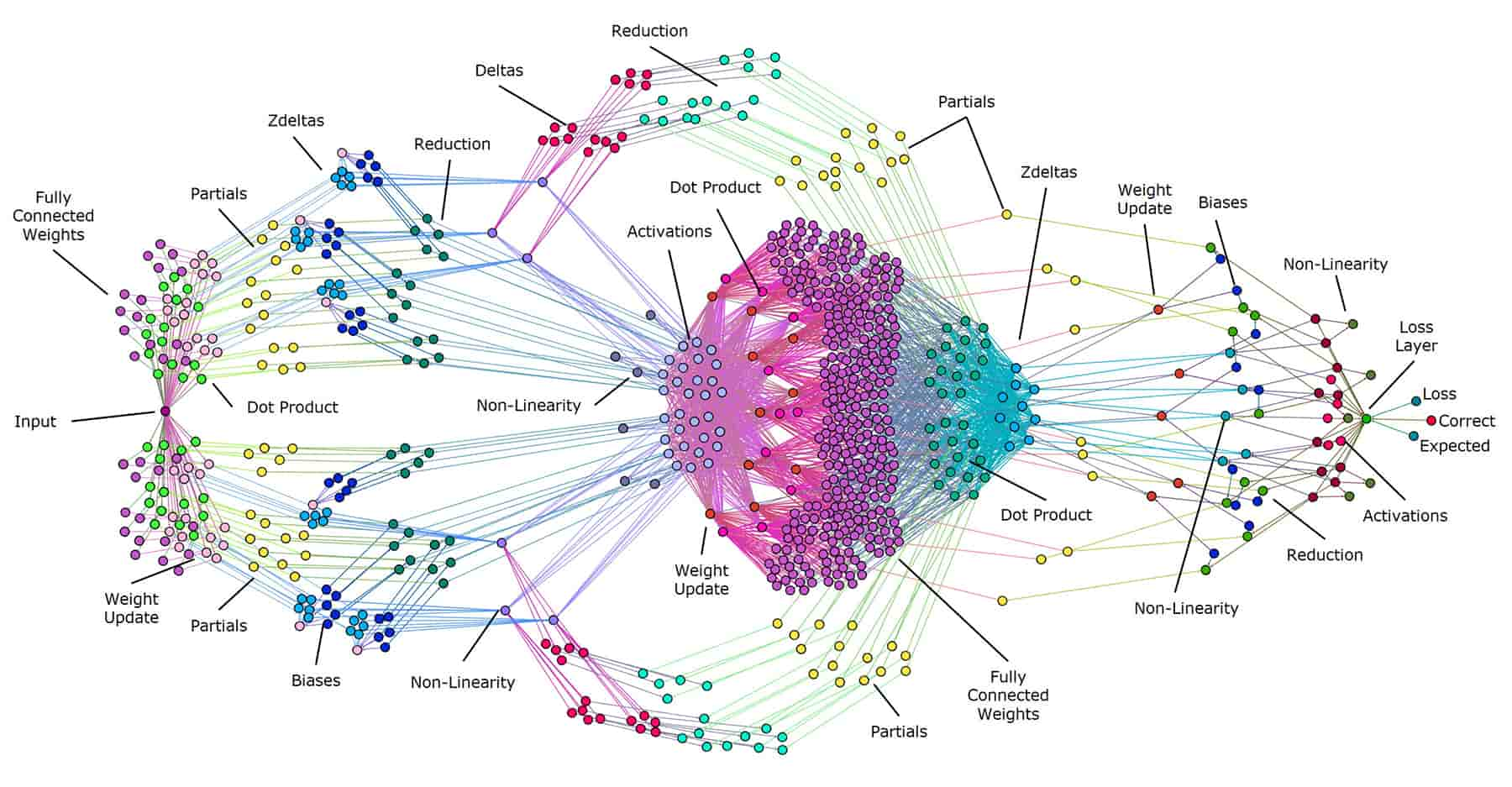

Co-designed with the IPU from the ground up for machine intelligence

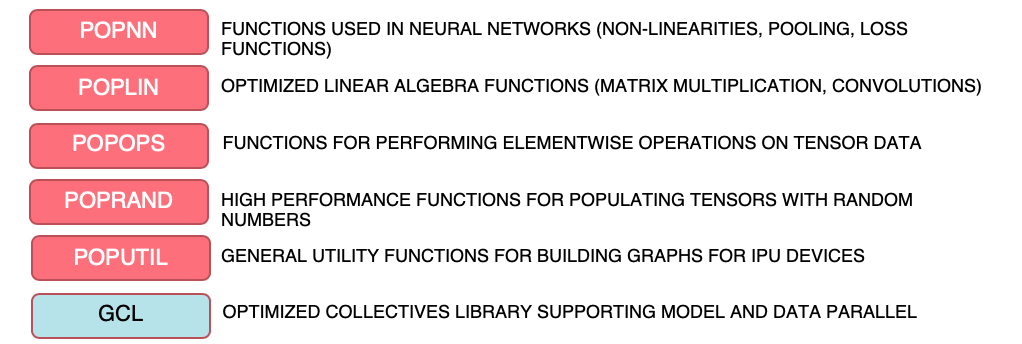

At Graphcore, we put power in the hands of AI developers so they can innovate. The Poplar Graph Libraries (PopLibs) are fully open source and available on GitHub, so the entire developer community can contribute to and enhance them.

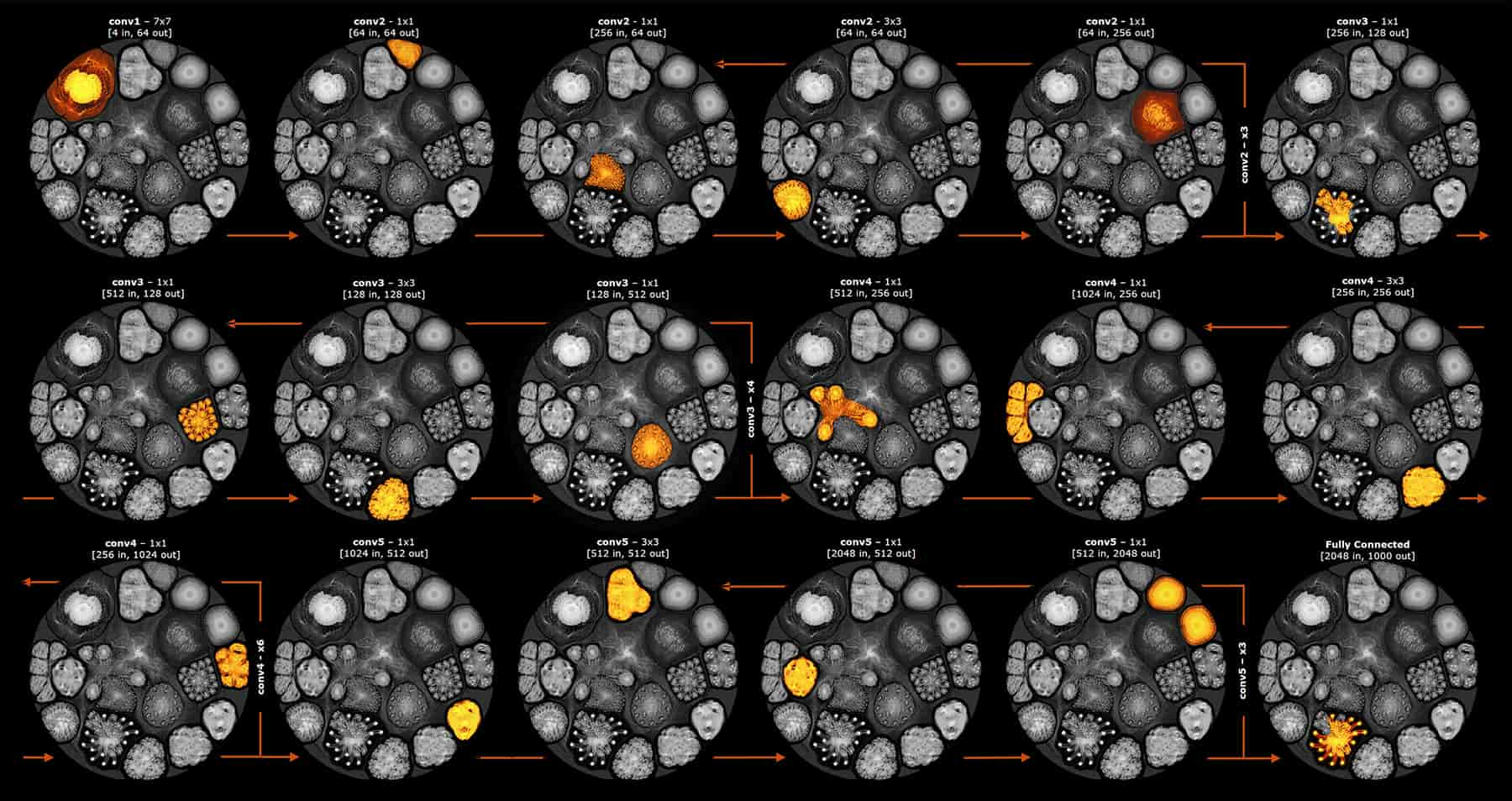

The PopVision™ family of analysis tools helps developers gain a deep understanding of how their applications perform on and utilise the IPU, and of their code's inner workings, through a user-friendly graphical interface.

Pre-built containers on Docker Hub, with images for the Poplar SDK, tools and frameworks, get you up and running fast.

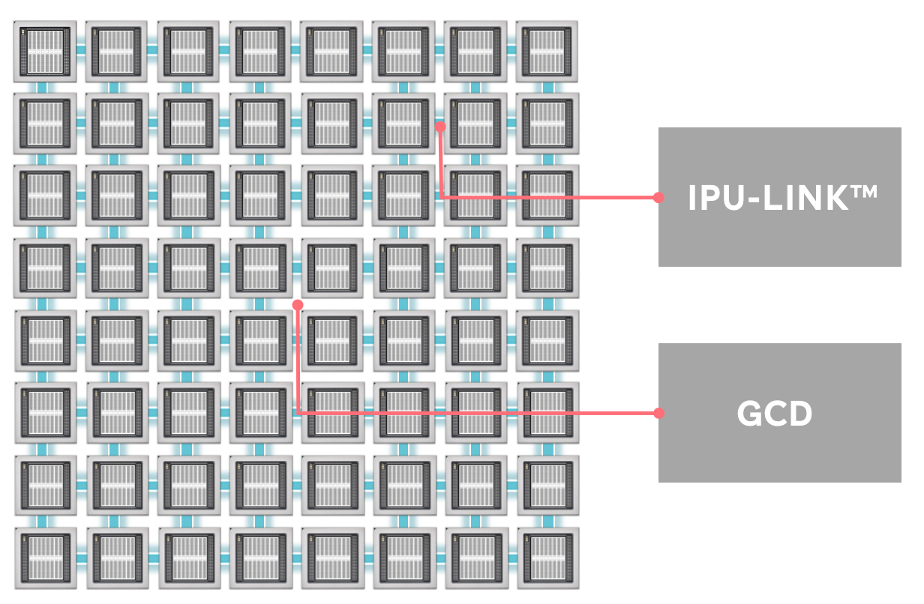

Ready for production, with Microsoft Azure deployment, Kubernetes orchestration, and Hyper-V virtualisation and security.