Today Graphcore unveiled the world’s first 3D Wafer-on-Wafer processor, the Bow IPU, which is at the heart of our next-generation Bow Pod AI computer systems. Compared with their predecessors, Bow Pods deliver up to 40% higher performance and up to 16% better power efficiency for real-world AI applications, at the same price and with no changes to existing software.

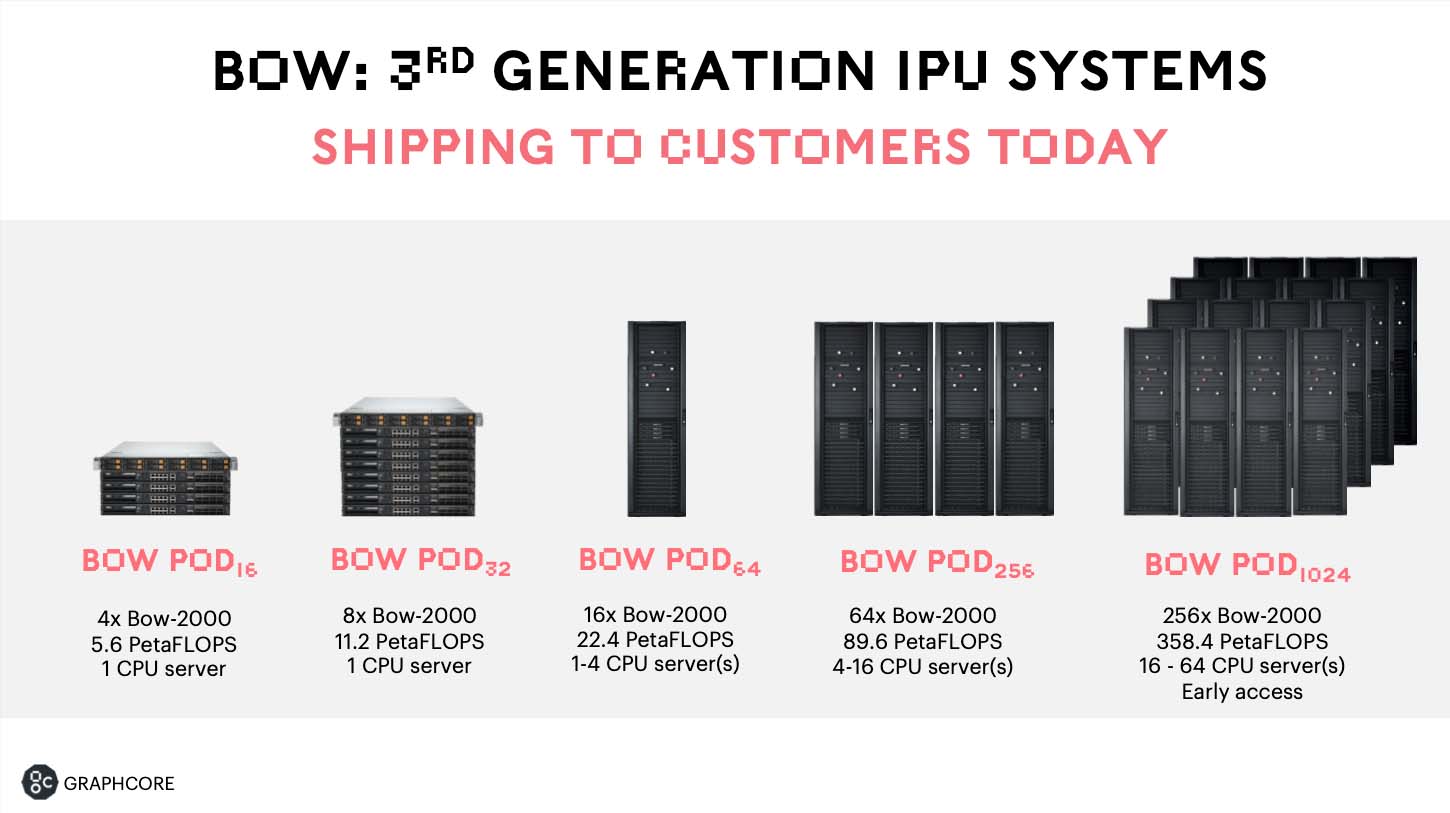

The flagship Bow Pod256 delivers more than 89 PetaFLOPS of AI compute, while the superscale Bow Pod1024 packs 350 PetaFLOPS. This allows machine learning engineers to keep pace with the exponential growth in AI model size and to make new breakthroughs in machine intelligence.

Customer success

Our new Bow Pod systems are available now and have begun shipping worldwide.

One of the first customers to take advantage of Bow’s improved performance and efficiency will be the U.S. Department of Energy’s (DOE) Pacific Northwest National Laboratory (PNNL), for applications including cybersecurity and computational chemistry.

“At Pacific Northwest National Laboratory, we are pushing the boundaries of machine learning and graph neural networks to tackle scientific problems that have been intractable with existing technology,” said Sutanay Choudhury, deputy director of PNNL's Computational and Theoretical Chemistry Institute.

“For instance, we are pursuing applications in computational chemistry and cybersecurity applications. This year, Graphcore systems have allowed us to significantly reduce both training and inference times from days to hours for these applications. This speed up shows promise in helping us incorporate the tools of machine learning into our research mission in meaningful ways. We look forward to extending our collaboration with this newest generation technology.”

US cloud service provider Cirrascale is making Bow Pod systems available to customers today as part of its Graphcloud IPU bare metal service, while European cloud service provider G-Core Labs has announced that it will launch Bow IPU cloud instances in Q2 2022.

Real results

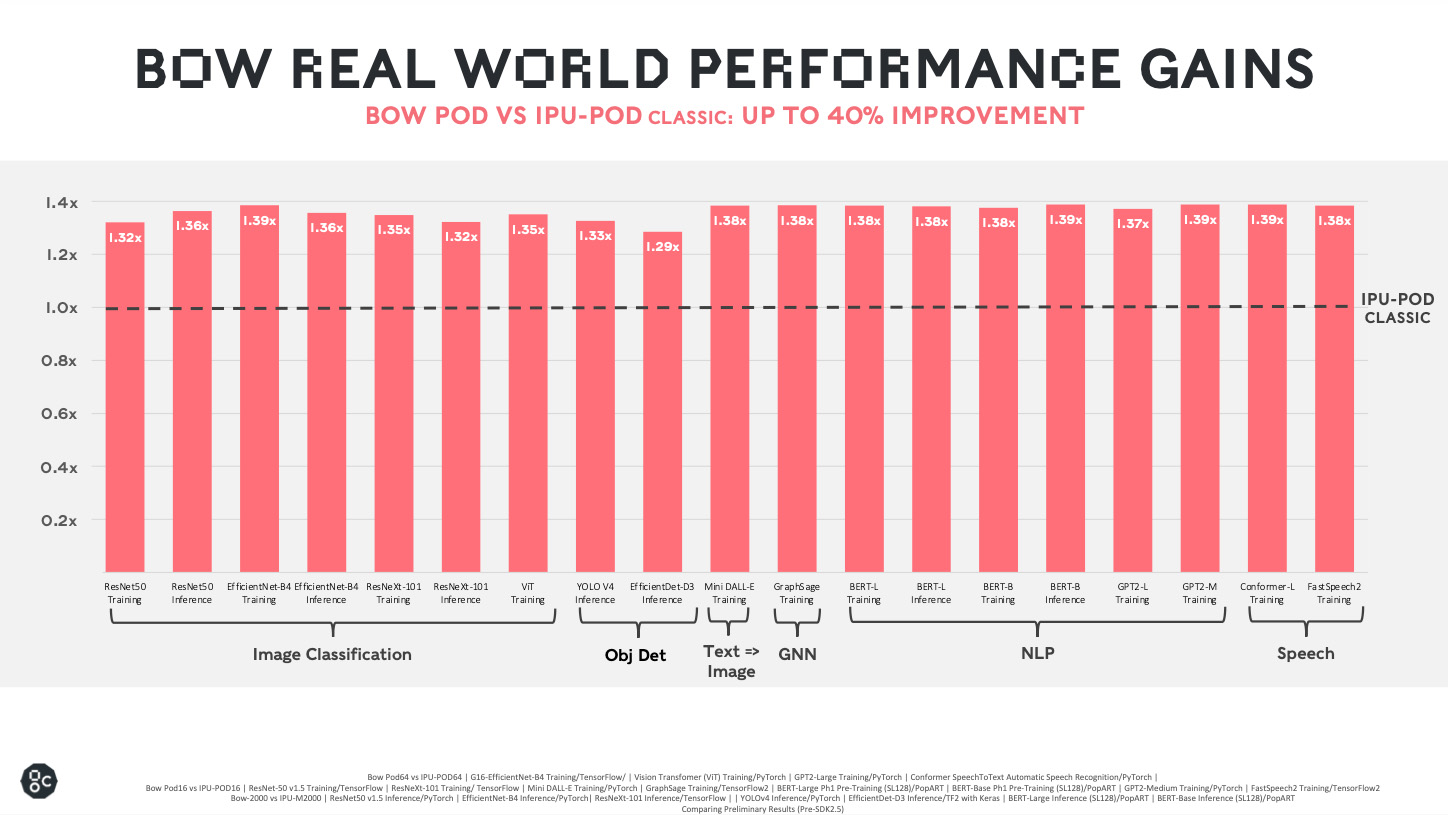

Bow Pods are all about delivering real-world performance, at scale, for a wide range of AI applications: from GPT and BERT for natural language processing, to EfficientNet and ResNet for computer vision, to graph neural networks and many more.

With Bow Pod systems, customers are seeing up to 40% higher performance across a wide range of AI applications, within the same peak power envelope as our Mk2 IPU-POD systems.

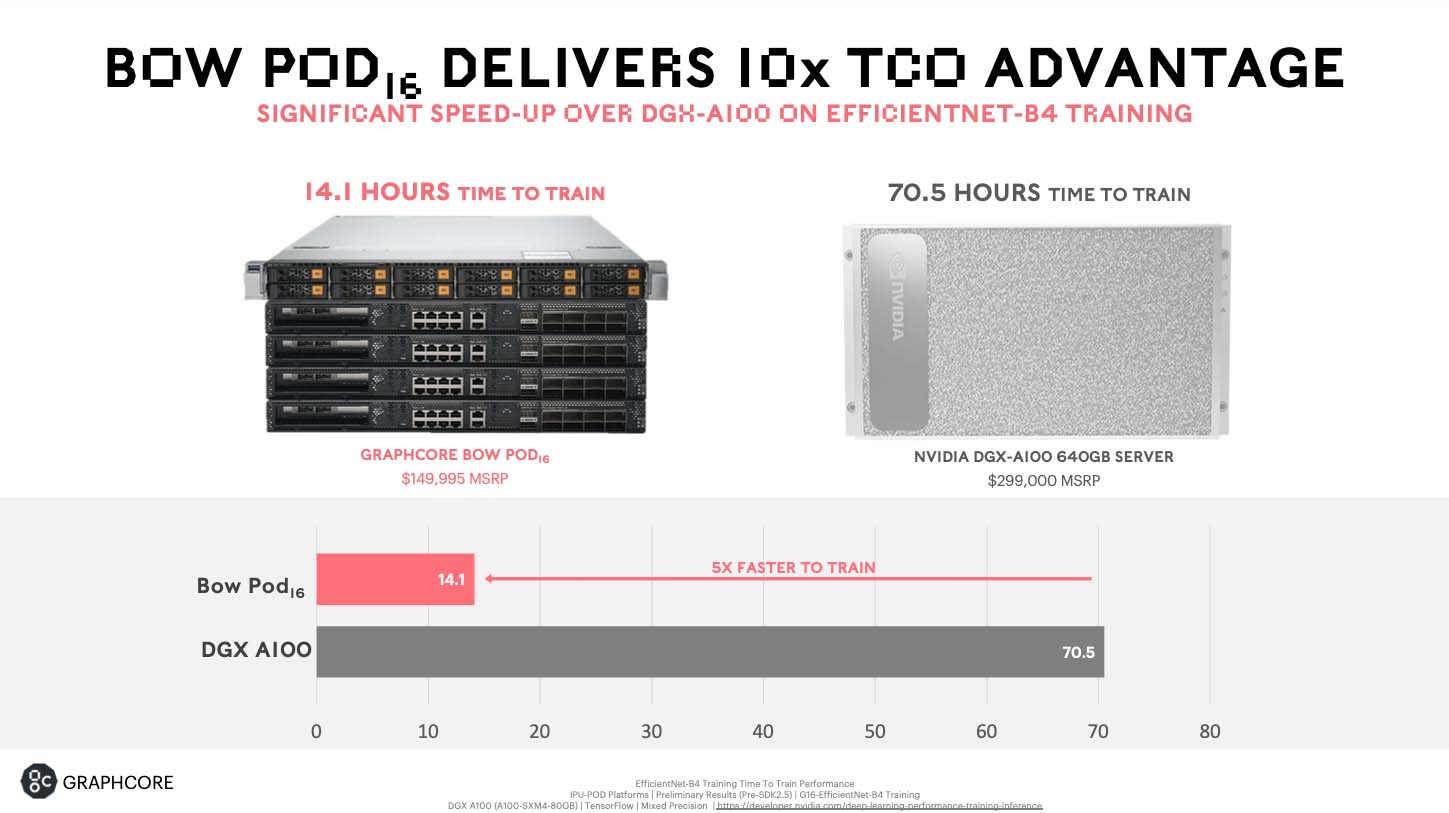

For the state-of-the-art computer vision model EfficientNet, Bow Pod16 delivers over 5x the performance of a comparable Nvidia DGX A100 system at half the price, resulting in up to a 10x TCO advantage.
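The 10x figure follows from combining the two ratios for EfficientNet: relative performance per unit of price is the performance ratio divided by the price ratio. A minimal Python sketch of that arithmetic, assuming "TCO advantage" here means performance per unit of purchase price (a full TCO analysis would also include power, space and operating costs, which this sketch ignores); the function name is purely illustrative:

```python
def perf_per_price_advantage(perf_ratio: float, price_ratio: float) -> float:
    """Relative performance per unit price of system A versus system B.

    perf_ratio:  performance of A divided by performance of B
    price_ratio: price of A divided by price of B
    """
    if price_ratio <= 0:
        raise ValueError("price_ratio must be positive")
    return perf_ratio / price_ratio


# EfficientNet comparison: 5x the performance at half the price.
# The text says "over 5x", so 10x is the value at exactly 5x.
print(perf_per_price_advantage(perf_ratio=5.0, price_ratio=0.5))  # 10.0
```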

Improved power efficiency

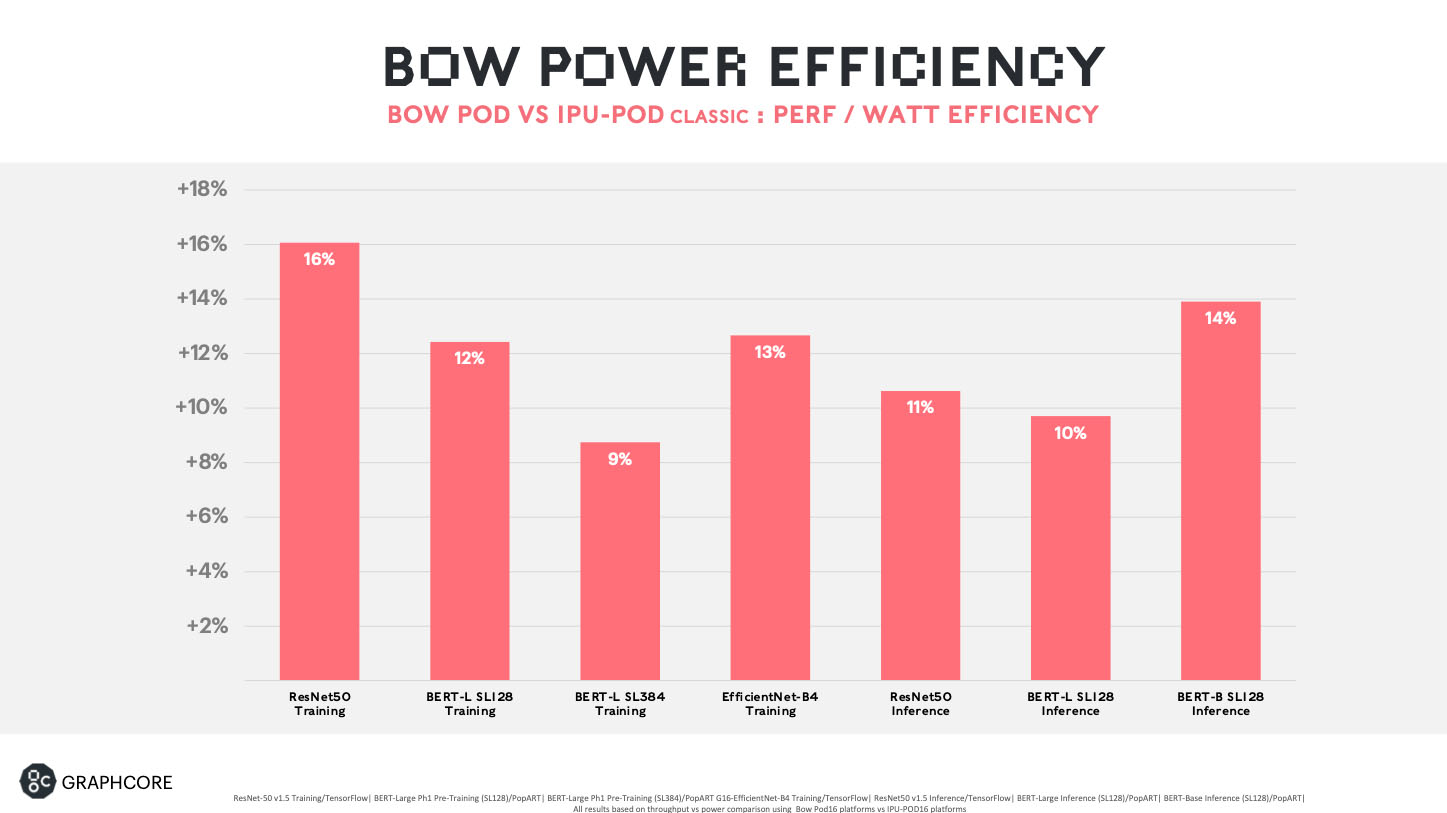

In addition to up to 40% performance gains, Bow Pod systems are also significantly more power efficient than their predecessors.

Tested across a range of real-world applications, Bow Pods show up to 16% better performance per watt.

The WoW factor

Our Bow Pod systems deliver this giant performance boost and improved power efficiency thanks to a world-first 3D semiconductor technology in the new Bow IPU processor.

We are proud to be the first customer to market with TSMC’s Wafer-on-Wafer 3D technology, which we have developed in close partnership with them. Wafer-on-Wafer has the potential to deliver much higher bandwidth between silicon die and is being used to optimise power efficiency and improve power delivery to our Colossus architecture at the wafer level.

With Wafer-on-Wafer in the Bow IPU, two wafers are bonded together to create a new 3D die. One wafer is for AI processing: it is architecturally compatible with the GC200 IPU processor, with 1,472 independent IPU-Core tiles capable of running more than 8,800 threads and 900MB of In-Processor Memory. The second wafer carries the power delivery die.

By adding deep trench capacitors in the power delivery die, right next to the processing cores and memory, we are able to deliver power much more efficiently. This enables a 40% increase in performance, so each processor now delivers 350 TeraFLOPS of AI compute.
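As a quick consistency check, the 40% uplift and the 350 TeraFLOPS figure imply a baseline of 250 TeraFLOPS per processor, which matches the previous-generation GC200 IPU's published peak (that baseline comes from Graphcore's earlier Mk2 specifications, not from this announcement):

```latex
\frac{350\ \text{TFLOPS}}{1 + 0.40} = 250\ \text{TFLOPS}
```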

Working closely with TSMC, we have fully qualified the entire technology, including several groundbreaking technologies in Back Side Through Silicon Via (BTSV) and Wafer-on-Wafer (WoW) hybrid bonding.

“TSMC has worked closely with Graphcore as a leading customer for our breakthrough SoIC-WoW (Wafer-on-Wafer) solution as their pioneering designs in cutting-edge parallel processing architectures make them an ideal match for our technology,” said Paul de Bot, general manager of TSMC Europe.

“Graphcore has fully exploited the ability to add power delivery directly connected via our WoW technology to achieve a major step up in performance, and we look forward to working with them on further evolutions of this technology.”

Trusted technology

Our customers are leaders in their fields and need computer systems that combine performance, efficiency and reliability. Anyone already using IPUs will find the transition to Bow Pod systems seamless.

Our powerful, easy-to-use Poplar software stack and ever-expanding library of IPU-optimized models automatically unlock the full capabilities of Bow Pod systems.

The new Bow-2000 IPU Machine, the building block of every Bow Pod system, is based on the same robust system architecture as our second-generation IPU-M2000 machine, but now with four powerful Bow IPU processors delivering 1.4 PetaFLOPS of AI compute.

The Bow-2000 is fully backward compatible with existing IPU-POD systems: its high-speed, low-latency IPU fabric and flexible 1U form factor all stay the same.

Combined with a choice of host servers from leading brands including Dell, Atos, Supermicro, Inspur and Lenovo, the Bow-2000 is the fundamental building block of our full Bow Pod family: from Bow Pod16 (four Bow-2000 machines and one host server) and Bow Pod32 (eight Bow-2000 machines and one host server), through Bow Pod64, to our larger flagship systems, Bow Pod256 and Bow Pod1024.
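Because every Bow Pod is built from Bow-2000 machines, peak AI compute scales linearly with the number of machines. The Python sketch below reproduces the quoted figures from 350 TeraFLOPS per Bow IPU and four IPUs per Bow-2000. It assumes the number in each Pod name is its IPU count (consistent with Bow Pod16 using four Bow-2000 machines and Bow Pod32 using eight), so the machine counts for Bow Pod64, Bow Pod256 and Bow Pod1024 are inferred rather than stated. The names in the code are illustrative, and the results are peak figures, not measured application throughput.

```python
# Peak figures quoted in the announcement.
BOW_IPU_TFLOPS = 350      # peak AI compute per Bow IPU, in TeraFLOPS
IPUS_PER_BOW_2000 = 4     # Bow IPUs in each Bow-2000 IPU Machine

# Assumption: the number in each Pod name is its IPU count, so the
# number of Bow-2000 machines is that number divided by four.
SYSTEMS = {
    "Bow-2000": 1,
    "Bow Pod16": 4,
    "Bow Pod32": 8,
    "Bow Pod64": 16,
    "Bow Pod256": 64,
    "Bow Pod1024": 256,
}


def peak_petaflops(num_bow_2000: int) -> float:
    """Peak AI compute, in PetaFLOPS, of a system with num_bow_2000 machines."""
    ipus = num_bow_2000 * IPUS_PER_BOW_2000
    return ipus * BOW_IPU_TFLOPS / 1000  # 1 PetaFLOPS = 1000 TeraFLOPS


for name, machines in SYSTEMS.items():
    print(f"{name}: {peak_petaflops(machines):.1f} PetaFLOPS")
```

This gives 1.4 PetaFLOPS per Bow-2000, 89.6 PetaFLOPS for Bow Pod256 (the "more than 89" quoted earlier) and 358.4 PetaFLOPS for Bow Pod1024, which the announcement rounds to 350.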

Availability

Bow Pod systems are available immediately from Graphcore’s sales partners around the world.

Here is what some of our partners had to say about the arrival of Bow Pods:

Cirrascale Cloud Services

“Cirrascale’s Graphcloud is giving many AI innovators their first experience of what Graphcore’s IPU can do, as well as providing a flexible scale-up platform for those who need to expand their compute capability. The addition of Bow Pods to Graphcloud takes AI computing in the cloud to new levels of performance – whether that’s used to accelerate massive models across large Pod configurations, or to put more power in the hands of individual users in multi-tenancy setups.”

PJ Go, CEO, Cirrascale Cloud Services

G-Core Labs

“For G-Core Labs customers, performance means progress: Graphcore IPUs let them develop and deploy their AI models faster and reach results that benefit their business sooner. The increase in compute power delivered by Bow Pods is going to supercharge innovation in artificial intelligence, while their easy availability on G-Core Labs cloud ensures that the opportunity is accessible to all.”

Andre Reitenbach, CEO, G-Core Labs

Atos

“Graphcore’s Bow Pod systems set a new standard in artificial intelligence compute that will allow Atos customers to push their AI innovation further and achieve results faster than ever before. As models grow in size and complexity, Graphcore and Atos work together to deliver Exascale systems. At the same time, Graphcore’s relentless focus on computational efficiency ensures that users are getting the best possible return on their AI investment.”

Agnès Boudot, Head of HPC & Quantum, Atos