Today, we are excited to announce that VMware’s Project Radium will support Graphcore IPUs as part of its hardware disaggregation initiative. This will enable pooling and sharing of IPU resources over the primary datacenter network in virtualized, multi-tenant environments, without pushing complexity onto the user or the management software. The network-disaggregated, scale-out architecture of IPU-PODs, coupled with the flexible resource management features in Project Radium, will unlock new frontiers in training very large models at scale and in deploying models to reliable production environments for AI-based services.

VMware is the leading provider of enterprise virtualization software and of tools for application modernization in the cloud. Through products like vSphere, Tanzu and NSX, VMware offers customers a diverse set of capabilities for building and modernizing their applications. Project Radium is the latest addition to this impressive lineup.

A closer look at the IPU

The IPU is a new type of parallel processor designed specifically to address the computational requirements of modern AI models. It offers a high degree of fine-grained parallelism at the hardware level, supports single- and half-precision floating-point arithmetic, and is well suited to sparse compute without depending on any particular sparsity pattern in the underlying data. The processor handles both training and inference of deep neural networks, the workhorse of contemporary ML workloads.

Instead of adopting a conventional SIMD/SIMT architecture like GPUs, the IPU uses a MIMD architecture with ultra-high-bandwidth on-chip memory and low-latency, high-bandwidth interconnects for efficient intra- and inter-chip communication. This makes IPUs an ideal target for parallelizing machine learning models at datacenter scale.

IPU-PODs and the power of disaggregation

Scaling out from one to thousands of IPUs is seamless, thanks to the IPU-POD architecture. IPU-PODs are network-disaggregated clusters of IPUs that scale elastically with workload needs, independently of the CPU resources they connect to over the network. This allows users to dial the CPU:IPU ratio up or down, in hyperscale or on-prem enterprise environments, through simple resource binding constructs. The IPU-POD architecture also delivers near bare-metal performance in virtualized environments.

Because CPU and IPU resources scale independently, users can meet workload-specific compute demands in a cost-optimized manner. For example, ML models for natural language processing are generally not CPU intensive, whereas computer vision models can be, due to work such as image pre-processing or augmentation. This flexibility is especially useful in cloud environments, where CPU resources can easily be spun up and down, letting customers reap the benefits of economies of scale.
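To make the CPU:IPU ratio argument concrete, here is a minimal Python sketch comparing a disaggregated pool with a hypothetical non-disaggregated server that bundles one CPU host with a fixed number of accelerators. The `Workload` type, the `ipus_per_host` figures and the 1:4 "fixed box" are illustrative assumptions, not Graphcore or VMware sizing guidance; real ratios depend on the model, the data pipeline and the host hardware.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    ipus: int           # IPUs the job needs
    ipus_per_host: int  # hypothetical: IPUs one CPU host can keep fed for this workload


def disaggregated(w: Workload) -> tuple[int, int]:
    """(hosts, IPUs) to provision when CPU and IPU resources scale independently."""
    hosts = math.ceil(w.ipus / w.ipus_per_host)
    return hosts, w.ipus


def fixed_box(w: Workload, box_ipus_per_host: int) -> tuple[int, int]:
    """(hosts, IPUs) to provision when every server bundles one host with a fixed
    number of accelerators (a hypothetical non-disaggregated design).

    Enough boxes are needed to cover both the IPU demand and the CPU demand,
    so whichever resource is over-represented in the box sits partly idle.
    """
    boxes_for_ipus = math.ceil(w.ipus / box_ipus_per_host)
    boxes_for_cpu = math.ceil(w.ipus / w.ipus_per_host)
    boxes = max(boxes_for_ipus, boxes_for_cpu)
    return boxes, boxes * box_ipus_per_host


if __name__ == "__main__":
    # Illustrative ratios only: a light-CPU NLP job and a CPU-heavy vision job.
    jobs = [Workload("nlp", ipus=64, ipus_per_host=16),
            Workload("vision", ipus=64, ipus_per_host=2)]
    for job in jobs:
        print(job.name, "disaggregated:", disaggregated(job),
              "fixed 1:4 box:", fixed_box(job, box_ipus_per_host=4))
```

With these made-up ratios, the fixed box over-provisions hosts for the NLP job (16 instead of 4) and IPUs for the vision job (128 instead of 64), while the disaggregated pool matches each job's demand exactly.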

Software considerations

Graphcore’s Poplar SDK has been co-designed with the processor since Graphcore’s inception. It supports standard machine learning frameworks including PyTorch and TensorFlow, as well as container, orchestration and deployment platform technologies such as Docker and Kubernetes.

Besides support for core machine learning software frameworks, integration with virtualization, orchestration and scheduling software is crucial for customers to easily use IPUs at scale in enterprise environments. Multi-tenancy, isolation and security are key tenets that solution providers need to adhere to while operating in hyperscale environments. Resource management components in Graphcore’s software stack facilitate easy integration with a variety of cloud provisioning and management stacks such as the one offered by VMware. As a result, operating in public cloud, hybrid cloud or on-prem infrastructure environments becomes frictionless.

About Project Radium

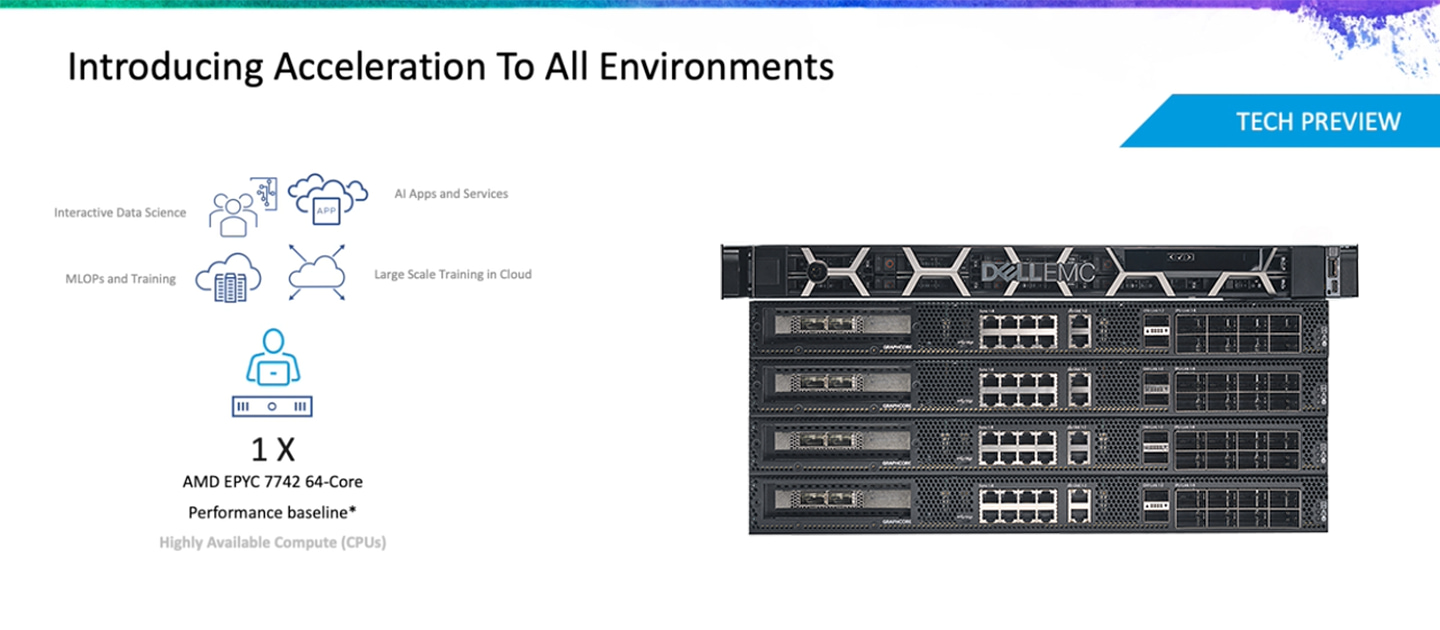

Taking a big step towards disaggregated computation optimized for AI, Project Radium enables remoting, pooling and sharing of resources across a wide range of hardware architectures, including Graphcore IPUs and IPU-PODs.

Device virtualization and remoting capabilities are delivered across a multitude of high-performance AI accelerators without the need for explicit code changes or user intervention. Developers can fully concentrate on their models rather than hardware-specific compilers, drivers or software optimizations.

By attaching dynamically to hardware like IPU-PODs over a standard network, Project Radium lets users leverage high-performance architectures such as the IPU to accelerate more demanding use cases at scale.

Enterprise AI made easy

Together, VMware and Graphcore are bringing enterprise AI features within easy reach. Project Radium lets users leverage the unique benefits of the network-disaggregated architecture of IPU-PODs while addressing the multi-tenancy, isolation and security needs of the most demanding enterprise environments. Whether in the public cloud, a hybrid cloud or on-prem, Project Radium and Graphcore IPUs will be there to offer the most cost-effective, enterprise-grade AI solutions for your applications.