Bow Pod systems let you break through barriers and make new advances in machine intelligence with real business impact. Get ready for production with Bow Pod64 and take advantage of a new approach to operationalising your AI projects.

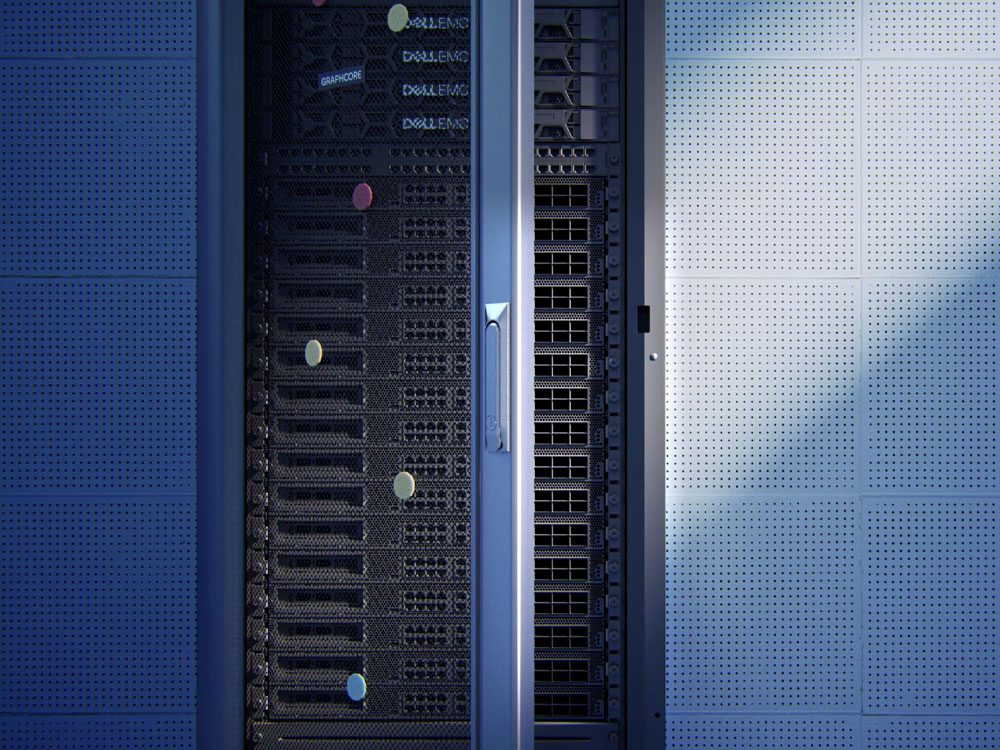

Bow Pod64 delivers the flexibility to make full use of the available space and power in your datacenter, however it is provisioned. Its 22.4 petaFLOPS of AI compute serves both training and inference, so you can develop and deploy on the same powerful system.

Performance

Bow Pod64 delivers world-class results, whether you want to explore innovative models and new possibilities or need faster time to train, higher throughput, or better performance per TCO dollar.

| Specification | Bow Pod64 |
|---|---|
| Processors | 64x Bow IPUs |
| 1U blade units | 16x Bow-2000 machines |
| Memory | 57.6GB In-Processor-Memory™; up to 4.1TB Streaming Memory™ |
| Performance | 22.4 petaFLOPS FP16.16; 5.6 petaFLOPS FP32 |
| IPU Cores | 94,208 |
| Threads | 565,248 |
| Host-Link | 100 GE RoCEv2 |
| Software | Poplar; TensorFlow, PyTorch, PyTorch Lightning, Keras, PaddlePaddle, Hugging Face, ONNX, HALO; OpenBMC, Redfish DMTF, IPMI over LAN, Prometheus, and Grafana; Slurm, Kubernetes; OpenStack, VMware ESG |
| System Weight | 450kg + host servers and switches |
| System Dimensions | 16U + host servers and switches |
| Host Server | Selection of approved host servers from Graphcore partners |
| Storage | Selection of approved systems from Graphcore partners |
| Thermal | Air-cooled |
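The pod-level totals in the table can be divided down to per-machine and per-IPU figures as a quick consistency check. The Python sketch below uses only the numbers listed in the table (64 IPUs across 16 Bow-2000 machines). The dictionary keys and the `per_unit_breakdown` function are illustrative names chosen here, not part of any Graphcore API. Because the Streaming Memory figure is an "up to" value rounded to 4.1TB, the per-machine result it produces is approximate.

```python
# Aggregate Bow Pod64 figures, copied from the specification table.
# Key names are illustrative only, not a Graphcore API.
POD64 = {
    "ipus": 64,
    "bow_2000_machines": 16,
    "ipu_cores": 94_208,
    "threads": 565_248,
    "in_processor_memory_gb": 57.6,
    "streaming_memory_tb": 4.1,  # "up to" value, rounded in the table
    "fp16_pflops": 22.4,
    "fp32_pflops": 5.6,
}


def per_unit_breakdown(spec: dict) -> dict:
    """Divide pod-level totals into per-machine and per-IPU figures."""
    ipus = spec["ipus"]
    machines = spec["bow_2000_machines"]
    return {
        "ipus_per_machine": ipus // machines,
        "cores_per_ipu": spec["ipu_cores"] // ipus,
        "threads_per_core": spec["threads"] // spec["ipu_cores"],
        # GB -> MB using decimal units, rounded to a whole number of MB
        "in_processor_memory_mb_per_ipu": round(
            spec["in_processor_memory_gb"] * 1000 / ipus
        ),
        # petaFLOPS -> teraFLOPS per IPU
        "fp16_tflops_per_ipu": spec["fp16_pflops"] * 1000 / ipus,
        "fp32_tflops_per_ipu": spec["fp32_pflops"] * 1000 / ipus,
        "fp16_to_fp32_ratio": spec["fp16_pflops"] / spec["fp32_pflops"],
        # Approximate, since 4.1TB is itself a rounded figure
        "streaming_memory_gb_per_machine": spec["streaming_memory_tb"] * 1000 / machines,
    }


if __name__ == "__main__":
    for name, value in per_unit_breakdown(POD64).items():
        print(f"{name}: {value:g}")
```

Run as a script, it reports 4 IPUs per Bow-2000, 1,472 cores per IPU, 6 threads per core, 900MB of In-Processor-Memory and 350 teraFLOPS FP16.16 (87.5 teraFLOPS FP32) per IPU, a 4:1 FP16.16-to-FP32 ratio, and roughly 256GB of Streaming Memory per machine.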