Cambrian AI Research: Graphcore’s Customer-Driven AI Software Stack

Evolving the Poplar software stack with an open source development community

A new white paper from Cambrian AI Research examines the growing momentum of the Poplar software stack and ecosystem, detailing how our customer-centric focus is driving software enhancements, supporting developers and benefitting from open-source contributions from the wider AI ecosystem.

“Developers require fast, scalable accelerators to handle the massive computational loads of larger models. But AI developers also need a robust development environment that meets them in their AI development journey,” writes analyst Karl Freund.

Cambrian AI Research: The Data Center Architecture for Graphcore Computing

Designed for large-scale parallel workloads

This research examines the Graphcore data center architecture that enables highly scalable parallel processing for Artificial Intelligence (AI) and High-Performance Computing (HPC).

The architecture encompasses efficient low-latency communications between Intelligence Processing Units (IPUs) within a node, within a rack, and across a data center with hundreds, and in the future thousands, of accelerators to handle exponentially increasing AI model complexity.

IPU-Fabric dynamically connects IPU accelerators with disaggregated servers and storage. Critically, this agile platform for parallel applications supports a comprehensive software stack to develop and optimize these workloads using open-source frameworks and Graphcore-developed libraries and development tools.

Moor Insights: Graphcore Software Stack - Built to Scale

Learn more about the Poplar software stack designed for Machine Intelligence

Software for new processor designs is critical to enabling application deployment and optimizing performance. Graphcore’s Intelligence Processing Unit (IPU) utilizes the expression of an algorithm as a directed graph, and the Poplar software stack translates models and algorithms into those graphs for execution.

This paper explores the benefits provided by Poplar software and discusses how these capabilities could speed development and deployment of applications that run on Graphcore IPUs.

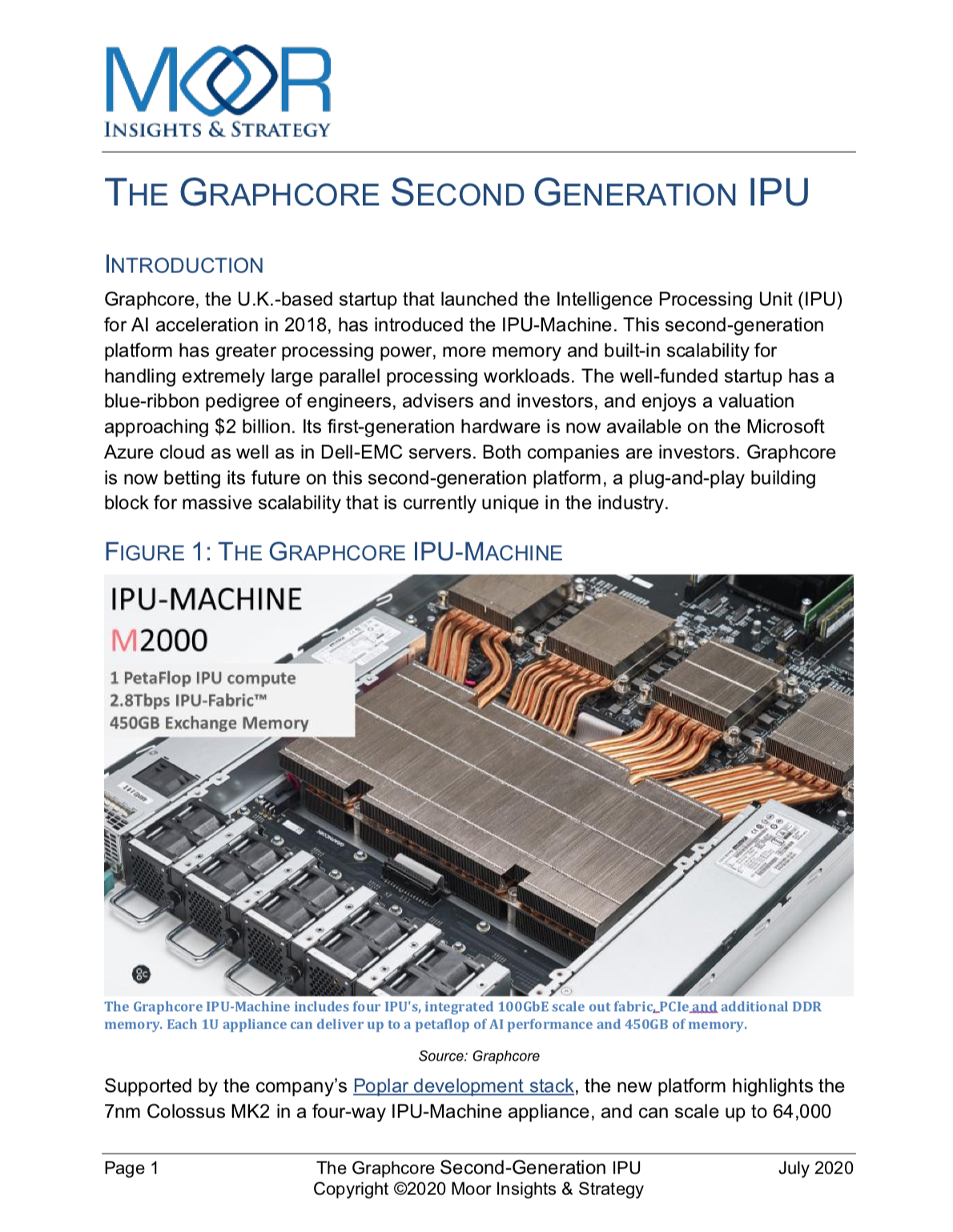

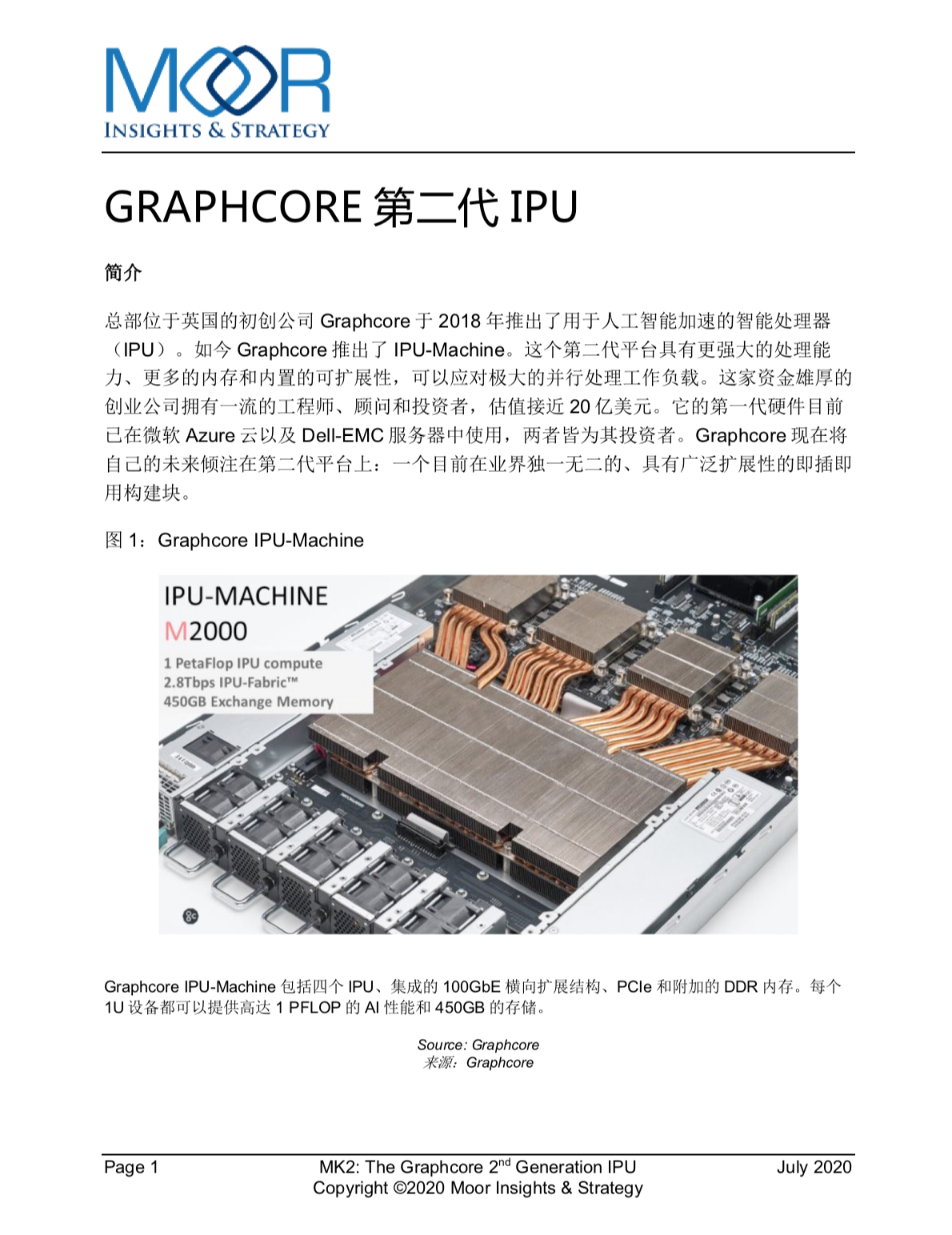

Moor Insights: MK2 - The Second Generation IPU

Learn more about Graphcore's second-generation IPU systems for AI at scale

This research paper from Moor Insights & Strategy explores Graphcore's new 7nm Colossus MK2 GC200 IPU and the IPU-M2000 and IPU-POD systems, which scale to supercomputing size for enterprise AI infrastructure.

Analyst Karl Freund describes our second-generation platform, the IPU-M2000, as having "greater processing power, more memory, and built-in scalability for handling extremely large parallel processing workloads."

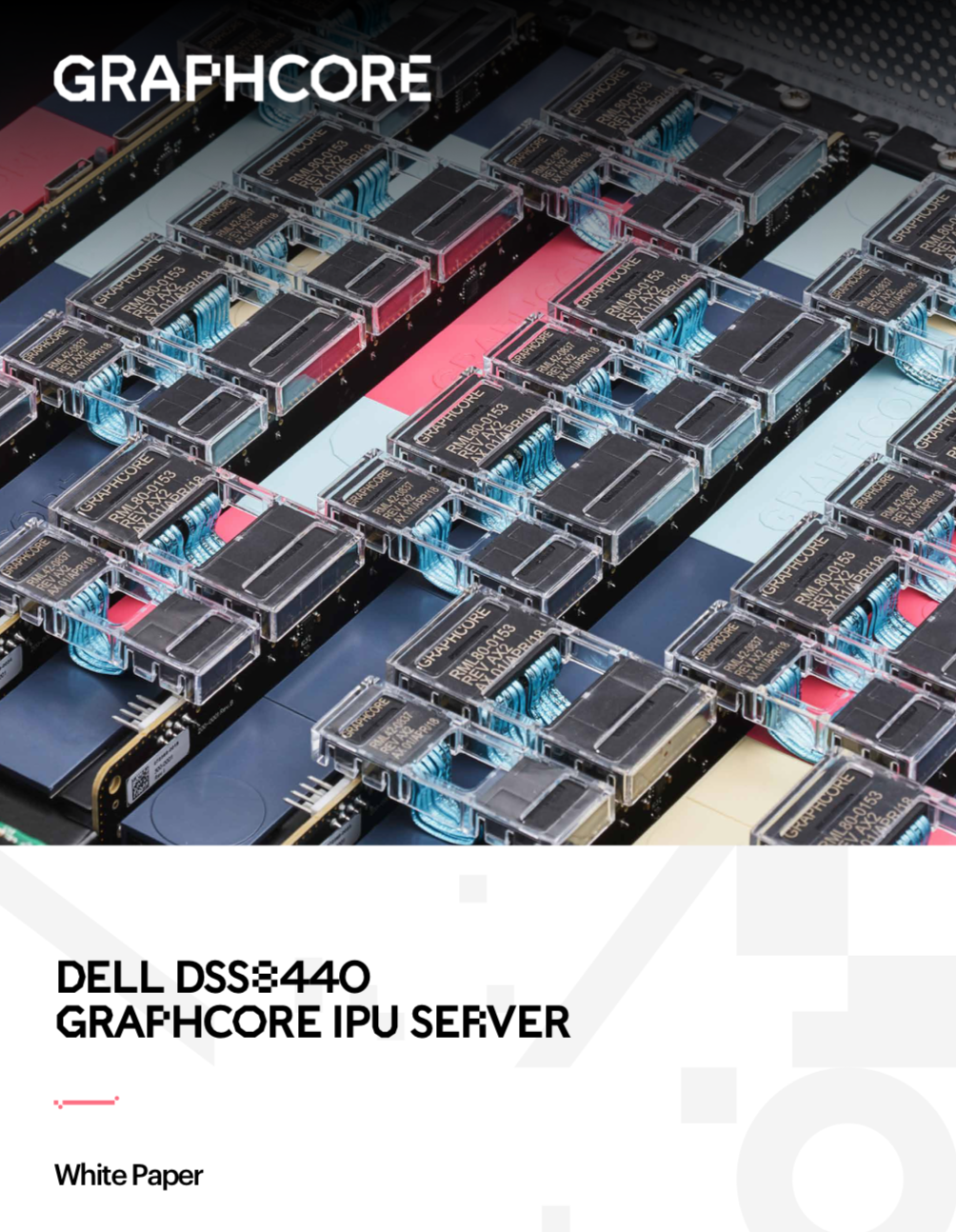

DSS8440 White Paper

Download the DSS8440 White Paper to access Machine Intelligence Insights

Today’s CPUs and GPUs are holding leading innovators back from making breakthroughs in machine intelligence. What is needed is a new type of processor that can efficiently support much more complex knowledge models for both training and inference. This is why Graphcore have created the Intelligence Processing Unit – the IPU. The IPU is built from the ground up to meet these innovators’ needs and to help them make new breakthroughs in machine intelligence.

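Moor Insights: Research Report

Learn more about Graphcore's second-generation IPU systems at scale

This research report from Moor Insights & Strategy explores Graphcore's new 7nm Colossus MK2 GC200 IPU and the IPU-M2000 and IPU-POD systems, which can scale to supercomputing size for enterprise AI infrastructure.

Analyst Karl Freund describes Graphcore's IPU-M2000, built on the second-generation IPU, as having "greater processing power, more memory, and built-in scalability for handling extremely large parallel processing workloads." He adds that the company is now betting its future on the second-generation IPU platform, and that the IPU-M2000, a plug-and-play building block that enables massive scalability, is currently unique in the industry.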

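Moor Insights: Graphcore Software Stack - Built to Scale

Learn more about the Poplar software stack, designed specifically for machine intelligence

In its white paper "Graphcore's Software Stack: Built to Scale", published on May 26, technology analyst firm Moor Insights & Strategy explores the benefits provided by Graphcore's Poplar® SDK and discusses how these capabilities speed up the development and deployment of applications that run on Graphcore IPUs.

A senior analyst at Moor Insights & Strategy states that Graphcore is the only startup the firm knows of so far that has extended its offering to include such a large suite of deployment software and infrastructure.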

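Learn more about Graphcore's second-generation IPU systems for enterprise AI

Moor Insights & Strategy Research Report

This research report from Moor Insights & Strategy covers Graphcore's new 7nm Colossus MK2 GC200 IPU and the IPU-M2000 and IPU-POD systems, which scale to supercomputing size for enterprise AI infrastructure.

Analyst Karl Freund describes Graphcore's second-generation platform, the IPU-M2000, as having "greater processing power, more memory, and built-in scalability for handling extremely large parallel processing workloads." He adds that Graphcore has now bet its future on this second-generation platform, a plug-and-play building block for large-scale scalability that is unique in the industry.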

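Moor Insights & Strategy White Paper

Authors: Karl Freund / Patrick Moorhead

Software for new processor designs is critical to application deployment and performance optimization. UK-based Graphcore, a silicon supplier for application acceleration, places significant emphasis on software. Graphcore's Intelligence Processing Unit (IPU) uses the expression of an algorithm as a directed graph, and the Poplar software stack translates models and algorithms into those graphs for execution. This software simplifies adoption of the chip for AI and parallel computing, which is key to Graphcore's success. The white paper describes the benefits that Graphcore's software provides and shows how its core capabilities shorten the time needed to develop and deploy applications that run on the IPU.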

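Dell DSS8440 Graphcore IPU Server

Learn more about machine intelligence powered by IPU technology.

Today's CPUs and GPUs are holding leading innovators back from making breakthroughs in machine intelligence.

What is needed is a new type of processor that can efficiently support much more complex knowledge models for both training and inference. This is why Graphcore developed the Intelligence Processing Unit (IPU). The IPU was built to meet these innovators' needs and to help them make new breakthroughs in machine intelligence.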