Wired ran a great article last week about ‘AI brain scans’ – images of the computational graphs generated by Graphcore’s Poplar software framework for machine learning applications, mapped to our Intelligence Processing Unit (IPU).

Our ‘Inside an AI brain’ blog gave a high-level structural overview of AlexNet with images and introduced Graphcore’s software framework, Poplar. Feedback has been positive and it’s good to be able to start sharing some of the work we have been doing. So, here’s a bit more information about Poplar and how it works.

The images you have seen previously are all generated from the internal graph structure in Poplar of a machine learning model deployed on the IPU. As we have said before, we host the whole model inside the IPU processor and Poplar is the software framework that makes this possible. Poplar itself is made up of two key components:

- The Graph Compiler

- The Graph Engine

Graphs are the paradigm that our software framework is based upon. Wikipedia describes a graph as a set of vertices (also called nodes or points) together with a set of edges (also called arcs or lines) that connect them.

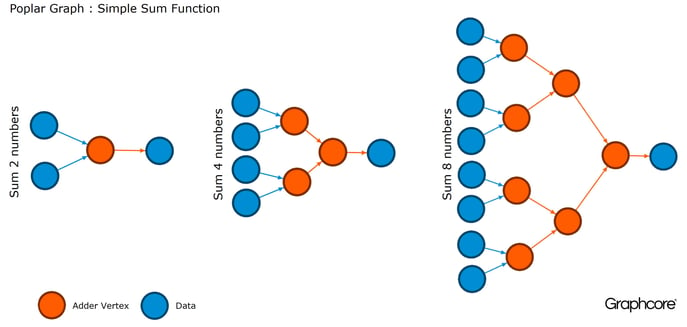

In Poplar, graphs allow you to define the process of computation, where vertices perform operations and edges describe the communication between these operations. For example, if you wanted to sum two numbers you could define a vertex with two inputs (the numbers you would like to add), some computation (the function of adding two numbers) and an output (the result). Usually the vertex operations are much more complex and are defined by small programs called codelets. The graph abstraction is attractive because it makes no assumptions about the structure of computation and it breaks the computation into component pieces, which a highly parallel processor such as the IPU can exploit for performance.
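To make the adder example concrete, here is a minimal sketch in plain Python. It is not the Poplar API: the `Vertex` class, the `run_graph` function and the edge names are hypothetical, chosen only to illustrate a vertex with two inputs, a codelet and one output.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Vertex:
    """A hypothetical vertex: a codelet plus the edges it reads and writes."""
    name: str
    codelet: Callable[..., float]  # the small program run by this vertex
    inputs: List[str]              # names of the edges feeding the vertex
    output: str                    # name of the edge carrying the result


def run_graph(vertices: List[Vertex], values: Dict[str, float]) -> Dict[str, float]:
    """Run every vertex once all of its input edges hold a value."""
    values = dict(values)
    pending = list(vertices)
    while pending:
        # Vertices that become ready in the same pass do not depend on each
        # other, so a parallel processor could execute them together.
        ready = [v for v in pending if all(e in values for e in v.inputs)]
        if not ready:
            raise ValueError("unresolved inputs: cycle or missing edge value")
        for v in ready:
            values[v.output] = v.codelet(*(values[e] for e in v.inputs))
        ready_ids = {id(v) for v in ready}
        pending = [v for v in pending if id(v) not in ready_ids]
    return values


def add(a, b):
    """The codelet: add two numbers."""
    return a + b


adder = Vertex("adder", add, ["a", "b"], "sum")
result = run_graph([adder], {"a": 2, "b": 3})
print(result["sum"])  # 5
```

The scheduler here is deliberately naive. Its only purpose is to show that the graph description alone (which vertex reads which edge) is enough to decide what can run and when.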

Poplar uses this simple abstraction to build the very large graphs you have seen as images. The programmatic generation of the graph means we can tailor it to the specific computation required to make sure we most effectively use the resources of the IPU.

This also allows us to build libraries, implemented in Poplar, which provide an external interface that is familiar. Using the simple example of the adder above, we could write a library function for adding numbers, which, if we visualized the graph from Poplar for different sized inputs, would look as follows. Notice how there is only one vertex type used repeatedly, but the connections between vertices allow more complex computation to be formed.

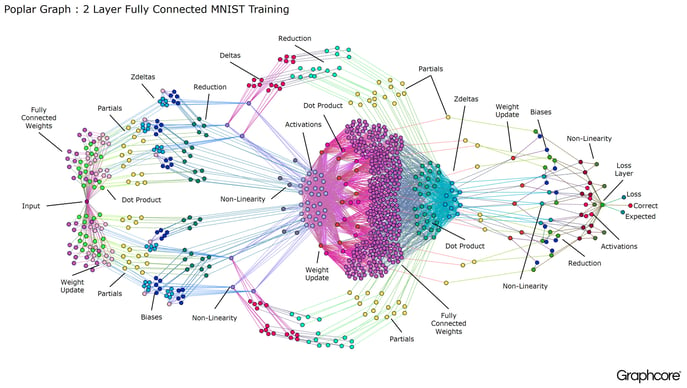
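A library function of this kind might be sketched as follows. This is an illustration in plain Python rather than Poplar code, and it assumes the two-input adders are arranged as a pairwise reduction tree; the function names `build_sum_graph` and `evaluate` are hypothetical, and the layout in the image may differ.

```python
from typing import List, Tuple

# Each vertex is (vertex_type, left_input, right_input, output).
AddVertex = Tuple[str, str, str, str]


def build_sum_graph(n: int) -> Tuple[List[AddVertex], str]:
    """Build a graph that sums inputs x0..x{n-1} using only 2-input adders."""
    if n < 1:
        raise ValueError("need at least one input")
    level = [f"x{i}" for i in range(n)]
    vertices: List[AddVertex] = []
    while len(level) > 1:
        next_level = []
        # Pair up neighbouring values; each pair gets its own adder vertex.
        for i in range(0, len(level) - 1, 2):
            out = f"t{len(vertices)}"
            vertices.append(("add", level[i], level[i + 1], out))
            next_level.append(out)
        if len(level) % 2 == 1:
            next_level.append(level[-1])  # odd one out moves up unchanged
        level = next_level
    return vertices, level[0]


def evaluate(vertices: List[AddVertex], output: str, inputs: List[float]) -> float:
    """Evaluate the graph; vertices were appended in dependency order."""
    values = {f"x{i}": v for i, v in enumerate(inputs)}
    for _, left, right, out in vertices:
        values[out] = values[left] + values[right]
    return values[output]


for size in (2, 4, 8):
    graph, out_edge = build_sum_graph(size)
    print(size, "inputs ->", len(graph), "adder vertices")

graph5, out5 = build_sum_graph(5)
total = evaluate(graph5, out5, [1, 2, 3, 4, 5])
print(total)  # 15
```

The caller only sees a familiar "sum these numbers" interface, while the graph underneath grows with the input size and reuses a single vertex type throughout.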

The Poplar Graph Compiler allows us to compile these graphs to the IPU graph processor efficiently. This is also how we build our graph libraries, in particular the neural network library (POPNN). POPNN contains a highly optimized set of vertex types for a variety of primitives, such as convolutions, pooling and fully connected layers, which can be composed to form complex machine learning models. You have seen the output of graphs from POPNN library calls in the previous blog, but let us have a look at an example: a simple fully connected network trained on MNIST.

MNIST is a simple computer vision dataset, a sort of “hello world” for machine learning. A simple network for learning this dataset helps us understand the graphs that power Poplar applications. By integrating our graph libraries with frameworks such as TensorFlow, we provide the easiest route possible to utilizing the performance of the IPU in machine learning applications.

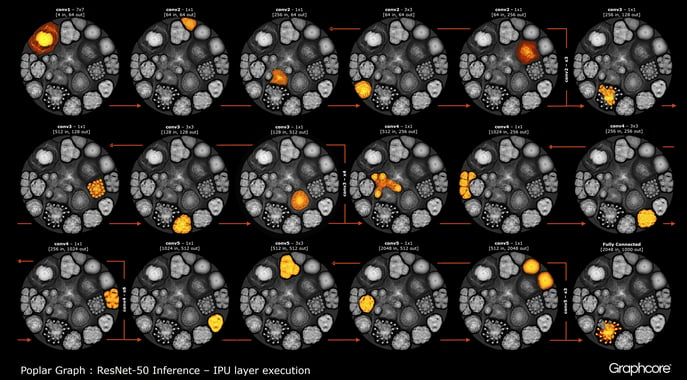
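For readers who have not met this kind of network before, the computation behind a small fully connected MNIST model can be sketched in a few lines of plain Python. This is not POPNN or TensorFlow code. The hidden layer size of 32 is an arbitrary assumption, the weights are random rather than trained, and the input is random numbers standing in for a 28×28 image, so only the shape of the computation is meaningful.

```python
import random
from typing import List, Tuple


def fully_connected(x: List[float], weights: List[List[float]],
                    biases: List[float]) -> List[float]:
    """One fully connected layer: a weighted sum per output unit plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(values: List[float]) -> List[float]:
    """Elementwise non-linearity applied between layers."""
    return [max(0.0, v) for v in values]


def init_layer(n_in: int, n_out: int,
               rng: random.Random) -> Tuple[List[List[float]], List[float]]:
    """Random (untrained) weights and zero biases for a layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


rng = random.Random(0)
w1, b1 = init_layer(784, 32, rng)  # 28 x 28 pixels -> 32 hidden units (assumed size)
w2, b2 = init_layer(32, 10, rng)   # hidden units -> 10 digit classes

image = [rng.random() for _ in range(784)]  # stand-in for one MNIST image
hidden = relu(fully_connected(image, w1, b1))
logits = fully_connected(hidden, w2, b2)
predicted_digit = max(range(10), key=lambda i: logits[i])
print(len(hidden), len(logits), predicted_digit)
```

Every multiply-accumulate in `fully_connected` is independent of the others in the same layer, which is the kind of structure a graph representation can expose to a parallel processor.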

Once we have built a graph for a machine learning model using the Graph Compiler, we need to execute it. This functionality is provided by the Poplar Graph Engine. One of the images shown in the previous blog was that of ResNet-50; we can take a closer look at how this is executed by the Poplar Graph Engine.

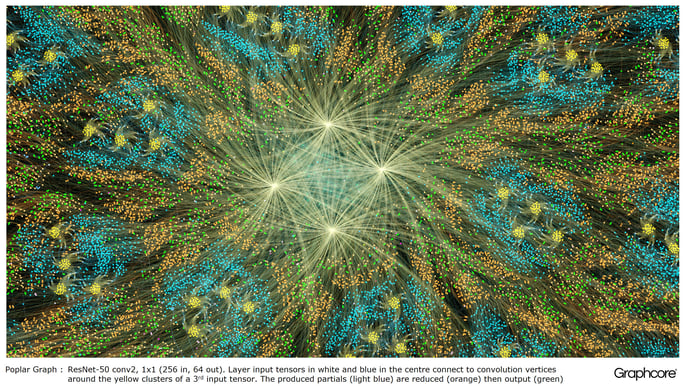

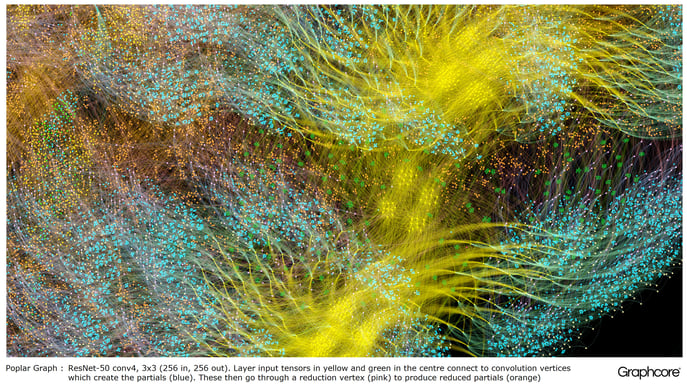

The ResNet-50 architecture allows powerful, deep networks to be assembled from repeated sections. A graph processor such as the IPU need only define these sections once and call them repeatedly. For example, the conv4 layer cluster is executed six times overall but only exists in the graph once; each call uses the same code with different network weight data. There is a large amount of reuse visible in the graph. The images also demonstrate the diversity of shapes of the convolutional layers because, as described above, each of them has a graph built to match the natural shape of the computation.
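The "define once, call repeatedly" idea can be illustrated with a short plain-Python sketch. It is not how Poplar represents ResNet-50: the `section` function is a hypothetical stand-in that does simple arithmetic instead of convolutions, and the integer weights are invented so the result is easy to check. What it does show is one piece of code executed six times with six different sets of weight data.

```python
from typing import Callable, List, Tuple

Section = Callable[[List[int], List[int]], List[int]]


def section(x: List[int], weights: List[int]) -> List[int]:
    """Stand-in for a repeated block: a residual-style update x + w * x."""
    return [xi + wi * xi for xi, wi in zip(x, weights)]


# The "program" holds six calls, but every call refers to the same code.
# Only the weight data differs from one call to the next.
weight_sets = [[k, k, k] for k in range(6)]
program: List[Tuple[Section, List[int]]] = [(section, w) for w in weight_sets]

activations = [1, 2, 3]
for code, weights in program:
    activations = code(activations, weights)

distinct_code_blocks = len({id(code) for code, _ in program})
print(len(program), "calls,", distinct_code_blocks, "copy of the code")
print(activations)  # [720, 1440, 2160]
```

Storing the code once and the weights separately keeps the program small, which matters when the whole model has to fit inside the processor.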

The Graph Engine creates and manages the execution of a machine learning model, using the graph built by the Poplar Graph Compiler. Once deployed, the Graph Engine provides the hooks for debug and analysis tools to connect into. It also monitors and responds to the IPU device or devices used within the application.

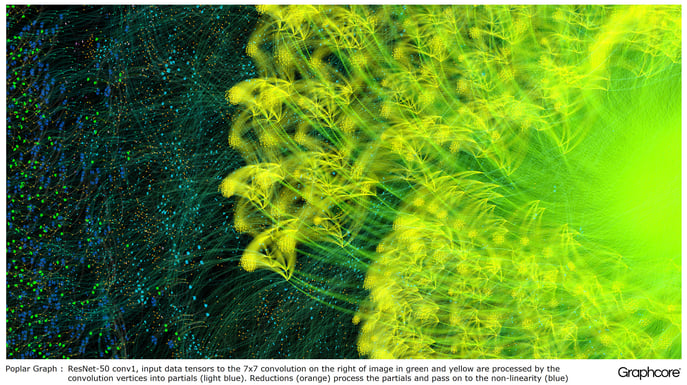

The image we provided previously of a computational graph for ResNet-50 showed the whole model. At this level it is very difficult to pick out the connections between individual vertices, so let’s zoom in. Below are a few examples of sections inside the layers of ResNet-50 seen at a much higher level of magnification.

The Poplar Graph Compiler and Graph Engine are targeted to support our revolutionary Intelligence Processing Unit. The IPU is designed specifically for building and executing computational graphs for all the different types of machine intelligence models. IPU systems can train models much faster than today’s CPU and GPU processors, which were simply not designed for machine intelligence workloads.

The same IPU hardware can also be used to deploy optimized models for inference or prediction. But most importantly, the combination of Poplar and the IPU is much broader in conception than today’s favourite deep learning algorithms; it has the potential to support future waves of innovation in machine learning.
