We are excited to announce that Graphcore now supports Alibaba Cloud’s Open Deep Learning API (ODLA), which is designed to rapidly enable heterogeneous computing and meet the growing demand for AI compute acceleration in datacentres.

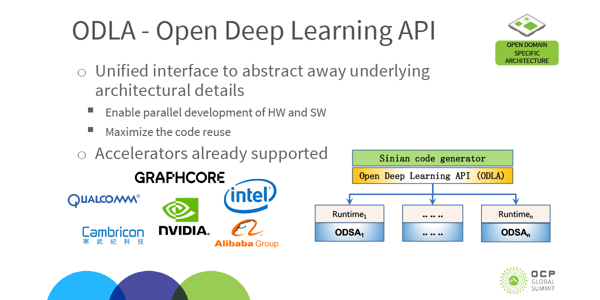

The ODLA interface standard was first announced at the 2020 OCP Global Summit

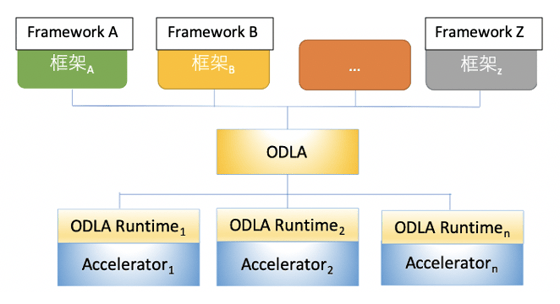

Launched at Alibaba’s recent Apsara Conference, ODLA is a unified programming interface for accelerating deep learning on heterogeneous hardware. The API is designed to maximise efficiency in heterogeneous AI computing environments, where multiple processor types must work together on a common platform. Graphcore’s IPU is one of a handful of processors that support Alibaba’s ODLA.

ODLA elegantly addresses two of the biggest requirements that new Graphcore users have. They want to integrate IPU systems easily into their existing datacentre, and they want to ensure that the resulting setup can be highly optimised at every level, from individual components right up to the whole system.

New applications of machine intelligence mean that we’re asking more of our datacentres than ever before – an increased range of operation types, at a greater scale, using a wider selection of hardware. ODLA makes it straightforward to manage that complexity and extract the full potential of new technologies.

ODLA was developed to solve a long-standing, fundamental challenge in heterogeneous computing: delivering maximum performance from domain-specific chips, which often require architecture-specific software optimisations.

ODLA-based heterogeneous AI hardware docking solution

At the same time, software frameworks also need to optimise the system as a whole, as if it were a homogeneous technology. That may include managing workloads across CPUs, FPGAs, ASICs and other chips.

ODLA acts as an interface, or translator, between the system-wide common language and the architecture-specific optimisations. By reducing the work needed to adapt software to each type of hardware, it lets developers focus more on performance and task optimisation.

The API enables datacentre setups that are both general purpose and high-performance, providing a unified acceleration framework for high-level applications. For application developers, ODLA reduces the need for repeated optimisations for different architectures and should lower development costs and time to market.

The main features and benefits of ODLA announced at the 2020 Apsara Conference include:

- Transparent interface layer with no loss of functionality.

- Hardware and software decoupling: ODLA defines a unified interface through AI-oriented, multi-resolution operator abstraction, decoupling software from hardware so that services can be migrated smoothly. This enables code reuse across hardware platforms, improving efficiency in both development and deployment.

- Multi-modal execution mode: ODLA supports multiple execution modes including interpretative, just-in-time and ahead-of-time compiled execution, achieving compatibility with various hardware execution models.

- General-purpose AI support: ODLA supports both training and inference, and is designed for AI services in all scenarios, including cloud, edge and end-to-end AI services. This is enabled by a wide range of operator definitions and interfaces (e.g. device management, session management, execution management, synchronous and asynchronous events, resource queries and performance monitoring).

- Excellent scalability: support for the unique attributes and custom operators of AI chip manufacturers.
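To make the idea of hardware and software decoupling more concrete, here is a minimal Python sketch of the general pattern: application code is written once against a common operator interface, and each hardware vendor supplies its own implementation behind that interface. The `Backend`, `CpuBackend`, `AcceleratorBackend` and `model` names are hypothetical illustrations of the concept only; this is not ODLA's actual API.

```python
from abc import ABC, abstractmethod
from typing import List

Tensor = List[float]  # simplified stand-in for a real tensor type


class Backend(ABC):
    """Hypothetical unified operator interface that every vendor backend implements."""

    @abstractmethod
    def add(self, a: Tensor, b: Tensor) -> Tensor: ...

    @abstractmethod
    def relu(self, x: Tensor) -> Tensor: ...


class CpuBackend(Backend):
    """Hypothetical reference implementation running on the host CPU."""

    def add(self, a: Tensor, b: Tensor) -> Tensor:
        return [x + y for x, y in zip(a, b)]

    def relu(self, x: Tensor) -> Tensor:
        return [max(0.0, v) for v in x]


class AcceleratorBackend(Backend):
    """Hypothetical vendor backend; a real one would dispatch to device-specific kernels."""

    def add(self, a: Tensor, b: Tensor) -> Tensor:
        return [x + y for x, y in zip(a, b)]

    def relu(self, x: Tensor) -> Tensor:
        return [v if v > 0.0 else 0.0 for v in x]


def model(backend: Backend, x: Tensor, bias: Tensor) -> Tensor:
    """Application code written once, with no knowledge of which chip runs it."""
    return backend.relu(backend.add(x, bias))


if __name__ == "__main__":
    for backend in (CpuBackend(), AcceleratorBackend()):
        print(type(backend).__name__, model(backend, [1.0, -2.0], [0.5, 0.5]))
```

Swapping `CpuBackend()` for `AcceleratorBackend()` changes where the operators run without touching `model`, which is the kind of code reuse across platforms that a unified interface is intended to enable.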

At Graphcore, we believe that the IPU will be at the heart of AI workloads in next-generation datacentres. We understand that realising this vision requires frameworks and tools – including ODLA – that enable our products to work in tandem with other best-in-class technologies.