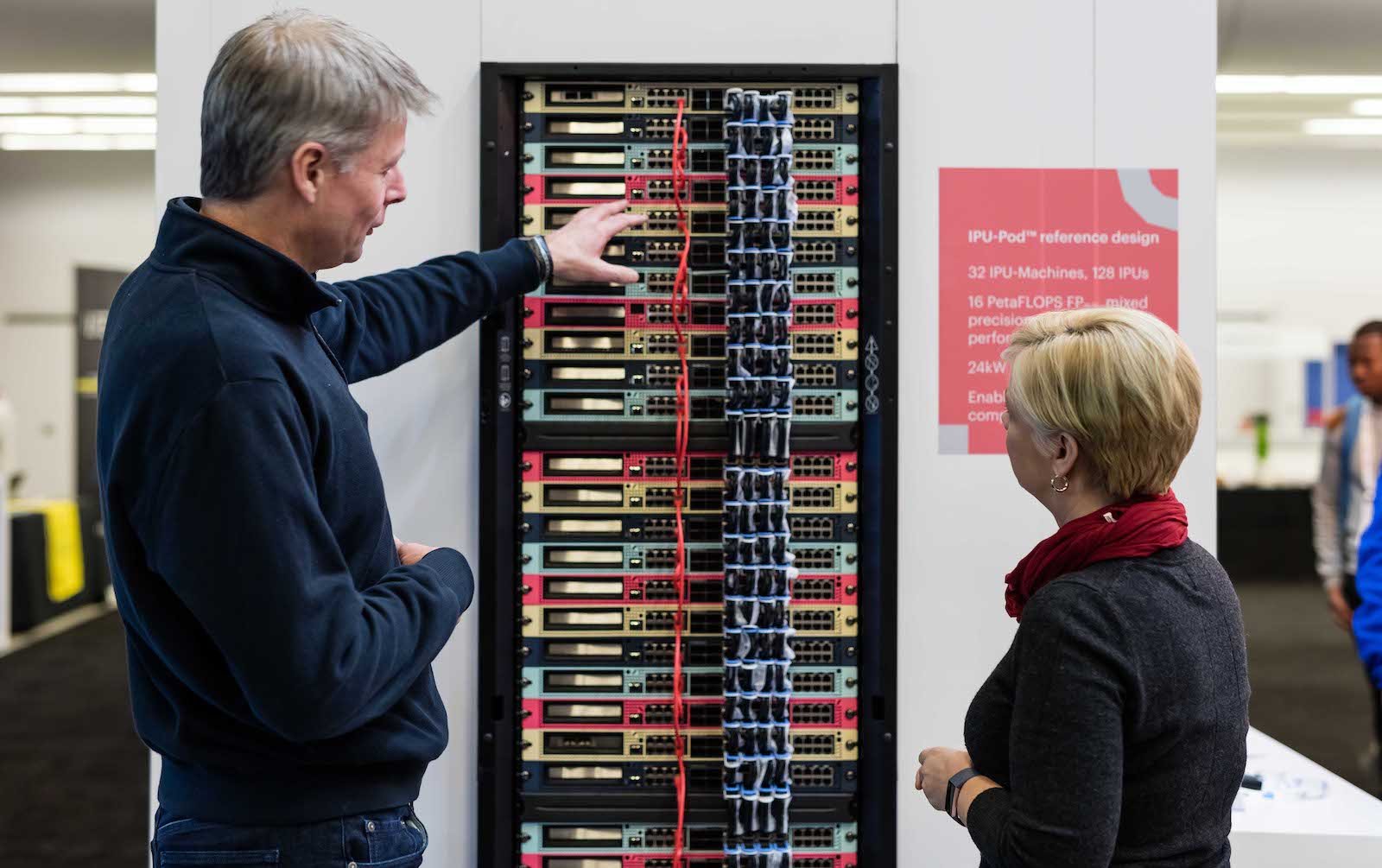

The Graphcore Colossus IPU-Processor was designed so that it could scale to deliver unprecedented levels of compute. This week, at NeurIPS, we are showing our Rackscale IPU-Pod™ reference design, which takes full advantage of the IPU’s scale-up and scale-out features, and can run massive machine intelligence training tasks or can support huge deployments with thousands of users.

To deliver the compute performance required for machine intelligence workloads, research and infrastructure engineering teams have worked hard to scale-up existing hardware to support training for today’s approaches. However, training complex deep neural networks is still taking far too long. Many of the new machine learning approaches need 100x or 1000x more compute. To achieve this level of performance requires a new system solution that can scale to the level of compute required.

To solve this problem, the Graphcore networking team came up with a design for an IPU-Pod that is completely elastic and can scale out to support the massive levels of compute needed to support next generation machine intelligence requirements.

A single 42U rack IPU-Pod delivers over 16 Petaflops of mixed precision compute and a system of 32 IPU-Pods scales to over 0.5 Exaflops of mixed precision compute. These unprecedented performance numbers enable a new level of throughput for both training and inference on the same hardware.

The Graphcore Rackscale IPU System is a fully 'open hardware’ design that allows it to be integrated with our cloud customers’ preferred building block components using industry standard interfaces and protocols, providing complete flexibility with their desired system configurations. As a result, customers will have full control over cost, performance and system management.

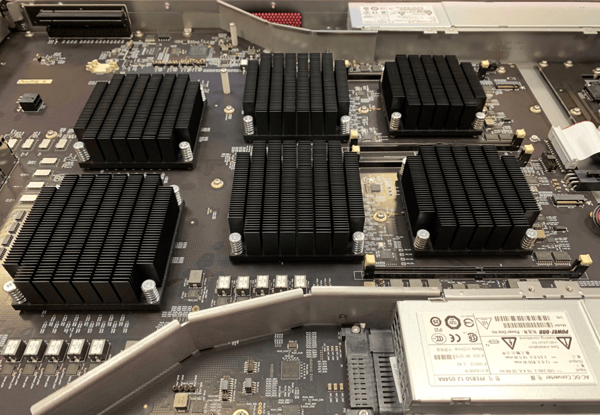

The Rackscale IPU-Pod we are showing at NeurIPS is an example configuration which consists of 32 1U IPU-Machines™, each composed of 4 Colossus GC2 IPU Processors to provide 500 TFlops of mixed precision compute, over 1.2GB of In-Processor Memory™ and an unprecedented memory bandwidth of over 200TB/s. A single IPU-Machine operates with nearly 30,000 independent IPU program threads all working in parallel on knowledge models which are held inside In-Processor Memory.

1U IPU-MACHINE™ compute blade, with four IPUs

1U IPU-MACHINE™ compute blade, with four IPUs

Rackscale IPU Processor systems are optimized for data parallel and model parallel machine intelligence workloads and support massive scale. Additional IPU-Pods can be seamlessly added through standard high-speed OSFP 400G cabling infrastructure, enabling a massive scale-up of machine intelligence compute. A single IPU-Pod can be built with up to 4096 IPU Processors delivering 0.5 ExaFlop of mixed precision IPU compute in just 32 racks.

A 32-Rack IPU-POD with a total of 4096 IPU processors, delivering 0.5 ExaFlop of mixed precision compute

Space is also available in the rack for PCIe or Ethernet connected host servers or the host servers can be connected across the datacenter network.

Rackscale IPU-Processor systems will include an innovative software layer called Virtual-IPU™ that enables IPU-Pods to become completely elastic machine intelligence compute resources for scale-up and scale-out.

The Virtual-IPU software layer integrates with the IPU Poplar® Graph Toolchain and delivers an interface to industry standard container orchestration software such as Kubernetes and VMWare. With Virtual-IPU it becomes possible to run a machine intelligence system in a virtual container on a host CPU and for this to pick up as much IPU compute as it needs either for training or inference. When it finishes, these IPUs can be released for other tasks. Various host to IPU ratios will be supported to accommodate a multitude of machine intelligence workloads with different data processing requirements.

Please stop by the Graphcore Booth No. 117 in the NeurIPS 2018 exhibition to see the IPU Rackscale system and to learn more about our IPU Processors and Poplar Graph Toolchain software. If you're not at the conference, you can watch this short video.

Our Rackscale team is hiring in Oslo, Norway. Check out our careers site if you would like to join the team.