In the University of Bristol's High-Performance Computing group, led by Prof. Simon McIntosh-Smith, we've been investigating how Graphcore’s IPUs can be used at the convergence of AI and HPC compute for scientific applications in computational fluid dynamics, electromechanics and particle simulations.

Over the past decade, machine learning has played a growing role in scientific supercomputing. To better support these power-hungry workloads, supercomputer designs are increasingly incorporating AI compute, such as Graphcore IPU-POD scale-out systems that use lower-precision 32-bit arithmetic, to complement traditional HPC systems built on 64-bit architectures. Although IPU systems are designed specifically for machine intelligence compute, they have tremendous potential for many scientific applications, generating results faster while potentially consuming less energy.

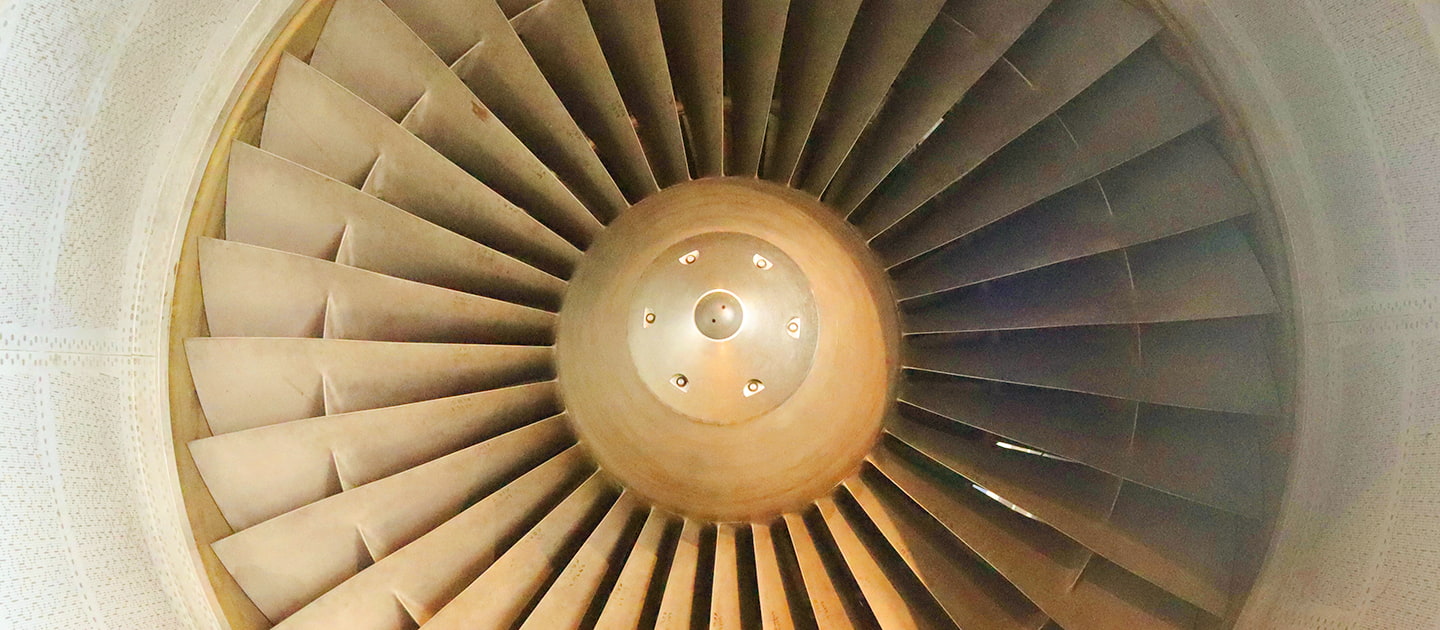

The HPC Group at the University of Bristol is a partner in the five-year EPSRC-funded ASiMoV (Advanced Simulation and Modelling of Virtual Systems) prosperity partnership, a consortium led by Rolls-Royce, along with four other UK universities and businesses. Together, we're developing the techniques needed to simulate the physics of a complete gas-turbine engine to high accuracy: something well beyond today’s state-of-the-art capabilities. Bristol’s High-Performance Computing group is investigating how emerging architectures and AI accelerators can be applied to this problem, and chose the IPU as a prominent example of this new breed of processor.

Scientific software is designed from the ground up to be massively parallel, allowing it to scale to tens of thousands of nodes in a supercomputer. But complex data dependencies and memory access patterns in numerical algorithms mean that application performance is often limited by memory bandwidth or communication constraints rather than the speed at which individual nodes can compute. This is where new processor designs like the IPU, with its large on-chip SRAM memories placed alongside processor cores, offer exciting opportunities to unlock future performance.

In today’s increasingly heterogeneous supercomputers, applications are designed to exploit the strengths of the different kinds of computation devices that might be present. For example, CPUs are used for low-latency general computations in high precision, and have access to large amounts of DRAM, but accessing this memory is slow and energy intensive. GPUs are well suited to data-parallel workflows that can make use of their single-instruction multiple-thread (SIMT) design. In contrast, the IPU has a multiple-instruction multiple-data (MIMD) design which allows running more than 100,000 independent programs at the same time. This design, along with its very high memory bandwidth, huge on-chip memory and dedicated hardware support for operations such as matrix multiplication and convolutions, makes it attractive for some HPC tasks that currently run less optimally on CPUs and GPUs. Applications might include graph processing and efficient processing of sparse linear algebra.

IPUs for Computational Fluid Dynamics

Using Graphcore Mk1 IPUs, we started by investigating a class of HPC problems called structured grids, which underpin the solvers for partial differential equations that are used when modelling physical processes like heat transfer and fluid flow.

HPC developers need to squeeze the maximum efficiency from the underlying hardware, so software is typically written in lower-level languages such as C, C++ or Fortran. For our work with the IPU, we used Graphcore’s Poplar™ low-level C++ framework, and investigated how to express common “building blocks” of HPC software in Poplar, for example:

- Partitioning large structured grids over multiple IPUs

- Efficiently exchanging shared grid regions (“halos”) between the IPU’s tiles

- Exploiting Poplar’s optimised primitives for parallel operations such as reductions, and its implementations of convolutions and matrix multiplication, which can use the underlying optimised IPU hardware

- Optimising critical code sections using Poplar’s vectorised data types (e.g. half4)

- Making use of the IPU’s native support for 16-bit half-precision floating-point numbers
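
The first two building blocks can be illustrated with a minimal plain-Python sketch. This is not Poplar code and does not represent how data is actually mapped to IPU tiles; the function names `partition_rows` and `exchange_halos` are hypothetical, the "workers" stand in for IPUs or tiles, and the sketch assumes every worker receives at least one row.

```python
def partition_rows(grid, num_workers):
    """Split a 2-D grid (list of rows) into contiguous row blocks, one per worker.

    Each block gets one extra halo row above and below, initialised to zeros.
    Assumes num_workers <= number of rows.
    """
    rows, cols = len(grid), len(grid[0])
    base, extra = divmod(rows, num_workers)
    blocks, start = [], 0
    for w in range(num_workers):
        count = base + (1 if w < extra else 0)
        interior = [row[:] for row in grid[start:start + count]]
        blocks.append([[0] * cols] + interior + [[0] * cols])
        start += count
    return blocks


def exchange_halos(blocks):
    """Copy each block's edge rows into the halo rows of its neighbours."""
    for w in range(len(blocks) - 1):
        upper, lower = blocks[w], blocks[w + 1]
        lower[0] = upper[-2][:]   # last interior row of upper -> top halo of lower
        upper[-1] = lower[1][:]   # first interior row of lower -> bottom halo of upper


# Illustrative 6x4 grid split over three workers (two interior rows each).
grid = [[r * 10 + c for c in range(4)] for r in range(6)]
blocks = partition_rows(grid, 3)
exchange_halos(blocks)
```

After the exchange, the middle block holds rows 1 to 4 of the original grid: its own two interior rows plus one halo row copied from each neighbour, which is what a stencil update needs to compute its interior without further communication.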

Memory Bandwidth and Performance Modelling

To guide our optimisation efforts and measure application performance, we first constructed a Roofline performance model for the Mk1 IPU.

We started by implementing the BabelSTREAM benchmark for the IPU, which characterises the memory bandwidth an application can expect to achieve. We found that typical C++ applications (written with a mix of custom C++ vertices and Poplar’s PopLibs libraries) could achieve a memory bandwidth of 7.3 TB/s for single precision, and 7.5 TB/s for half precision. Even higher values are possible for applications that make use of the IPU’s assembly instructions directly. These very high bandwidths are the sorts of numbers we usually see when modelling CPU caches rather than main memory, and are the result of the IPU’s completely on-chip SRAM design.
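
To show how a STREAM-style benchmark turns a timing into a bandwidth figure, here is a minimal plain-Python sketch of the Triad kernel. It is an illustration only, not the BabelSTREAM source or our IPU port: the helper names are hypothetical, the array size is arbitrary, and interpreted Python will report a bandwidth far below what any real hardware achieves.

```python
import time
from array import array


def triad(a, b, c, scalar):
    """STREAM Triad kernel: a[i] = b[i] + scalar * c[i]."""
    for i in range(len(a)):
        a[i] = b[i] + scalar * c[i]


def triad_bytes(n, itemsize):
    """Bytes moved by one Triad pass: read b and c, write a."""
    return 3 * n * itemsize


n = 100_000                      # arbitrary size chosen for the illustration
a = array("d", [0.0]) * n        # 'd' = 8-byte double-precision floats
b = array("d", [1.0]) * n
c = array("d", [2.0]) * n

start = time.perf_counter()
triad(a, b, c, 0.5)
elapsed = time.perf_counter() - start

bandwidth = triad_bytes(n, a.itemsize) / elapsed   # bytes per second
```

The reported bandwidth is simply the number of bytes the kernel must read and write divided by the elapsed time, so it measures what an application can sustain rather than a theoretical peak.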

We could then use these memory bandwidth benchmarks as the basis to construct Roofline models that show when performance-critical sections of the application are either memory bandwidth or compute bound, helping developers choose appropriate optimisations for different parts of their applications.
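
The basic Roofline relationship can be sketched in a few lines of Python: attainable performance is the lower of the compute peak and the memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs per byte moved). The bandwidth below is the single-precision BabelSTREAM figure quoted above; the peak compute value is a placeholder assumption chosen only to make the example run, and the function names are hypothetical.

```python
def roofline(peak_flops, mem_bandwidth, arithmetic_intensity):
    """Attainable FLOP/s for a kernel with the given FLOPs-per-byte ratio."""
    return min(peak_flops, mem_bandwidth * arithmetic_intensity)


def is_memory_bound(peak_flops, mem_bandwidth, arithmetic_intensity):
    """True when the kernel sits to the left of the Roofline ridge point."""
    ridge_point = peak_flops / mem_bandwidth
    return arithmetic_intensity < ridge_point


BANDWIDTH = 7.3e12   # bytes/s: single-precision BabelSTREAM result from the text
PEAK = 30e12         # FLOP/s: placeholder assumption, NOT a measured or vendor figure

low_intensity = roofline(PEAK, BANDWIDTH, 1.0)     # limited by memory bandwidth
high_intensity = roofline(PEAK, BANDWIDTH, 10.0)   # limited by compute peak
```

A kernel whose arithmetic intensity falls below the ridge point benefits most from reducing memory traffic, while one above it benefits from faster arithmetic, such as vectorisation or lower precision.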

Example Structured Grid applications

Using our HPC building blocks for the IPU and our performance models, we implemented two examples of structured grid applications that use the “stencil” pattern: the Gaussian Blur image filter and a 2-D Lattice Boltzmann fluid dynamics simulation.
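
For readers unfamiliar with the stencil pattern, the following plain-Python sketch applies one pass of a 3x3 Gaussian blur: each output cell is a weighted sum of the cell and its eight neighbours. The kernel size, the weights and the choice to leave border pixels unchanged are assumptions made for illustration, and this is not the IPU implementation.

```python
# 3x3 binomial approximation of a Gaussian; the weights sum to 16.
KERNEL = [[1, 2, 1],
          [2, 4, 2],
          [1, 2, 1]]


def gaussian_blur_step(image):
    """Apply one 3x3 stencil pass to the interior; border pixels are copied unchanged."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            total = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    total += KERNEL[dy + 1][dx + 1] * image[y + dy][x + dx]
            out[y][x] = total / 16
    return out


# A single bright pixel in the centre of a 5x5 image spreads to its neighbours.
image = [[0] * 5 for _ in range(5)]
image[2][2] = 16
blurred = gaussian_blur_step(image)
```

Because every cell reads only its immediate neighbours, a partitioned grid needs just a one-cell-wide halo from each adjacent partition, which is why halo exchange dominates the communication in this class of application.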

We investigated scaling these using multiple IPUs, and found it easy to take advantage of Poplar’s support for transparently targeting 2, 4, 8 or 16 IPUs in a “multi-IPU” configuration. Both of our applications showed good weak scaling characteristics: adding more IPUs allowed solving larger problems in almost the same time.
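
Weak scaling is conventionally quantified as the single-device run time divided by the run time on N devices when the problem size grows in proportion to N, so values close to 1 correspond to "almost the same time". The short sketch below shows the calculation; the timings are placeholders invented for the example, not measurements from our experiments.

```python
def weak_scaling_efficiency(time_one_device, time_n_devices):
    """Ratio of single-device time to N-device time for an N-times larger problem."""
    return time_one_device / time_n_devices


# Placeholder timings in seconds (hypothetical, NOT experimental results).
baseline = 10.0
timings = {1: 10.0, 2: 10.0, 4: 12.5, 8: 12.5, 16: 16.0}
efficiency = {n: weak_scaling_efficiency(baseline, t) for n, t in timings.items()}
```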

For the Gaussian Blur application, a single IPU achieved a speedup of more than 5.3x compared to using 48 CPU cores (a dual-socket Intel® Xeon® Platinum 8168 CPU setup running OpenCL). We also achieved a 1.5x speedup over an NVIDIA V100 GPU, with even more impressive results for multi-IPU configurations.

For the Lattice Boltzmann simulation, a single Mk1 IPU achieved a speedup of more than 6.9x compared with 48 CPU cores. This first IPU implementation compared favourably against an existing optimised implementation for the NVIDIA V100 GPU, achieving 0.7x the timings of the V100. According to our Roofline performance models, there is potential for considerable further optimisation.

Future research

Encouraged by this early success using the IPU for structured grid problems, we've been applying the IPU to more complex "unstructured grid" HPC applications that are used in the combustion, aerodynamics and electromechanics simulations that we're tackling as part of the ASiMoV partnership. Since unstructured grids use a graph-like representation of the connections between different nodes in a mesh, memory accesses are much more complicated than in structured grid applications. These applications can potentially benefit even more from the IPU's high-bandwidth and low-latency memory design.
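
To make the contrast with structured grids concrete, the sketch below stores a tiny mesh's connectivity in compressed sparse row (CSR) form and averages each node's neighbours. The mesh, the function names and the averaging operation are all hypothetical illustrations rather than code from the ASiMoV applications; the point is that each neighbour value is fetched through an index lookup instead of a fixed offset.

```python
def build_csr(num_nodes, edges):
    """Build a CSR adjacency (offsets, neighbours) from undirected edges."""
    degree = [0] * num_nodes
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    offsets = [0] * (num_nodes + 1)
    for i in range(num_nodes):
        offsets[i + 1] = offsets[i] + degree[i]
    neighbours = [0] * offsets[-1]
    cursor = offsets[:-1]          # next free slot per node (slicing makes a copy)
    for u, v in edges:
        neighbours[cursor[u]] = v
        cursor[u] += 1
        neighbours[cursor[v]] = u
        cursor[v] += 1
    return offsets, neighbours


def neighbour_average(values, offsets, neighbours):
    """Return the mean of each node's neighbour values (indirect memory reads)."""
    result = []
    for node in range(len(values)):
        nbrs = neighbours[offsets[node]:offsets[node + 1]]
        if nbrs:
            result.append(sum(values[n] for n in nbrs) / len(nbrs))
        else:
            result.append(values[node])   # isolated node keeps its own value
    return result


# Hypothetical four-node mesh: a triangle (0, 1, 2) with node 3 attached to node 2.
edges = [(0, 1), (1, 2), (2, 0), (2, 3)]
offsets, neighbours = build_csr(4, edges)
averaged = neighbour_average([1.0, 2.0, 3.0, 4.0], offsets, neighbours)
```

The reads of `values[n]` jump around memory according to the mesh connectivity, which is the kind of irregular access pattern that caches handle poorly.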

We’ve also been investigating how the IPU can be used for particle simulations. In ASiMoV, particle simulations are an important part of understanding how efficiently an engine design can make use of fuel during combustion. But particle simulations are also an important category of HPC application in their own right, spanning domains from drug research (biomolecular simulations) to astrophysics (simulating the effect of forces like gravity on stars in a galaxy). Our current research explores ways to express common patterns in particle simulations using Poplar’s computational graph paradigm with compiled communication.

Additionally, we have been using IPUs in large “converged AI-HPC” simulations that combine machine learning approaches with traditional simulation techniques. We’re investigating using deep neural networks to accelerate linear solvers, for example by predicting better interpolation operators for use with the Algebraic Multigrid Method, and we’re starting to look at “Physics-informed Machine Learning” models that use techniques like super-resolution to produce accurate fine-resolution results from coarse-resolution simulations.

So far, our research has been carried out on Graphcore Mk1 IPUs using a Dell DSS8440 IPU Server. However, Graphcore’s recent announcements of larger IPU-POD systems using Mk2 IPU technology, along with the continuous rapid improvement in the IPU’s tooling, make the role of the IPU in HPC even more compelling.

Read the paper

Accessing IPUs

For researchers based in Europe, like Professor Simon McIntosh-Smith, who need to process their data safely within the EU or EEA, the smartest way to access IPUs is through G-Core’s IPU Cloud.

With locations in Luxembourg and the Netherlands, G-Core Labs provide IPU instances quickly and securely on demand through a pay-as-you-go model with no upfront commitment. This AI IPU cloud infrastructure reduces hardware and maintenance costs while providing the flexibility of multitenancy and integration with cloud services.

The G-Core AI IPU cloud allows researchers to:

- Get results quickly with state-of-the-art IPU processors specifically designed for machine learning acceleration

- Process large-scale data with cloud AI infrastructure suitable for complex calculations with a wide range of variables

- Use AI for research projects to reveal patterns, increase the speed and scale of data analysis, and form new hypotheses

Learn more about the G-Core IPU Cloud