Training efficiency will be a significant focus for Graphcore Research in 2021, amid machine intelligence's drive towards ever-larger deep neural networks.

Large, over-parametrised models continue to deliver better training and generalisation performance. Indeed, in many domains larger models are more sample-efficient, implying that additional compute budget should be spent on training larger models rather than on training for more iterations (Kaplan et al., 2020; Henighan et al., 2020).

Finding training efficiencies at scale enables further improvements in task performance, while reducing computation cost and power consumption.

Throughout 2020, we have made progress on efficiency-related research areas including arithmetic efficiency, memory efficient training, and effective implementation of distributed training. We have also advanced our research on probabilistic modelling and made significant progress on efficient deep architectures for computer vision and language.

In this blog, we will look at research directions for the coming year, as they relate to training efficiency. We'll also consider new opportunities for parallel training, including our work on Random Bases that was presented at NeurIPS 2020 (Gressmann et al., 2020), as well as research on Local Parallelism that we carried out in collaboration with UC Berkeley and Google Research (Laskin, Metz et al., 2020).

Optimisation Of Stochastic Learning

Developing reduced-memory implementations of deep neural network training will be a priority. Effective learning will also depend heavily on new algorithms and new normalisation techniques for stochastic optimisation that improve training stability and the generalisation performance of small-batch training.
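As a minimal illustration of why normalisation matters for small batches, the sketch below contrasts batch normalisation, whose statistics are shared across the batch, with Group Normalisation, an existing batch-independent alternative chosen here purely as an example (it is not one of the new techniques referred to above). The function names, the 2-D `(batch, channels)` layout and the group count are assumptions for this sketch; learnable scale and shift parameters are omitted.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    # x: (batch, channels). Mean and variance are computed across the batch,
    # so each example's output depends on the other examples in the batch.
    mean = x.mean(axis=0, keepdims=True)
    var = x.var(axis=0, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def group_norm(x, num_groups, eps=1e-5):
    # x: (batch, channels). Statistics are computed per example over groups
    # of channels, so the output does not depend on the batch size.
    b, c = x.shape
    g = x.reshape(b, num_groups, c // num_groups)
    mean = g.mean(axis=2, keepdims=True)
    var = g.var(axis=2, keepdims=True)
    return ((g - mean) / np.sqrt(var + eps)).reshape(b, c)

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 16))

# Normalise the first example alone (batch of 1) and inside a batch of 8.
bn_small, bn_large = batch_norm(x[:1]), batch_norm(x)[:1]
gn_small, gn_large = group_norm(x[:1], 4), group_norm(x, 4)[:1]
print(np.allclose(bn_small, bn_large))  # False: BN output depends on the batch
print(np.allclose(gn_small, gn_large))  # True: GN output is batch-independent
```

With a batch of one, batch normalisation maps every activation to zero, an extreme case of the noisy batch statistics that make small-batch training harder.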

New Efficient Models For Deep Learning And Graph Networks

For both unsupervised/self-supervised pre-training and supervised fine-tuning on downstream tasks, it will be critical to improve the training performance and computational efficiency of deep models by designing new processing functions and building blocks for different applications.

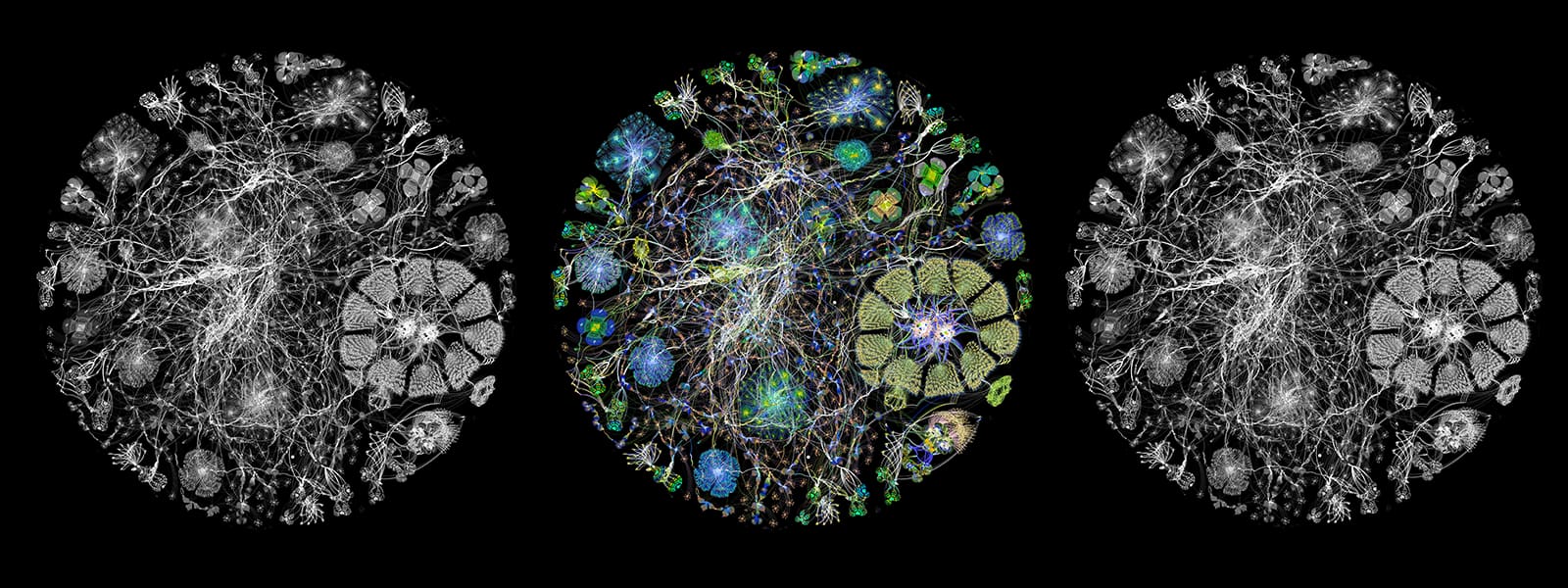

In our research, we will continue to consider fundamental applications such as computer vision and natural language understanding, while also targeting applications based on graph networks, including genomics and recommender systems.

Sparse Training

Sparse training allows AI practitioners to reduce the compute footprint and power consumption of large over-parametrised models, with the goal of making it possible to train models larger than is currently feasible.

Pruning a dense model at the end of training, or progressively increasing the amount of sparsity during training, allows researchers to reduce the size of the model used for inference. However, these approaches remain limited by the compute and memory requirements of the full dense model, at least for part of the training run.
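A minimal sketch of pruning a trained dense weight matrix at the end of training, assuming global magnitude pruning as the criterion (one common choice); `magnitude_prune` is a hypothetical helper. Note that the ranking step needs the full dense tensor, which is exactly the limitation described above.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(round(sparsity * weights.size))       # number of weights to remove
    mask = np.ones(weights.size, dtype=bool)
    if k > 0:
        # Ranking |w| requires the full dense tensor to exist first.
        drop = np.argsort(np.abs(weights), axis=None)[:k]
        mask[drop] = False
    mask = mask.reshape(weights.shape)
    return weights * mask, mask

rng = np.random.default_rng(0)
dense = rng.normal(size=(64, 64))   # stands in for trained dense weights
sparse, mask = magnitude_prune(dense, sparsity=0.9)
print(f"kept {mask.mean():.1%} of the weights")  # kept 10.0% of the weights
```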

Reduced computation and memory requirements are obtained by methods that prune the deep network at initialisation and then train the resulting pruned subnetwork (Hayou et al., 2020). However, static sparse training, which keeps a fixed sparsity pattern throughout training, generally leads to inferior task performance.
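The sketch below illustrates static sparse training on a toy least-squares problem: a mask is drawn once at initialisation and every gradient step is restricted to it, so only the sparse subnetwork is ever updated. A random mask is an assumption made for brevity; pruning-at-initialisation methods such as the one cited above choose the mask with a more principled criterion.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 256, 100
x = rng.normal(size=(n, d))
y = x @ rng.normal(size=d)              # toy regression targets

# Sparsity pattern fixed once at initialisation (about 20% density, random).
mask = rng.random(d) < 0.2
w = np.zeros(d)

def mse(w):
    return np.mean((x @ w - y) ** 2)

initial = mse(w)
for step in range(500):
    grad = x.T @ (x @ w - y) / n        # gradient of 0.5 * mean squared error
    w -= 0.05 * grad * mask             # only weights inside the fixed mask move
print(initial, mse(w))
```

Because the pattern never changes, the model can only ever use the roughly 20 coordinates selected at initialisation, however informative the others turn out to be.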

Improved task performance can be obtained by dynamic sparse training, which explores the high-dimensional parameter space of large models by periodically changing the sparsity pattern during training, while maintaining the reduced computation cost and power consumption associated with the sparse subnetwork (Evci et al., 2019; Jayakumar et al., 2020).
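One way to change the sparsity pattern while keeping the number of active weights constant is a drop-and-grow update in the spirit of Evci et al. (2019): periodically deactivate the active weights with the smallest magnitude, and activate the same number of inactive weights with the largest dense-gradient magnitude. The sketch assumes this criterion and a flat weight vector; `drop_and_grow` and its `fraction` parameter are hypothetical names, and real implementations differ in details such as the update schedule.

```python
import numpy as np

def drop_and_grow(w, mask, grad, fraction):
    """Swap `fraction` of the active weights for new ones at fixed density.

    w, grad: 1-D float arrays; mask: 1-D boolean array of active weights.
    The dense gradient is only needed at these periodic mask updates.
    """
    n_swap = min(int(fraction * mask.sum()), int((~mask).sum()))
    if n_swap == 0:
        return w, mask
    active = np.flatnonzero(mask)
    inactive = np.flatnonzero(~mask)
    # Drop: the active weights with the smallest magnitude.
    drop = active[np.argsort(np.abs(w[active]))[:n_swap]]
    # Grow: the inactive weights with the largest gradient magnitude.
    grow = inactive[np.argsort(-np.abs(grad[inactive]))[:n_swap]]
    new_mask = mask.copy()
    new_mask[drop] = False
    new_mask[grow] = True
    new_w = w * new_mask
    new_w[grow] = 0.0                  # newly grown connections start at zero
    return new_w, new_mask

rng = np.random.default_rng(0)
mask = rng.random(1000) < 0.1          # about 10% of weights active
w = rng.normal(size=1000) * mask
grad = rng.normal(size=1000)           # stand-in for a dense gradient
new_w, new_mask = drop_and_grow(w, mask, grad, fraction=0.3)
print(mask.sum() == new_mask.sum())    # True: density is unchanged
```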

The effective use of dynamic sparse training, with very high sparsity in both the forward and backward passes, could make it possible to train over-parametrised deep networks that are larger than the largest models that can be trained today (Jayakumar et al., 2020).

New Directions For Parallel Training

To reduce the time required to train large over-parametrised models, it is essential to investigate optimisation algorithms for large-scale distributed training that can be implemented efficiently over a large number of processors.

Faster training is conventionally obtained through data parallelism over a number of model replicas, each processing a portion of every minibatch of the stochastic optimisation algorithm. Data-parallel training improves throughput by increasing the batch size. However, the benefit is limited: in an initial region, the number of training steps needed falls roughly in proportion to the increase in batch size, so training time scales down with the number of processors; beyond this region, further increases in batch size give diminishing returns until a saturation region is reached, where increased parallelism yields no further speedup (Shallue et al., 2018).
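A minimal single-process simulation of synchronous data parallelism: each replica computes the gradient on its equal-sized shard of the minibatch, and averaging the local gradients (the all-reduce step) reproduces the full-minibatch gradient. The least-squares model and the names `shard_gradient` and `num_replicas` are assumptions for illustration.

```python
import numpy as np

def shard_gradient(w, x, y):
    # Gradient of 0.5 * mean squared error for a linear model on one shard.
    return x.T @ (x @ w - y) / len(y)

rng = np.random.default_rng(0)
batch_size, dim, num_replicas = 512, 20, 8
x = rng.normal(size=(batch_size, dim))
y = rng.normal(size=batch_size)
w = np.zeros(dim)

# Each replica processes an equal slice of the minibatch.
shards = zip(np.split(x, num_replicas), np.split(y, num_replicas))
local_grads = [shard_gradient(w, xs, ys) for xs, ys in shards]

# All-reduce: average the local gradients across replicas.
averaged = np.mean(local_grads, axis=0)
full = shard_gradient(w, x, y)
print(np.allclose(averaged, full))  # True
```

Adding replicas at a fixed shard size grows the global batch, which is why the scaling behaviour described by Shallue et al. (2018) bounds the useful degree of data parallelism.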

As model size grows, each data-parallel replica can in turn be implemented over multiple processors using pipeline parallelism, where the layers of each replica are divided into pipeline stages. For very large models, the layers of each stage can be further split over multiple processors through basic model parallelism. While pipeline parallelism improves throughput, the speedup from adding pipeline stages is obtained only by increasing the batch size, since more micro-batches are needed to keep every stage busy. Therefore, at the largest batch size that still allows effective training, pipeline parallelism reduces the fraction of the overall batch that is available for data parallelism (Huang et al., 2018).
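The trade-off can be made concrete with a little arithmetic. In a GPipe-style schedule with K stages and M micro-batches per step, each stage is busy for M of the M + K - 1 time slots, so it idles for a fraction (K - 1) / (M + K - 1). The sketch assumes a rule of thumb of M = 4K micro-batches to keep this bubble small, plus a hypothetical fixed global batch budget, and counts how many data-parallel replicas that budget can still feed.

```python
GLOBAL_BATCH = 4096   # hypothetical largest batch size that still trains well
MICRO_BATCH = 8       # hypothetical micro-batch size

def bubble_fraction(k, m):
    # Idle fraction per stage: K - 1 empty slots out of M + K - 1.
    return (k - 1) / (m + k - 1)

def replicas_available(k, m):
    # Each pipelined replica consumes m micro-batches per optimiser step.
    return GLOBAL_BATCH // (m * MICRO_BATCH)

for k in (1, 2, 4, 8):
    m = 4 * k          # assumed rule of thumb: M = 4K keeps the bubble small
    print(f"stages={k} bubble={bubble_fraction(k, m):.3f} "
          f"replicas={replicas_available(k, m)}")
```

Under these assumptions, each doubling of the stage count halves the number of replicas the same global batch can support (128, 64, 32, 16), while the bubble fraction stays below 0.18.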

We are considering new approaches for parallel processing which can be employed for efficient distributed training of large models.

Random Bases

The cost of training over-parametrised models can be reduced by exploring their large parameter space along a small number of dimensions. This approach has been studied by restricting gradient descent to a small number of random directions, that is, to a randomly selected low-dimensional weight subspace that is then kept fixed throughout training (Li et al., 2018).
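A minimal sketch of training in a fixed random subspace: the D parameters are written as `theta = theta0 + P @ z`, where `theta0` is the initialisation, `P` is a random D × d basis drawn once, and only the d-dimensional vector `z` is trained. The toy least-squares objective and all sizes are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n, D, d = 200, 500, 20                    # examples, full dimension, subspace size
x = rng.normal(size=(n, D))
y = rng.normal(size=n)

theta0 = 0.01 * rng.normal(size=D)        # initial parameters, kept fixed
P = rng.normal(size=(D, d)) / np.sqrt(D)  # random basis, drawn once, never changed
z = np.zeros(d)                           # the only trainable parameters

def loss_and_grad(theta):
    r = x @ theta - y
    return 0.5 * np.mean(r ** 2), x.T @ r / n

loss_start, _ = loss_and_grad(theta0 + P @ z)
for step in range(200):
    _, g = loss_and_grad(theta0 + P @ z)
    z -= 0.05 * (P.T @ g)                 # chain rule: dL/dz = P^T dL/dtheta
loss_end, _ = loss_and_grad(theta0 + P @ z)
print(loss_start, loss_end)
```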

Our recent work (Gressmann et al., 2020) improves on this approach by training in low-dimensional Random Bases and exploring the parameter space by re-drawing the random directions during training.
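A sketch of the re-drawing idea on the same kind of toy problem: at every step a fresh random D × d basis is sampled, the full gradient is projected onto it, and the low-dimensional step is mapped back into parameter space. Plain gradient steps and Gaussian bases are assumptions here; the published method may differ in details not reproduced in this sketch.

```python
import numpy as np

rng = np.random.default_rng(0)
n, D, d = 200, 500, 20
x = rng.normal(size=(n, D))
y = rng.normal(size=n)
theta = np.zeros(D)

def loss_and_grad(theta):
    r = x @ theta - y
    return 0.5 * np.mean(r ** 2), x.T @ r / n

loss_start, _ = loss_and_grad(theta)
for step in range(200):
    _, g = loss_and_grad(theta)
    P = rng.normal(size=(D, d)) / np.sqrt(D)  # new random basis at every step
    g_low = P.T @ g                           # d-dimensional projected gradient
    theta -= 0.05 * (P @ g_low)               # map the step back to D dimensions
loss_end, _ = loss_and_grad(theta)
print(loss_start, loss_end)
```

Unlike the fixed-subspace version, the reachable set is no longer confined to one d-dimensional slice, because successive steps move along different random directions.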

Training over random subspaces can be accelerated further by parallelising over multiple processors, with different workers computing gradients for different random projections. With this implementation, gradients can be exchanged by communicating only the low-dimensional gradient vector and the random seed of the projection, which results in a nearly linear training speedup as the number of processors increases.
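The communication saving comes from never sending a projection matrix: any worker can regenerate it from its seed. The sketch simulates several workers in one process; each projects its local gradient onto a basis drawn from its own seed and sends only `(seed, low_dim_grad)`, and the receiver rebuilds the full-dimensional update. The message format, the seeding scheme (in practice seeds would also vary per step) and the function names are assumptions for illustration.

```python
import numpy as np

D, d, num_workers = 10_000, 16, 4   # arbitrary sizes for illustration

def basis(seed):
    # Deterministic: the same seed always regenerates the same D x d basis.
    return np.random.default_rng(seed).normal(size=(D, d)) / np.sqrt(D)

def worker_message(seed, grad):
    # Project the local gradient; send the seed and d floats instead of D floats.
    return seed, basis(seed).T @ grad

def apply_messages(messages):
    # Rebuild each worker's full-dimensional step from its seed and sum them.
    return sum(basis(seed) @ g_low for seed, g_low in messages)

rng = np.random.default_rng(123)
local_grads = [rng.normal(size=D) for _ in range(num_workers)]  # stand-ins
messages = [worker_message(seed, g) for seed, g in enumerate(local_grads)]
update = apply_messages(messages)

floats_sent = sum(g_low.size for _, g_low in messages)
print(floats_sent, "floats sent instead of", num_workers * D)
# 64 floats sent instead of 40000
```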

Local Parallelism

In training based on backpropagation, the backward pass must be processed sequentially through successive layers, and the parameters of each layer can only be updated once that pass has reached it (backward locking); both constraints significantly hinder parallel training. Local Parallelism can resolve these challenges by updating different blocks of the model separately, each based on a local objective. Local optimisation can be implemented with supervised learning or self-supervised representation learning, using either greedy local updates (Belilovsky et al., 2019; Lowe et al., 2019) or overlapping local updates (Xiong et al., 2020).
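A minimal sketch of greedy local learning with two blocks, assuming a toy regression task, one tanh layer per block and a linear auxiliary head per block (all assumptions; the cited works use deeper blocks and classification or contrastive objectives). Each block is updated only from its own local loss, and the second block receives the first block's output as a plain array, so no gradient flows back between blocks. On separate processors, the blocks could therefore update concurrently without backward locking.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d_in, d_hid = 256, 10, 32
x = rng.normal(size=(n, d_in))
y = np.sin(x[:, :1]) + 0.1 * x[:, 1:2]     # toy regression target, shape (n, 1)

def init_block(d_in, d_out):
    # W: the block's layer; A: its auxiliary local head (discarded after training).
    return {"W": rng.normal(size=(d_in, d_out)) / np.sqrt(d_in),
            "A": rng.normal(size=(d_out, 1)) / np.sqrt(d_out)}

def local_step(block, h_in, y, lr):
    """Forward one block, update it from its local loss, return output and loss."""
    h = np.tanh(h_in @ block["W"])
    err = h @ block["A"] - y                 # local head prediction error
    loss = 0.5 * np.mean(err ** 2)
    # Backpropagate only within this block; no gradient w.r.t. h_in is computed.
    g_out = err / len(y)
    g_A = h.T @ g_out
    g_h = (g_out @ block["A"].T) * (1 - h ** 2)   # through the tanh
    g_W = h_in.T @ g_h
    block["A"] -= lr * g_A
    block["W"] -= lr * g_W
    return h, loss

blocks = [init_block(d_in, d_hid), init_block(d_hid, d_hid)]
history = []
for step in range(300):
    h, losses = x, []
    for block in blocks:
        h, loss = local_step(block, h, y, lr=0.1)
        losses.append(loss)
    history.append(losses)
print("initial local losses:", np.round(history[0], 3))
print("final local losses:  ", np.round(history[-1], 3))
```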

Local parallelism improves throughput by scaling processing over parallel workers, as pipeline parallelism does, but without having to increase the minibatch size at the same time, so the full minibatch remains available for data parallelism. Local parallelism has been shown to be particularly effective in the high-compute regime, as discussed in our recent collaboration with UC Berkeley and Google Research (Laskin, Metz et al., 2020).

Multi-Model Training

A direct and attractive way to scale training over multiple workers is to train an ensemble of deep networks instead of a single larger network. Unlike data-parallel training, training Deep Ensembles (Kondratyuk et al., 2020; Lobacheva et al., 2020) requires no communication between the ensemble members during training. Deep ensembling has been shown to produce higher accuracy for the same computational cost, and to be more efficient than training a single larger model (Kondratyuk et al., 2020). Moreover, ensembling enables the exploration of different modes of the non-convex optimisation landscape, and can provide well-calibrated estimates of predictive uncertainty.
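A minimal sketch of a deep ensemble, scaled down to small random-feature models: each member has its own fixed random tanh features (set by its seed) and a logistic-regression readout, members are trained independently with no communication, and their predicted probabilities are averaged afterwards. The spread of member predictions is shown as one simple uncertainty signal. The model, data and hyperparameters are assumptions for illustration.

```python
import numpy as np

def train_member(seed, x, y, width=64, steps=500, lr=0.2):
    """Train one member on its own: random tanh features fixed by `seed`,
    followed by a logistic-regression readout trained by gradient descent."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(x.shape[1], width))
    feats = np.tanh(x @ W)
    w = np.zeros(width)
    for _ in range(steps):
        p = 1 / (1 + np.exp(-(feats @ w)))
        w -= lr * feats.T @ (p - y) / len(y)    # cross-entropy gradient
    return lambda x_new: 1 / (1 + np.exp(-(np.tanh(x_new @ W) @ w)))

rng = np.random.default_rng(100)
x = rng.normal(size=(300, 2))
y = (x[:, 0] + x[:, 1] > 0).astype(float)       # toy binary labels

# Members are trained independently: nothing is exchanged between them.
members = [train_member(seed, x, y) for seed in range(5)]

x_test = rng.normal(size=(1000, 2))
probs = np.stack([m(x_test) for m in members])  # (members, test points)
ensemble_prob = probs.mean(axis=0)              # averaged prediction
spread = probs.std(axis=0)                      # member disagreement per point
acc = np.mean((ensemble_prob > 0.5) == (x_test[:, 0] + x_test[:, 1] > 0))
print(f"ensemble accuracy {acc:.3f}, mean spread {spread.mean():.3f}")
```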

Deep ensembles can also be seen as a practical mechanism for approximate Bayesian marginalisation, or Bayesian model averaging (Wilson & Izmailov, 2020).
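In symbols, using our own notation for a sketch of the standard formulation: the Bayesian model average integrates the predictions of all weight settings w against the posterior given the training data D, and an ensemble of M independently trained networks with weights w_1, ..., w_M approximates that integral with an equally weighted sum.

```latex
p(y \mid x, \mathcal{D})
  = \int p(y \mid x, w)\, p(w \mid \mathcal{D})\, \mathrm{d}w
  \;\approx\; \frac{1}{M} \sum_{m=1}^{M} p(y \mid x, w_m)
```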

An effective alternative for training multiple deep models in place of a single larger model is codistillation (Zhang et al., 2017; Anil et al., 2018; Sodhani et al., 2020). The aim of codistillation is to train multiple networks to learn the same input-output mapping by periodically sharing their respective predictions. The method has been shown to tolerate asynchronous execution using stale predictions from other models. Whereas ensembling requires the individual models' predictions to be averaged after training, codistillation uses a single training phase in which all the models are trained to make the same predictions.
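A minimal sketch of codistillation between two models, assuming logistic-regression models on a binary task and a distillation term equal to the cross-entropy between each model's prediction and its peer's stale prediction, refreshed only every `refresh_every` steps to mimic asynchronous exchange. The weighting `alpha` and all other settings are illustrative assumptions, not values from the cited works.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

rng = np.random.default_rng(0)
x = rng.normal(size=(400, 5))
y = (x @ rng.normal(size=5) > 0).astype(float)

# Two independently initialised models.
weights = [rng.normal(size=5), rng.normal(size=5)]
alpha, lr, refresh_every = 0.5, 0.5, 10

def disagreement(ws):
    return np.mean(np.abs(sigmoid(x @ ws[0]) - sigmoid(x @ ws[1])))

start_gap = disagreement(weights)
for step in range(500):
    if step % refresh_every == 0:
        stale = [sigmoid(x @ w) for w in weights]   # periodic prediction exchange
    for i in range(2):
        p = sigmoid(x @ weights[i])
        q = stale[1 - i]                            # peer's (possibly stale) prediction
        # Gradient of CE(y, p) + alpha * CE(q, p) with respect to the logits.
        g_logit = (p - y) + alpha * (p - q)
        weights[i] = weights[i] - lr * x.T @ g_logit / len(y)
end_gap = disagreement(weights)
accs = [np.mean((sigmoid(x @ w) > 0.5) == y) for w in weights]
print(start_gap, end_gap, accs)
```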

Conditional Sparse Computation

Conditional computation for deep learning can be implemented as a deep mixture of experts, with a sparse gating mechanism that activates only certain compute blocks of the overall network based on the input (Shazeer et al., 2017; Lepikhin et al., 2020).
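A minimal sketch of a sparsely gated mixture-of-experts layer with top-k routing, in the general style of Shazeer et al. (2017): a linear gate scores all experts, only the k highest-scoring experts are evaluated for each input, and their outputs are combined with softmax weights renormalised over the selected experts. Gate noise, load-balancing losses and capacity limits used in practice are omitted, and the one-layer experts are an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, num_experts, k = 16, 8, 2
gate_W = rng.normal(size=(d_model, num_experts)) / np.sqrt(d_model)
# Each expert is assumed to be a single linear map followed by a ReLU.
experts = [rng.normal(size=(d_model, d_model)) / np.sqrt(d_model)
           for _ in range(num_experts)]

def moe_layer(x):
    """x: (batch, d_model). Returns outputs and the experts chosen per input."""
    logits = x @ gate_W                               # (batch, num_experts)
    top = np.argsort(-logits, axis=1)[:, :k]          # top-k expert indices
    out = np.zeros_like(x)
    for b in range(x.shape[0]):
        sel = logits[b, top[b]]
        gates = np.exp(sel - sel.max())
        gates /= gates.sum()                          # softmax over selected experts
        for g, e in zip(gates, top[b]):
            # Only the k selected experts are ever evaluated for this input.
            out[b] += g * np.maximum(x[b] @ experts[e], 0.0)
    return out, top

x = rng.normal(size=(4, d_model))
out, chosen = moe_layer(x)
print(chosen)   # the k experts selected for each of the 4 inputs
```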

This approach significantly increases the overall size of the model that can be trained for a fixed computation cost, relying on the exponentially many possible combinations of processing blocks within a single model (Wang et al., 2018).
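Some back-of-the-envelope arithmetic, assuming L identical layers that each hold E equally sized experts with P parameters and use top-k routing: stored parameters grow with E, the parameters used per input grow only with k, and the number of distinct expert combinations an input can follow is C(E, k) raised to the power L. The specific numbers are arbitrary.

```python
from math import comb

layers, experts, k = 12, 64, 2
params_per_expert = 4_000_000

total = layers * experts * params_per_expert   # parameters stored
active = layers * k * params_per_expert        # parameters used per input
paths = comb(experts, k) ** layers             # distinct routing combinations

print(f"active fraction: {active / total:.5f}")  # active fraction: 0.03125
print(f"routing paths: {paths:.3e}")
```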

During training, the gated selection of different parts of the large network lets the model learn to associate compute blocks with specific inputs or tasks, while keeping the computation load and memory bandwidth low. This approach would then make it possible to solve downstream tasks by dynamically selecting and executing different parts of the network based on the input.

Increasing the total size of a sparsely gated model has been shown to improve task performance, while the computational cost per input corresponds to only a relatively small fraction of the total number of parameters.

AI Research In 2021

Looking ahead to the next crucial stages of AI research, it will be exciting to explore groundbreaking new approaches both for fundamental deep learning applications, such as image processing and natural language processing, and for domains based on graph networks, including protein engineering, drug discovery and recommender systems.

The success of these innovative approaches will hinge on the increased compute power and flexibility of new processors such as the Graphcore IPU, which can enable radically new directions for effective machine learning at scale.