We are pleased to announce the release of Poplar SDK 3.1, which is now available for download from Graphcore’s software download portal and Docker Hub.

Poplar SDK 3.1 highlights

A brief summary of the Poplar SDK 3.1 release is provided below; for a more complete list of updates, please see the release notes.

PyTorch 1.13 Support

Poplar SDK 3.1 delivers support for PyTorch 1.13, enabling our customers to use the latest stable release of PyTorch (and related libraries) on the IPU. Installing the PopTorch wheel from the Poplar SDK will automatically install the correct version of PyTorch.

Automatic loss scaling for TensorFlow 2

In our last release (Poplar SDK 3.0), Graphcore introduced automatic loss scaling (ALS) in preview for PyTorch and PopART. With Poplar SDK 3.1, we are extending framework coverage with ALS support in TensorFlow 2: IPU-specific ALS optimizer classes have been added to the IPU Keras package. This functionality is delivered as an experimental release so that customers can try it and provide feedback as we continue to refine it.

As a reminder, Graphcore ALS uses a unique histogram-based loss scaling algorithm to reliably and easily improve stability when training large models in mixed precision. This yields a combination of efficiency, ease of use, and remarkable stability surpassing all other loss scaling methods.
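To make the idea of histogram-based loss scaling concrete, here is a minimal NumPy sketch. It is not Graphcore's implementation or API: the function name `update_loss_scale`, the two-bin histogram, and the threshold values are assumptions chosen for illustration only. The sketch counts how many gradient magnitudes fall in an upper bin close to the float16 limit, then halves the loss scale if that bin is too full and doubles it otherwise.

```python
import numpy as np

FP16_MAX = 65504.0  # largest finite float16 value


def update_loss_scale(grads, loss_scale, bin_edge_fraction=0.5,
                      max_upper_fraction=1e-3, factor=2.0):
    """Return an adjusted loss scale from a two-bin gradient histogram.

    All parameter names and default values are illustrative assumptions,
    not values taken from the Poplar SDK.
    """
    magnitudes = np.abs(np.asarray(grads, dtype=np.float64)).ravel()
    if magnitudes.size == 0:
        return loss_scale  # nothing to measure, leave the scale unchanged

    # Two-bin histogram: [0, threshold) and [threshold, inf).
    threshold = bin_edge_fraction * FP16_MAX
    upper_count = np.count_nonzero(magnitudes >= threshold)
    upper_fraction = upper_count / magnitudes.size

    if upper_fraction > max_upper_fraction:
        # Too many gradients are close to overflow: back off.
        return loss_scale / factor
    # Plenty of headroom: use more of the float16 range.
    return loss_scale * factor
```

In a training loop, a function like this would be called on the scaled gradients every few steps, with the returned value used as the loss multiplier for the next step.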

While the approach behind Graphcore ALS is technically accelerator-agnostic and should benefit anyone training large models in mixed precision, its origin lies in our unique experiences developing applications for IPUs.

To learn more about Graphcore ALS, visit our dedicated blog post.

Improved support for sparse computation

We have enriched the popsparse library in PopLibs by adding a sparse matrix multiply operation where the sparse operand has a fixed sparsity pattern at compile time.

Like the existing dynamic sparse operation, the new static operation supports both element sparsity and block sparsity. Because the sparsity pattern is fixed, it has lower memory requirements and achieves higher FLOP utilisation.
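The following NumPy sketch illustrates why a fixed sparsity pattern helps. It does not use the popsparse API; `plan_static_block_sparse` and `static_block_sparse_matmul` are hypothetical names introduced here. The list of non-zero blocks is computed once, standing in for compile time, and the multiply then visits only those blocks. Element sparsity corresponds to a block size of 1.

```python
import numpy as np


def plan_static_block_sparse(mask):
    """Turn a boolean block mask into a fixed list of (block_row, block_col).

    This stands in for the work done once, when the pattern is known.
    """
    return [(int(r), int(c)) for r, c in np.argwhere(mask)]


def static_block_sparse_matmul(blocks, plan, block, n_block_rows, dense):
    """Multiply a block-sparse matrix by a dense matrix.

    blocks: array of shape (len(plan), block, block), stored in plan order.
    dense:  array of shape (n_block_cols * block, n).
    """
    out = np.zeros((n_block_rows * block, dense.shape[1]),
                   dtype=np.result_type(blocks, dense))
    for values, (r, c) in zip(blocks, plan):
        # Only non-zero blocks contribute; zero blocks are never touched.
        out[r * block:(r + 1) * block] += values @ dense[c * block:(c + 1) * block]
    return out
```

Only the non-zero blocks are stored and multiplied, so both memory use and arithmetic scale with the number of non-zero blocks rather than with the full matrix size.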

Our partner Aleph Alpha used this operation to demonstrate a sparsified variant of its commercial Luminous chatbot at SC22 in Texas.

Enhancements for PopVision System Analyser

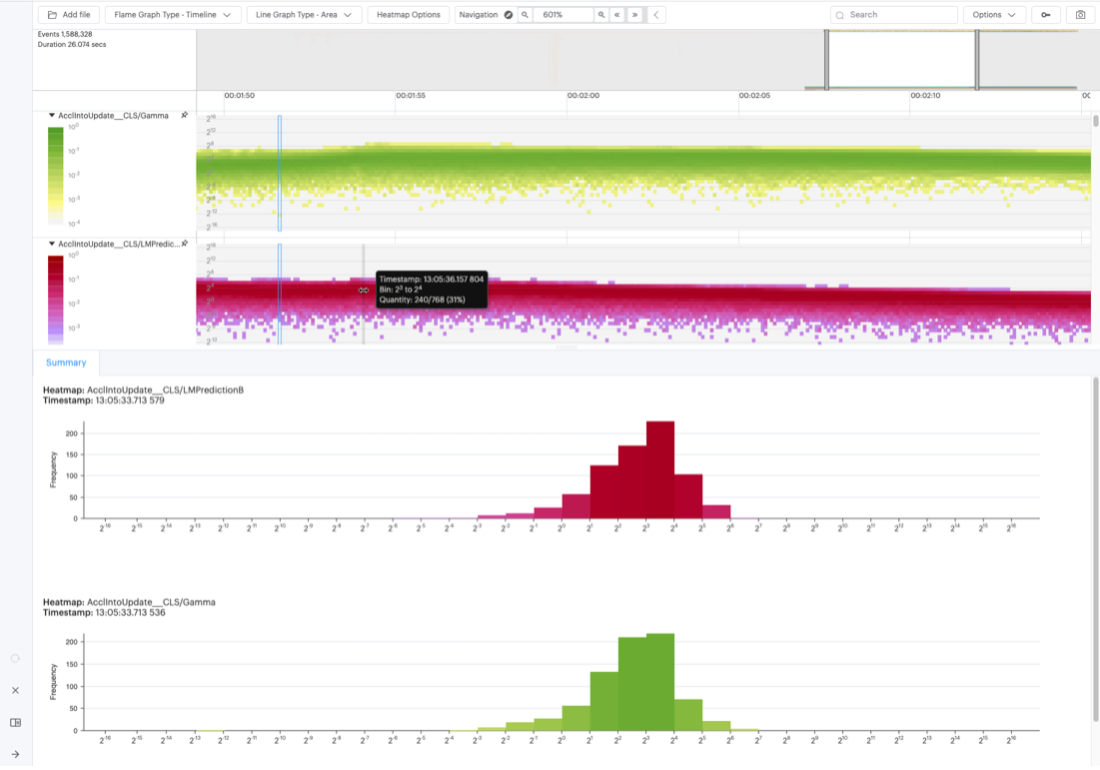

The PopVision System Analyser can now display heatmaps and histograms of selected tensor data. You export this data from your Poplar program to a PVTI file, and can then use it to analyse the numerical stability of your model.

Tensor data is collected into a time-series of histogram bins, with each bin’s occupancy plotted as a coloured bar on the System Analyser trace at the moment it was sampled. Clicking on a tensor value within the heatmap displays a histogram of the tensor samples at that point in time.

System Analyser chart showing two heatmaps of tensors, and the associated histograms at a moment in time.
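The data behind such a heatmap can be pictured as a two-dimensional array of bin counts, one row per sample time. The NumPy sketch below shows that structure only; it does not write PVTI files or use any PopVision API, and the function name `tensor_histogram_series` and the bin edges in the usage example are assumptions made for illustration.

```python
import numpy as np


def tensor_histogram_series(samples, bin_edges):
    """Build a (n_samples, n_bins) array of histogram counts.

    samples:   sequence of arrays, one tensor snapshot per sample time.
    bin_edges: increasing, finite edges applied to the absolute values.
    """
    rows = []
    for tensor in samples:
        magnitudes = np.abs(np.asarray(tensor, dtype=np.float64)).ravel()
        # Values outside the outermost edges are ignored by np.histogram.
        counts, _ = np.histogram(magnitudes, bins=bin_edges)
        rows.append(counts)
    return np.stack(rows)


# Usage: two snapshots of a tensor, three magnitude bins.
edges = np.array([0.0, 1e-2, 1.0, 100.0])
series = tensor_histogram_series(
    [np.array([0.5, -0.5, 2.0]), np.array([1e-3])], edges)
```

Each row of `series` corresponds to one column of coloured bars in the heatmap, and selecting a row gives the histogram for that moment in time.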

Operating system support

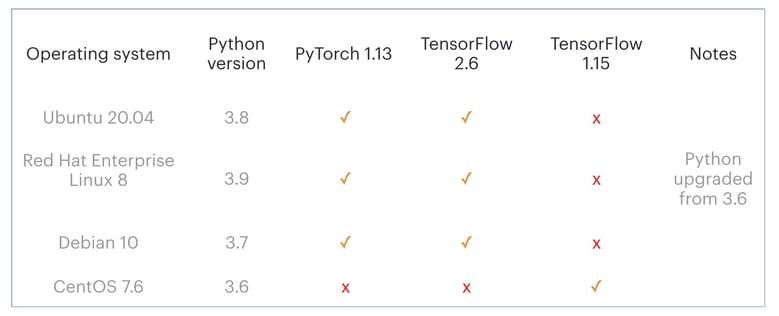

We have made some changes to the set of operating systems for which the Poplar SDK is built, primarily to enable support for PyTorch 1.13, which requires Python 3.7 or higher.

Full details on operating system support are in the release notes, with the main changes summarised in the overview section. PyTorch and TensorFlow support in Poplar SDK 3.1 is summarised in the table below.

Graphcore Model Garden additions

Graphcore’s Model Garden—a repository of IPU-ready ML applications including computer vision, NLP, graph neural networks and more—is a stand-out resource that has proved popular with developers looking to easily browse, filter and access reproducible code.

As always, we have continued to update the content of our Model Garden and associated GitHub repositories. Since the release of Poplar SDK 3.0 in September, a number of new models have been added to the Model Garden, as summarised below:

The popular latent diffusion model for generative AI with support for Text-to-Image, Image-to-Image and inpainting.

A hybrid GNN/Transformer for training Molecular Property Prediction using IPUs on the PCQM4Mv2 dataset. Winner of the Open Graph Benchmark Large-Scale Challenge (OGB-LSC), PCQM4Mv2 - Predicting a quantum property of molecular graphs category. Run on Paperspace: inference / training.

Sharded knowledge graph embedding (KGE) for link-prediction training on IPUs using the WikiKG90Mv2 dataset. Winner of the Open Graph Benchmark Large-Scale Challenge (OGB-LSC), WikiKG90Mv2 - Predicting missing facts in a knowledge graph category.

Sharded knowledge graph embedding (KGE) for link-prediction training on IPUs, adopting the same approach used for the OGB-LSC.

A small, efficient, fast and light Transformer model based on the BERT architecture.

Temporal Graph Networks is a dynamic GNN model for training on the IPU. This is now available on the PyTorch framework.

Benchmark performance results for many models in our Model Garden across multiple frameworks and multiple platforms will shortly be updated for SDK 3.1 and published on the Performance Results page of our website.