In recent years, big data and machine intelligence have been used increasingly to support urban safety, city governance and other real-world challenges. This implementation of YOLO (You Only Look Once) for object detection, part of Sensoro's new ESG solution, is designed for environmental and sustainability use cases, including ecosystem protection, citizen safety and environmental health, animal welfare, and climate emergency response.

Object recognition is central to the core algorithm of this implementation. It can be used, for example, to detect suspects in illegal river fishing cases. Most use cases require front-end object detection results.

Deep-learning-based object detection is fundamental to many computer vision applications and has advanced significantly in recent years. Since the publication of R-CNN, a series of algorithms have made remarkable progress in both accuracy and efficiency. Among them, YOLO, launched in 2015, has been widely adopted thanks to its high efficiency. Through rapid iteration, YOLO has become a benchmark for balancing efficiency and accuracy. With the support of a large community, pre-trained models with different parameter counts have been open sourced, letting users choose their own trade-off between accuracy and efficiency and making YOLO hugely popular for commercial implementations.

Flexible and rapid deployment with Graphcore's underlying technology

When running cutting-edge deep learning algorithms, efficient hardware and supporting software optimization matter more than ever. Graphcore's IPU-M2000 is a dedicated platform for machine intelligence, providing 260GB of Exchange Memory and up to 1 petaFLOPS of computing power.

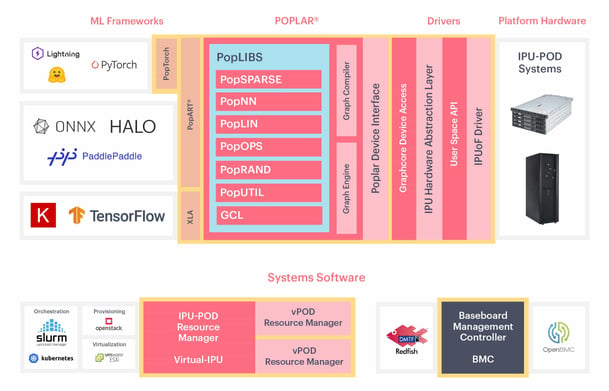

The Poplar SDK has been designed concurrently with the hardware to harness the full capacity of the IPU. The Poplar software stack gives developers access to a large number of operators that are highly optimized for the IPU architecture. Poplar contains PopART (Poplar Advanced Runtime) and XLA (Accelerated Linear Algebra), which give developers a convenient and familiar interface to popular deep learning frameworks that can run on IPU systems.

Figure 1: Graphcore Poplar Software Ecosystem

ONNX (Open Neural Network Exchange) is one of the most popular open-source AI standard formats, supporting the rapid implementation of deep learning models by enabling interoperability among different frameworks. Thanks to PopART, ONNX models can easily be run on IPU systems. Beyond object detection, the other deep learning models in Sensoro's ESG solution can also be run easily using this framework.

End-to-end Design

In addition to model inference, the YOLO algorithm requires image pre-processing before inference and non-max suppression on the inference results. To choose a specific model, we considered the accuracy requirements of the task and selected YOLOv3 with an input size of 416x416 and 65M parameters.
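To make the post-processing step concrete, here is a minimal sketch of greedy non-max suppression in plain Python. It is an illustration rather than Sensoro's implementation: the corner-format boxes, the function names, and the 0.5 IoU threshold are all assumptions chosen for the example.

```python
from typing import List, Sequence, Tuple

# Assumed box format for this sketch: (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    boxes: Sequence[Box],
    scores: Sequence[float],
    iou_threshold: float = 0.5,  # assumed value, not taken from the article
) -> List[int]:
    """Return indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap a higher-scoring kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
    scores = [0.9, 0.8, 0.7]
    print(non_max_suppression(boxes, scores))
```

In practice this would typically be applied per class after discarding low-confidence detections, and a vectorized version would be preferable when there are many candidate boxes.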

Figure 2: End-to-End YOLO Detection Task Flow

Figure 2 shows the end-to-end YOLO task, which comprises five main stages: raw input data loading, image pre-processing, grouping images into batches, object detection model inference, and post-processing of the inference results. Of the five stages, the pink boxes correspond to stages performed by the CPU, while the yellow box is performed by the IPU.

Across the end-to-end workflow, the pre-processing and post-processing stages are computationally intensive, so they are parallelized to improve efficiency. The inference stage uses the YOLO object detection model with a batch size of 4.
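The flow described above can be sketched as a small pipeline: pre-process images in parallel, group them into batches of 4, run inference per batch, then post-process the outputs in parallel. The function names (`batched`, `run_pipeline`) and the `preprocess`, `infer_batch` and `postprocess` callables are hypothetical placeholders, and the worker count is an assumption. The sketch shows only the structure, not the actual service.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group items into lists of batch_size; the last batch may be smaller."""
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def run_pipeline(
    raw_images: Iterable,
    preprocess: Callable,    # hypothetical: one raw image -> model input
    infer_batch: Callable,   # hypothetical: list of inputs -> list of outputs
    postprocess: Callable,   # hypothetical: one model output -> detections
    batch_size: int = 4,     # batch size stated in the article
    workers: int = 4,        # assumed worker count
) -> List:
    results: List = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order while running tasks concurrently.
        preprocessed = pool.map(preprocess, raw_images)
        for batch in batched(preprocessed, batch_size):
            outputs = infer_batch(batch)  # the accelerator stage
            results.extend(pool.map(postprocess, outputs))
    return results


if __name__ == "__main__":
    # Stand-in stages operating on integers instead of images.
    print(run_pipeline(range(6), lambda x: x * 2,
                       lambda b: [v + 1 for v in b], lambda y: y * 10))
```

Threads are used here for brevity. For CPU-bound Python code, a process pool or image libraries that release the GIL would be needed to see a real speed-up.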

In the end-to-end load test, we used raw images with 1920 x 1080 resolution to match the default resolution of Sensoro smart cameras.
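A 1920 x 1080 frame has to be reduced to the 416x416 model input. The article does not say how this is done. One common approach for YOLO models is "letterbox" resizing, which preserves the aspect ratio and pads the remainder. The sketch below assumes that approach and computes only the geometry; `letterbox_params` and `to_source_coords` are hypothetical helper names.

```python
from typing import Tuple


def letterbox_params(src_w: int, src_h: int, dst: int = 416) -> Tuple[float, int, int, int, int]:
    """Scale and padding needed to fit a src_w x src_h image into dst x dst.

    Assumes aspect-ratio-preserving (letterbox) resizing, which the
    article does not confirm.
    """
    scale = min(dst / src_w, dst / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_x, pad_y = (dst - new_w) // 2, (dst - new_h) // 2
    return scale, new_w, new_h, pad_x, pad_y


def to_source_coords(x: float, y: float, scale: float, pad_x: int, pad_y: int) -> Tuple[float, float]:
    """Map a point from model-input coordinates back to the source image."""
    return (x - pad_x) / scale, (y - pad_y) / scale


if __name__ == "__main__":
    scale, new_w, new_h, pad_x, pad_y = letterbox_params(1920, 1080)
    print(new_w, new_h, pad_x, pad_y)
    print(to_source_coords(416, 325, scale, pad_x, pad_y))
```

Under this assumption, a 1920 x 1080 frame is scaled to 416 x 234 with 91 pixels of padding above and below, and detected box coordinates are mapped back to the original frame with the inverse transform during post-processing.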

Experiments

In a peak inference test using simulated data, which excludes the bottleneck caused by pre-processing and post-processing, the IPU-M2000-based test platform achieved a throughput of 660 images per second.

In the end-to-end load test, we used an AMD EPYC 7742 CPU clocked at 2.3GHz, JPEG images at 1920 x 1080 resolution to match the default resolution of Sensoro smart cameras, and Apache Benchmark to send 100,000 requests. We measured a throughput of 450 QPS with an average latency of 250ms.
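The two throughput figures can be related with some simple arithmetic. The sketch below computes the ratio of end-to-end to peak throughput and, using Little's law (in-flight requests = throughput x mean latency), the number of concurrent requests implied by the reported numbers. The article does not state the concurrency setting used with Apache Benchmark, so that value is an estimate that assumes steady-state load. It also assumes one image per request.

```python
def pipeline_efficiency(end_to_end_qps: float, peak_images_per_s: float) -> float:
    """Fraction of peak inference throughput retained end to end."""
    return end_to_end_qps / peak_images_per_s


def implied_in_flight(qps: float, mean_latency_s: float) -> float:
    """Little's law: average number of requests in the system at steady state."""
    return qps * mean_latency_s


# Figures reported in the article.
PEAK_IMAGES_PER_S = 660
END_TO_END_QPS = 450
MEAN_LATENCY_S = 0.250
TOTAL_REQUESTS = 100_000

efficiency = pipeline_efficiency(END_TO_END_QPS, PEAK_IMAGES_PER_S)
in_flight = implied_in_flight(END_TO_END_QPS, MEAN_LATENCY_S)
test_duration_s = TOTAL_REQUESTS / END_TO_END_QPS

print(f"end-to-end / peak: {efficiency:.1%}")
print(f"implied requests in flight: {in_flight:.1f}")
print(f"approximate test duration: {test_duration_s:.0f} s")
```

On these numbers, the end-to-end service retains roughly 68% of the peak inference throughput, and the remainder is the gap attributable to the CPU-side stages and request handling.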

Summary of Results

In this experiment, end-to-end YOLO inference reached 450 QPS, against a peak of 660 images per second for IPU inference alone. In the many use cases where various types of detection algorithms are implemented, we can provide developers with cost-effective compute. In subsequent iterations, we will continue to narrow the gap between end-to-end efficiency and IPU inference performance. Moreover, by designing the corresponding operators in Poplar, IPU compute can also be used to reduce training time and improve overall efficiency.