The gap between AI innovation in research labs and widespread commercial adoption has probably never been shorter. Transformer models, first proposed in 2017, now underpin multiple billion-dollar startups spanning copywriting, translation, chatbots and beyond.

But while large language models and diffusion-based image generation have captured the public imagination, one of the most eagerly anticipated developments in terms of commercial potential has been Graph Neural Networks (GNNs), described by Yann LeCun, Meta's chief AI scientist, as “a major conceptual advance” in AI.

GNNs allow artificial intelligence practitioners to work with data that lacks a regular structure, or does not lend itself to being described by one. Examples include the composition of molecules, the organisation of social networks, and the movement of people and vehicles within cities.

Each of these areas, and many more, is associated with complex, high-value applications such as drug discovery, medical imaging, product and service recommendation, and logistics.

One reason GNNs have taken a little longer to reach maturity than other areas of artificial intelligence is that the computation behind them has proved problematic for legacy processor architectures, principally CPUs and GPUs.

Over the past year, however, huge progress has been made using Graphcore Intelligence Processing Units (IPUs), thanks to a number of architectural characteristics that make them extremely capable at running GNN workloads. Chief among these are:

- Gather-scatter, the process by which nodes exchange information, is essentially a massive communication operation that moves many small pieces of data around. Graphcore’s large on-chip SRAM allows the IPU to perform such operations much faster than other processor types.

- The ability to handle small matrix multiplications efficiently. These are common in Graph ML applications such as drug discovery but are hard to parallelise on GPUs, which favour large matmuls. The IPU excels at such computations thanks to its ability to run truly independent operations across each of its 1,472 processor cores.
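To make the gather-scatter pattern concrete, here is a minimal, framework-free sketch of one round of message passing with sum aggregation. It is illustrative only: the function name and the choice of sum aggregation are assumptions, and real GNN libraries run these steps as batched tensor operations rather than Python loops.

```python
from typing import List, Tuple


def message_passing_step(
    features: List[List[float]],
    edges: List[Tuple[int, int]],
) -> List[List[float]]:
    """One round of sum-aggregation message passing (illustrative sketch).

    features[i] is the feature vector of node i; edges holds directed
    (src, dst) pairs.
    """
    dim = len(features[0])
    aggregated = [[0.0] * dim for _ in features]
    # Gather: each edge reads the feature vector of its source node.
    messages = [features[src] for src, _ in edges]
    # Scatter-add: each message is accumulated into its destination node.
    for (_, dst), msg in zip(edges, messages):
        for k in range(dim):
            aggregated[dst][k] += msg[k]
    return aggregated
```

Every edge reads and writes a small vector at an essentially arbitrary location, so this step is dominated by data movement rather than arithmetic.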

So impressive are IPUs at running GNNs that Dominique Beaini, Research Team Lead at drug-discovery company Valence Discovery, said he was “shocked to see the speed improvements over traditional methods”.

Getting the GNN advantage - today

The commercial use of Graph Neural Networks ranges from early-stage, AI-centric startups that see the possibility of disrupting entire industries to longstanding businesses keen to maintain a competitive advantage.

As well as demonstrating outstanding performance on GNNs, Graphcore is at the forefront of offering deployment-ready models for both training and inference.

Many of these are available to run in Paperspace Gradient Notebooks – allowing developers to get hands-on experience with GNNs on Graphcore IPUs. For new users, Paperspace offers a six-hour free trial of IPUs, with cost-effective paid tiers for those who want to take their work further.

Graphcore is already working with a wide range of commercial customers and AI research organisations to demonstrate the power of GNNs running on IPUs.

Below is a summary of some of the GNN innovations and deployments already underway.

Drug discovery and Knowledge Graph Completion

The growing popularity of Graph Neural Networks has naturally led to the emergence of forums where modelling techniques and compute platforms can be comparatively evaluated.

One of the most highly regarded is the annual NeurIPS Open Graph Benchmark Large-Scale Challenge (OGB-LSC). Two of its tracks test molecular property prediction and knowledge graph completion.

In 2022, Graphcore won both categories on our debut submission, beating competition from Microsoft, NVIDIA, Baidu, Tencent and others.

🏆 1st Place: Predicting a quantum property of molecular graphs 🏆

PCQM4Mv2 defines a molecular property prediction problem that involves building a Graph Neural Network to predict the HOMO-LUMO energy gap (a quantum chemistry property) given a dataset of 3.4 million labelled molecules.

This kind of graph prediction problem crops up in many scientific areas such as drug discovery, computational chemistry and materials science, but it can be painfully slow to solve using conventional methods and may even require costly lab experiments.
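Graph-level tasks such as predicting the HOMO-LUMO gap end with a "readout" that pools a molecule's node (atom) representations into one vector and maps it to a single number. The sketch below assumes sum pooling and a single linear output layer with hypothetical, untrained weights; GPS++ itself is a much larger model, described in the technical report.

```python
from typing import List


def predict_graph_property(
    node_embeddings: List[List[float]],
    weights: List[float],
    bias: float,
) -> float:
    """Sum-pool node embeddings into one graph vector, then apply a linear layer.

    Sum pooling is permutation invariant: the prediction does not depend on
    the order in which atoms are listed.
    """
    dim = len(weights)
    pooled = [sum(node[k] for node in node_embeddings) for k in range(dim)]
    return sum(w * x for w, x in zip(weights, pooled)) + bias
```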

Graphcore partnered with Valence Discovery, leaders in molecular machine learning for drug discovery, and Mila (Quebec Artificial Intelligence Institute) to build the GPS++ submission. The combination of industry-specific knowledge, research expertise and IPU compute ensured that Graphcore and partners were able to deliver a category-leading entry.

Read more about GPS++ in our technical report.

Try GPS++ for yourself on Paperspace: (Training) (Inference)

🏆 1st Place: Predicting missing facts in a knowledge graph 🏆

WikiKG90Mv2 is a dataset extracted from Wikidata, the knowledge graph used to power Wikipedia. In many instances, the information about relationships between entities is incomplete. Knowledge graph completion is the process of inferring those missing connections.

Standard techniques for training knowledge graph completion models struggle to cope with dataset scale, as the number of trainable parameters grows with the number of entities in the database.

In developing Graphcore’s distributed training scheme, called BESS (Balanced Entity Sampling and Sharing), researchers made use of the IPU’s large in-processor memory and high memory bandwidth to train hundreds of models to convergence, allowing them to optimise different combinations of scoring and loss functions.
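Both the scaling problem and the idea of comparing scoring functions can be illustrated with a toy knowledge graph embedding model. The sketch below uses two well-known scoring functions from the literature, TransE and DistMult, purely as examples; it is not BESS, and it does not claim these are the exact functions Graphcore evaluated. Function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def make_embeddings(num_items: int, dim: int, seed: int = 0) -> List[List[float]]:
    # One trainable vector per entity (or relation). This table is why the
    # parameter count grows linearly with the number of entities.
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(num_items)]


def transe_score(h: Sequence[float], r: Sequence[float], t: Sequence[float]) -> float:
    # TransE: a true triple should satisfy h + r ≈ t, so score = -||h + r - t||_2.
    return -math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def distmult_score(h: Sequence[float], r: Sequence[float], t: Sequence[float]) -> float:
    # DistMult: trilinear product sum_k h_k * r_k * t_k.
    return sum(hi * ri * ti for hi, ri, ti in zip(h, r, t))
```

With d-dimensional embeddings, the tables alone hold (entities + relations) × d parameters, so a graph with twice as many entities needs roughly twice the memory before any training starts.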

Read more about our entry and the use of BESS in our Knowledge Graph deep-dive.

Try WikiKG90Mv2 for yourself on Paperspace: (Training) (Build entity mapping database)

Predicting molecular structure

Pacific Northwest National Laboratory (PNNL) is part of the US Department of Energy’s network of research facilities, working across a range of fields, including chemistry, earth and life sciences and sustainable energy.

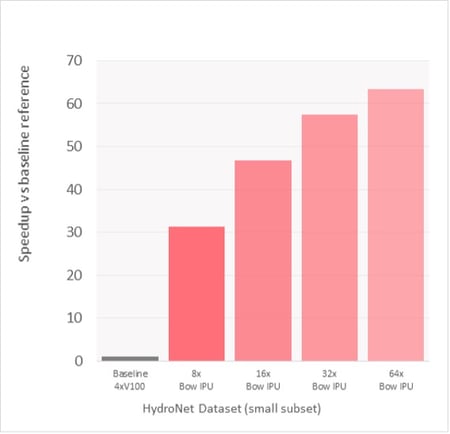

In collaboration with a team from Graphcore, scientists at PNNL looked at using the SchNet GNN to predict how a particular molecule will behave (or what use it might be put to) based on the structure of its constituent atoms. In this case, the model was trained on the HydroNet dataset of water molecule structures, but the same technique could in principle be applied elsewhere, including drug discovery.

SchNet has proven itself capable of handling the notoriously difficult task of modelling atomic interactions. Still, training SchNet on the vast HydroNet dataset is computationally challenging: training on just 10% of the dataset took 2.7 days using four NVIDIA V100 GPUs.
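SchNet works directly on interatomic distances, expanding each distance into a set of Gaussian radial basis features before its continuous-filter convolutions. The sketch below shows only that featurisation step. The number of centres, the distance range and gamma are illustrative assumptions, not the settings used by PNNL or Graphcore.

```python
import math
from typing import List, Sequence, Tuple

Coord = Tuple[float, float, float]


def pairwise_distances(positions: Sequence[Coord]) -> List[List[float]]:
    """Euclidean distance between every pair of atoms."""
    return [[math.dist(a, b) for b in positions] for a in positions]


def rbf_expand(
    distance: float,
    num_centres: int = 50,
    max_distance: float = 5.0,
    gamma: float = 10.0,
) -> List[float]:
    """Expand one distance into Gaussian features exp(-gamma * (d - mu_k)^2).

    Centres mu_k are evenly spaced from 0 to max_distance (assumed values).
    """
    step = max_distance / (num_centres - 1)
    centres = [k * step for k in range(num_centres)]
    return [math.exp(-gamma * (distance - mu) ** 2) for mu in centres]
```

The feature that peaks is the one whose centre sits closest to the actual distance, giving the network a smooth, differentiable encoding of molecular geometry.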

When PNNL researchers ran the same training exercise on Graphcore IPUs, they saw a dramatic speedup, with a Bow Pod16 taking just 1.4 hours to complete the same task.

Based on Paperspace’s published pricing for the two systems, those training times would have cost $596 on GPUs, compared to $37 for the Bow Pod16.
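The time and cost figures above can be turned into ratios with a few lines of arithmetic. The only inputs are the numbers quoted in this section; the hourly rates are simply implied by dividing cost by time, not taken from Paperspace's price list.

```python
GPU_HOURS = 2.7 * 24   # 2.7 days on four NVIDIA V100 GPUs
IPU_HOURS = 1.4        # Bow Pod16
GPU_COST_USD = 596
IPU_COST_USD = 37

speedup = GPU_HOURS / IPU_HOURS
cost_ratio = GPU_COST_USD / IPU_COST_USD
gpu_rate = GPU_COST_USD / GPU_HOURS   # implied $/hour, derived not quoted
ipu_rate = IPU_COST_USD / IPU_HOURS   # implied $/hour, derived not quoted

print(f"{speedup:.1f}x faster, {cost_ratio:.1f}x cheaper")
print(f"implied rates: ${gpu_rate:.2f}/h (GPU) vs ${ipu_rate:.2f}/h (IPU)")
```

The two ratios differ: the Bow Pod16 run was roughly 46x faster but about 16x cheaper, because its implied hourly rate is higher than that of the four-GPU system.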

Computational chemistry is in its infancy, but the promise of modelling increasingly complex atomic interactions to predict their behaviours, and ultimately their utility, is huge.

Continued advancement will require not only further exploration of GNNs, but also the kind of cost optimisation made possible by Graphcore IPUs.

Try SchNet for yourself on Paperspace: (Training - preview)

Social networks and recommendation systems

As head of Graph Learning Research at Twitter, Michael Bronstein well understands the real-world manifestation of large, complex graph networks.

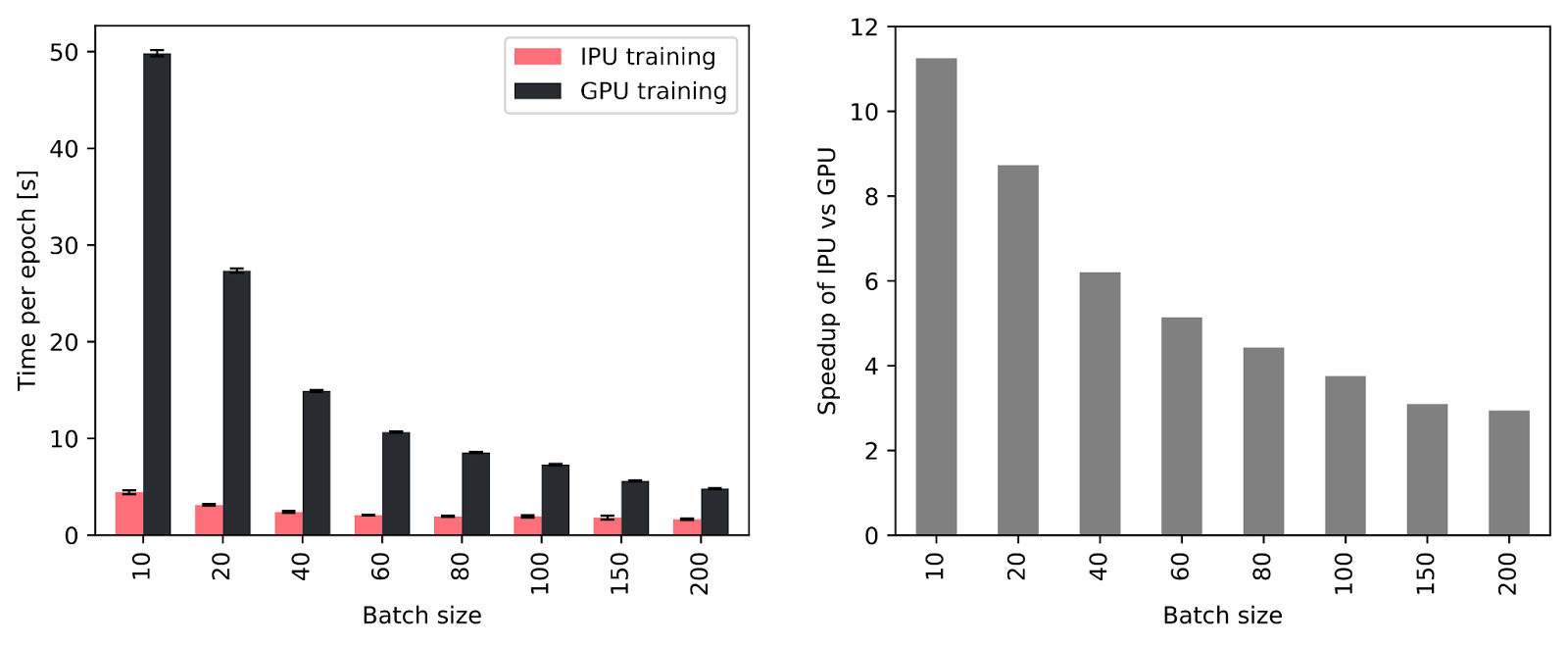

In his work with Graphcore IPUs, Professor Bronstein set out to address the fact that most GNN architectures assume a fixed graph. In many applications, however, the underlying system is dynamic, meaning that the graph changes over time.

This is true in social networks and recommendation systems, where the graphs describing user interaction with content can change in real time.

One way of dealing with this is to use Temporal Graph Networks (TGNs).
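A key idea in TGNs is a memory vector per node that is updated whenever a timestamped event involving that node arrives, so the model's view of the graph evolves with the stream of interactions. The sketch below is a heavily simplified illustration of that event-driven memory: it replaces TGN's learned message function and GRU-based memory updater with a fixed exponential moving average, and the class and parameter names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


class NodeMemory:
    """Toy per-node memory updated by timestamped interaction events."""

    def __init__(self, dim: int, decay: float = 0.5) -> None:
        self.dim = dim
        self.decay = decay
        # Unseen nodes start with an all-zero memory vector.
        self.memory: Dict[int, List[float]] = defaultdict(lambda: [0.0] * dim)
        self.last_update: Dict[int, float] = {}

    def process_event(self, src: int, dst: int, t: float, features: List[float]) -> None:
        # Both endpoints of an interaction are updated. Here the "message" is
        # just the event's feature vector, blended in with a moving average
        # (a stand-in for TGN's learned message and update functions).
        for node in (src, dst):
            old = self.memory[node]
            self.memory[node] = [
                self.decay * m + (1.0 - self.decay) * f for m, f in zip(old, features)
            ]
            self.last_update[node] = t
```

Because each event touches only a couple of small vectors, processing events in small batches keeps the model close to real time, which is the regime where fast on-chip memory access matters most.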

Professor Bronstein and his fellow researchers looked at running TGNs with small batch sizes, taking advantage of fast access to the IPU’s on-chip memory. Throughput on the IPU was 11x higher than on a state-of-the-art NVIDIA A100 GPU, and even at larger batch sizes the IPU was still around 3x faster.

Reflecting on the results, Professor Bronstein observed that many AI users may be missing out on huge performance gains because they are wedded to GPUs.

“In the research community, in particular, the availability of cloud computing services abstracting out the underlying hardware leads to certain ‘laziness’ in this regard. However, when it comes to implementing systems working on large-scale datasets with real-time latency requirements, hardware considerations cannot be taken easily anymore.”

Try TGN for yourself on Paperspace: (Training)

Journey time prediction and logistics

National University of Singapore

Researchers from the National University of Singapore decided to use Graph Neural Networks to tackle the perennial problem of predicting road journey times in cities with complex road systems.

In this case, the nodes of the graph represented individual road segments, while the edges joining them represented the strength of the relationship between those segments, with directly adjoining roads having the strongest connection.
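One simple way to encode "directly adjoining roads have the strongest connection" is to weight edges by the number of hops between segments. The sketch below assumes a hypothetical weighting of 1 / hops up to a cutoff; the NUS team's actual edge weighting is not described here, and the function names are illustrative.

```python
from collections import deque
from typing import Dict, List, Tuple


def hop_distances(adjacent: Dict[int, List[int]], start: int, max_hops: int) -> Dict[int, int]:
    """Breadth-first search returning hop counts from start, up to max_hops."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if dist[node] == max_hops:
            continue  # do not expand beyond the cutoff
        for nxt in adjacent.get(node, []):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


def segment_weights(adjacent: Dict[int, List[int]], max_hops: int = 2) -> Dict[Tuple[int, int], float]:
    """Edge weight 1/hops between segments within max_hops (assumed scheme).

    Directly adjoining segments (1 hop) get the maximum weight of 1.0.
    """
    weights: Dict[Tuple[int, int], float] = {}
    for seg in adjacent:
        for other, hops in hop_distances(adjacent, seg, max_hops).items():
            if hops > 0:
                weights[(seg, other)] = 1.0 / hops
    return weights
```

For a straight road split into segments 0-1-2-3, segment 0 is linked to 1 with weight 1.0, to 2 with weight 0.5, and not at all to 3.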

Traffic conditions, of course, change over time, so the NUS team added a further, temporal dimension to their GNN training to account for this crucial variable. The result was a Spatio-Temporal Graph Convolutional Network (STGCN).

The researchers then added a further layer of complexity with a Mixture of Experts (MoE) approach, employing multiple STGCNs and a gating network that selects the best one to use in any given situation.
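The gating mechanism can be sketched independently of the experts themselves: a gating network produces one score per expert, a softmax turns the scores into weights, and then either the top-scoring expert is used or the experts' outputs are blended. In the sketch below, plain numbers stand in for the STGCN experts' predictions and the gate's scores; the function names and the top-1 option are illustrative assumptions, not the NUS implementation.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mixture_of_experts(
    expert_outputs: Sequence[float],
    gate_scores: Sequence[float],
    top1: bool = True,
) -> float:
    weights = softmax(gate_scores)
    if top1:
        # Hard routing: use only the expert the gate rates highest.
        best = max(range(len(weights)), key=weights.__getitem__)
        return expert_outputs[best]
    # Soft routing: weighted average of all expert predictions.
    return sum(w * y for w, y in zip(weights, expert_outputs))
```

The gate and the experts perform different kinds of computation, yet both must run for every prediction, which is the heterogeneity Professor Tham refers to below.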

The combined STGCN and MoE approach turned out to be far better suited to Graphcore IPUs than to other types of processor, according to Chen-Khong Tham, Associate Professor at NUS’ Department of Electrical and Computer Engineering: “It would probably be less straightforward to have a gating network and an expert network running on the GPU at the same time. For that, you definitely need an IPU, since the operations in the gating network are different from the operations in the expert neural network.”

Using Graphcore IPUs, the NUS team achieved speedups of 3-4x compared to state-of-the-art GPUs, a level of performance that Professor Tham believes could deliver one of the holy grails of his field: real-time traffic prediction for an entire city.

Read more about Improving journey time predictions with GNNs on the Graphcore IPU

Get started with GNNs for IPUs in the cloud

Using pre-built GNN notebooks for IPUs in the Paperspace cloud is the quickest and easiest way to start exploring graph neural networks on Graphcore IPUs.

Developers can take advantage of Paperspace’s six-hour free IPU trial, while anyone looking to build a commercial application or simply needing more compute time can opt for one of Paperspace’s paid tiers.

A selection of other Graphcore-optimised GNNs is also available. To see the full list, visit the Graphcore Model Garden and select ‘GNN’ from the category list.