Graph Neural Networks (GNNs) are proving their utility across an ever-expanding range of applications, just as Graphcore’s IPU systems are demonstrating their standout capability for running these cutting-edge models.

One of the latest challenges to which GNNs are being applied is the notoriously complex task of predicting traffic journey times in congested cities.

Researchers at the National University of Singapore (NUS) are using spatio-temporal graph convolutional networks with a Mixture of Experts (MoE) approach to deliver fast, accurate, large-scale journey time predictions.

Training their models on Graphcore IPUs is delivering a significant speedup over both CPUs and GPUs, accelerating progress towards some of the field’s holy grails.

“If you want to have real-time traffic speed predictions for an entire city, as seems possible using a graph convolutional neural network, you can harness the speed-up on the IPU for near real-time traffic state predictions,” says research leader Chen-Khong Tham, Associate Professor at NUS’ Department of Electrical and Computer Engineering.

Traffic graph

Helping urban transportation to run more efficiently can yield economic benefits, improve the environment, and improve quality of life for residents – benefits reflected in the UN's Sustainable Development Goals for transport systems.

On a day-to-day basis, accurately predicting travel times helps commuters, couriers, first responders and others on the road network to better plan their journeys.

Where AI has been employed previously, it has typically been fed historical data on traffic delays across specific sections of road – a classical time series prediction task. However, this approach is relatively simplistic and omits some important factors that influence traffic movement, including conditions throughout the wider road network.

“The travel time on a particular road segment actually depends on the roads around that segment,” says Professor Tham. This means taking into account a spatial dimension, in addition to the time-series prediction.

The NUS team’s approach models the road network as a graph, where nodes represent individual road sections and edges show the strength of the relationship between them, with adjoining roads having the strongest connections.

Input data consists of snapshots of traffic information at the road segments of the road network taken over time, and the road network graph.
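To make the graph and its input data concrete, here is a minimal sketch of the two inputs described above. It assumes edge strength falls off with distance through a thresholded Gaussian kernel, which is one common choice for road graphs and not necessarily what the NUS team uses. The function name, the four-segment network, the distances and the speeds are all made up for illustration.

```python
import math

def build_adjacency(distances, num_segments, sigma=1.0, threshold=0.1):
    """Return a symmetric weighted adjacency matrix for the road graph.

    distances maps a pair of segment indices (i, j) to a distance between them.
    Closer segments get a weight nearer 1; weights under `threshold` are dropped.
    The Gaussian kernel is an assumption made for this sketch.
    """
    adjacency = [[0.0] * num_segments for _ in range(num_segments)]
    for (i, j), d in distances.items():
        weight = math.exp(-(d ** 2) / (sigma ** 2))
        if weight >= threshold:
            adjacency[i][j] = adjacency[j][i] = weight
    return adjacency

# Hypothetical network of four road segments; the distances are invented.
distances = {(0, 1): 0.5, (1, 2): 0.5, (2, 3): 0.5, (0, 2): 1.0, (0, 3): 2.0}
adjacency = build_adjacency(distances, num_segments=4)

# Traffic snapshots over time: snapshots[t][i] is the speed (km/h)
# observed on road segment i at time step t. Values are invented.
snapshots = [
    [52.0, 40.0, 35.0, 60.0],
    [50.0, 38.0, 33.0, 61.0],
    [47.0, 35.0, 30.0, 59.0],
]

for row in adjacency:
    print([round(w, 3) for w in row])
```

Adjoining segments such as 0 and 1 end up with the strongest edges, while distant pairs such as 0 and 3 fall below the threshold and are left unconnected.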

The underlying machine learning network architecture used is the Spatio-Temporal Graph Convolutional Network (STGCN) proposed by Yu, Yin and Zhu in 2018.

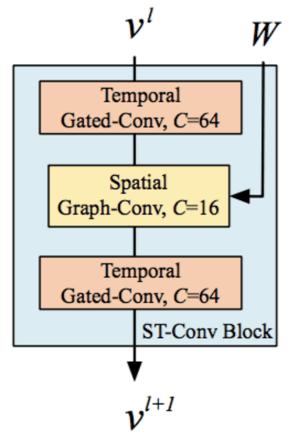

The "sandwich" of temporal-spatial-temporal processing layers

Spatial and temporal aspects are combined in what Professor Tham describes as a “sandwich” of pre-processing layers. The first layer, which extracts temporal features, is fed into a second layer that extracts spatial features. This, in turn, is passed on to another temporal layer.

The output of the three-layer sandwich is then fed into a standard multi-layer neural network to generate speed predictions for different road segments at various intervals in the future.
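The temporal-spatial-temporal ordering can be illustrated with a heavily simplified sketch. It is not the STGCN implementation: the published model uses learned, gated temporal convolutions and a spectral graph convolution, whereas this sketch assumes a fixed averaging kernel over time and a row-normalised neighbour average over space, with no learned weights or nonlinearities. All function names and data are hypothetical, and the final prediction network is omitted.

```python
def temporal_conv(x, kernel):
    """Slide a 1-D kernel along the time axis, independently per road segment.

    x is a list of T snapshots, each a list of N values. The output has
    T - len(kernel) + 1 snapshots.
    """
    k = len(kernel)
    num_segments = len(x[0])
    return [
        [sum(kernel[m] * x[t + m][i] for m in range(k)) for i in range(num_segments)]
        for t in range(len(x) - k + 1)
    ]

def spatial_conv(x, adjacency):
    """Mix each segment's value with its neighbours' values.

    Uses the adjacency matrix with self-loops added, normalised so that
    every row sums to 1 (a simplification chosen for this sketch).
    """
    n = len(adjacency)
    with_loops = [
        [adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    row_sums = [sum(row) for row in with_loops]
    norm = [[with_loops[i][j] / row_sums[i] for j in range(n)] for i in range(n)]
    return [
        [sum(norm[i][j] * snap[j] for j in range(n)) for i in range(n)]
        for snap in x
    ]

def sandwich(x, adjacency, kernel):
    """Temporal layer, then spatial layer, then a second temporal layer."""
    h = temporal_conv(x, kernel)
    h = spatial_conv(h, adjacency)
    return temporal_conv(h, kernel)

# Hypothetical chain of three road segments: 0 - 1 - 2.
adjacency = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 0.0],
]
# Five invented speed snapshots (km/h) for the three segments.
snapshots = [
    [50.0, 42.0, 30.0],
    [48.0, 40.0, 28.0],
    [46.0, 37.0, 27.0],
    [45.0, 35.0, 25.0],
    [44.0, 33.0, 24.0],
]
features = sandwich(snapshots, adjacency, kernel=[0.5, 0.5])
# Each temporal layer with a length-2 kernel removes one time step: 5 -> 4 -> 3.
print(len(features), len(features[0]))
```

In a full model, the resulting features would be passed to the prediction network that produces speeds for each road segment at future intervals.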

Mixture of experts

Due to the complexity of traffic prediction in large-scale road networks, a single STGCN does not work as well as an ensemble of several models.

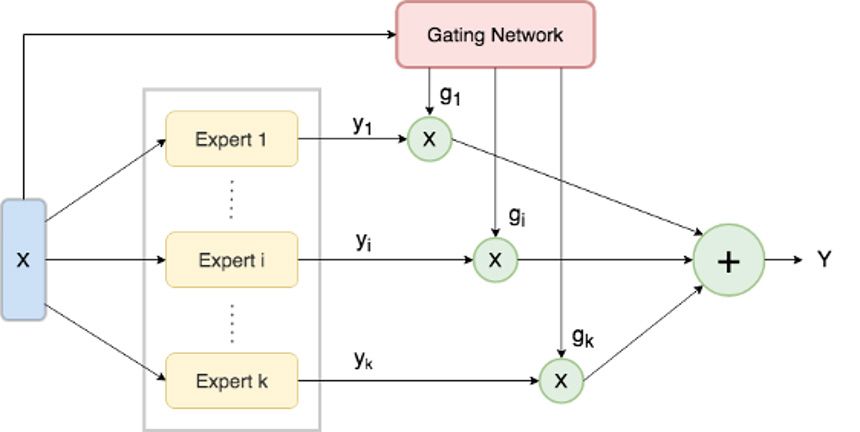

Mixture of experts is an ensemble learning approach, where a gating network learns which of several STGCNs to use under different conditions.

Mixture of experts and gating network
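The gating idea can be sketched in a few lines. This sketch assumes a soft gate: a linear layer followed by a softmax that weights every expert's prediction, which is one standard mixture-of-experts formulation and may differ from the gating used in the NUS paper. The two "experts" are hypothetical stand-in functions, not trained STGCNs, and the gate weights are hand-picked rather than learned.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def mixture_predict(features, experts, gate_weights):
    """Combine expert predictions using weights produced by the gate.

    gate_weights holds one row of linear weights per expert (hypothetical
    values here; in practice they are learned jointly with the experts).
    """
    scores = [sum(w * f for w, f in zip(row, features)) for row in gate_weights]
    gates = softmax(scores)
    predictions = [expert(features) for expert in experts]
    combined = sum(g * p for g, p in zip(gates, predictions))
    return combined, gates, predictions

# Stand-ins for two trained experts; each returns a speed in km/h.
def free_flow_expert(features):
    return 60.0 - 5.0 * features[0]

def congested_expert(features):
    return 25.0 - 10.0 * features[0]

# features = [congestion level in 0..1, peak-hour flag]; invented values.
features = [0.9, 1.0]
gate_weights = [[-4.0, -1.0], [4.0, 1.0]]

speed, gates, predictions = mixture_predict(
    features, [free_flow_expert, congested_expert], gate_weights
)
print([round(g, 4) for g in gates], round(speed, 2))
```

With heavy congestion at peak hour, the gate places almost all of its weight on the congested-traffic expert, so the combined prediction sits close to that expert's output.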

“Having, let’s say, four STGCNs, you increase the number of parameters 4X, training computational requirements increase 4X, memory increases 4X,” explains Professor Tham.

In this case, the extra computational burden turned out to be worthwhile, yielding a 5-10% improvement in prediction accuracy.

IPU advantage

Professor Tham cites the IPU’s MIMD architecture as one of the reasons that Graphcore systems perform so well at mixture of experts STGCNs: “[The IPU] allows multiple instruction and multiple data to be processed on different tiles. This is very useful when you have operations that are not homogeneous.

“Because you are having multiple expert neural networks, parallelisation on multiple tiles and IPUs obviously helps in speeding up mixture of expert computations.

“It would probably be less straightforward to have a gating network and an expert network running on the GPU at the same time. For that, you definitely need an IPU, since the operations in the gating network are different from the operations in the expert neural network,” says Professor Tham.

The NUS researchers saw a speedup of 3X to 4X going from GPUs to the Graphcore IPU. Their paper, listed in the references below, has the full results.

Reinforcement learning

Beyond traffic speed prediction using STGCNs with mixture of experts, the NUS team is looking at deep reinforcement learning where an intelligent agent can make recommendations about actions to take based on an analysis of the road network’s current state. These insights could help inform how cars should be driven, timing of traffic light signals, and other real-world actions.

This computationally intensive task has traditionally resisted acceleration because, according to Professor Tham: “the [simulated] environment in a deep reinforcement learning problem today is largely running on a CPU, and the intelligent agent runs on the hardware acceleration platform.

“There is an I/O bottleneck between the CPU and the hardware acceleration platform.”

One way around this would be to run the simulated environment on the hardware acceleration platform – a solution which particularly suits Graphcore systems.

“In this case, the IPU has advantages again because of the MIMD paradigm,” says Professor Tham. “The environment can be in different states. It doesn’t need to be synchronised with other environments under consideration and we can use this capability of the IPU to explore multiple environments concurrently.”
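As a rough illustration of environments that do not need to stay in lockstep, the sketch below advances several toy environments by different numbers of steps. Everything in it is hypothetical: `ToyTrafficEnv` is an invented single-junction queue model, the policy is a fixed rule and not a learned agent, and plain Python runs the environments one after another, whereas the point made in the quote is that hardware could run them concurrently.

```python
import random

class ToyTrafficEnv:
    """Invented stand-in for a traffic simulator: one queue at one signal."""

    def __init__(self, seed):
        self.rng = random.Random(seed)
        self.queue = 0   # vehicles waiting at the signal
        self.steps = 0   # how far this environment has advanced

    def step(self, green):
        arrivals = self.rng.randint(0, 3)
        departures = 4 if green else 0
        self.queue = max(0, self.queue + arrivals - departures)
        self.steps += 1
        return self.queue

def policy(queue):
    """Fixed rule standing in for the agent: go green when the queue is long."""
    return queue >= 5

# Four independent environments, each with its own random stream.
envs = [ToyTrafficEnv(seed) for seed in range(4)]

# Each environment advances a different number of steps, so after this loop
# they are all in different states and at different points in time.
for env, num_steps in zip(envs, [3, 5, 8, 13]):
    for _ in range(num_steps):
        env.step(policy(env.queue))

print([(env.steps, env.queue) for env in envs])
```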

Professor Tham and his research team are using IPUs as part of the Graphcore Machine Intelligence Academy.

References:

R. Chattopadhyay and C. K. Tham, “Mixture of Experts based Model Integration for Traffic State Prediction,” in IEEE Vehicular Technology Conference (VTC) 2022, Helsinki, Finland, June 2022.

B. Yu, H. Yin, and Z. Zhu, “Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting,” in Proceedings of the 27th International Joint Conference on Artificial Intelligence (IJCAI), 2018.