Every day, big ideas in machine intelligence are being brought to life in research labs, business and universities. The outcomes are changing our world for the better.

We are already seeing AI help in the discovery of life-saving drug treatments for serious medical conditions. Autonomous vehicles are benefitting from advances in computer vision and intelligent decision making, and epidemiologists have used Graphcore technology to help predict the spread of Covid-19.

Having the right tools is an essential part of that discovery process, and the more people that have access to leading-edge AI compute, the more breakthrough applications will be developed.

Graphcore’s new IPU-POD16 DA (Direct Attach) is a powerful, yet compact and affordable system that provides the perfect on-ramp for innovators to explore new machine intelligence approaches with IPUs.

The IPU-POD16 DA allows teams to move from proof of concept projects to pre-production pilots, demonstrating higher performance and TCO advantage for both training and inference workloads.

The system delivers an incredible 4 petaFLOPS of FP16.16 AI compute, courtesy of its four IPU-M2000 blades, directly attached to a Graphcore approved host server.

Its compact 5U form factor offers excellent compute density in the datacenter and, thanks to the modular nature of the IPU-POD16 DA, the core IPU-M2000 building blocks and host server can easily be re-configured as part of larger, switched IPU-POD systems in the future.

Performance

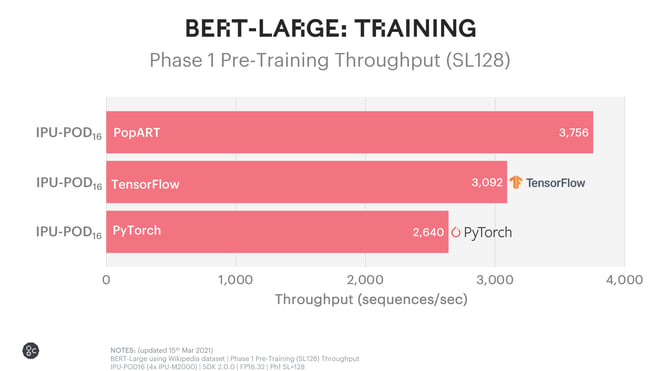

New benchmarks demonstrate the power of the Graphcore IPU-POD16, running some of the most widely deployed training workloads on TensorFlow, PyTorch and Graphcore’s native PopART:

Better by design

Each IPU-POD16 is powered by 16 Graphcore GC200 IPUs, providing a total of 23,552 independent, parallel processing cores, capable of running 141,312 threads.

This enables the IPU to perform fine-grained, parallel compute - a crucial capability for handling AI data and data structures which can be both irregular and sparse.

The IPU-POD16 DA’s processing power is complemented by Graphcore’s high-performance Exchange-MemoryTM. This includes a total of 14.4GB of in-processor memory, evenly distributed across the 23,552 cores. Each core has its own block of memory that sits adjacent to it on the silicon - a unique feature of the IPU architecture that enables memory access with a bandwidth of 180 TB/s. In-processor memory works hand-in-hand with the IPU-POD16's 512GB of streaming memory.

Communications across the IPU-POD16 DA take place across IPU-Fabric, our jitter-free communications fabric that extends inter-IPU connectivity across the entire system, with a bandwidth of 2.8Tbps.

Putting users in control of the IPU-POD16 DA and unleashing the power of this new hardware configuration is Graphcore’s Poplar SDK. Poplar attends to crucial functions such as scheduling compiled communications and compute, integrating with popular machine learning frameworks such as PyTorch and TensorFlow, as well as enabling users to program directly in Python and C++.

Our open-source PopLibs Poplar libraries provide optimisations for the most widely used model implementations, with an active machine learning community contributing to updates.

In cases where customers wish to allocate the IPU-POD16 DA's compute resource to more than one person or task, Graphcore’s Virtual-IPU enables multiple users and workloads.

Taken together, this powerful new hardware configuration, enabled by Poplar, represents an incubator for innovation. There’s a good chance that the ideas which define next generation machine intelligence will begin on an IPU-POD16 DA.

IPU-POD16 DA systems are shipping in production today and are available to order from Graphcore elite channel partners globally. To speak to sales or to find out more please contact us.